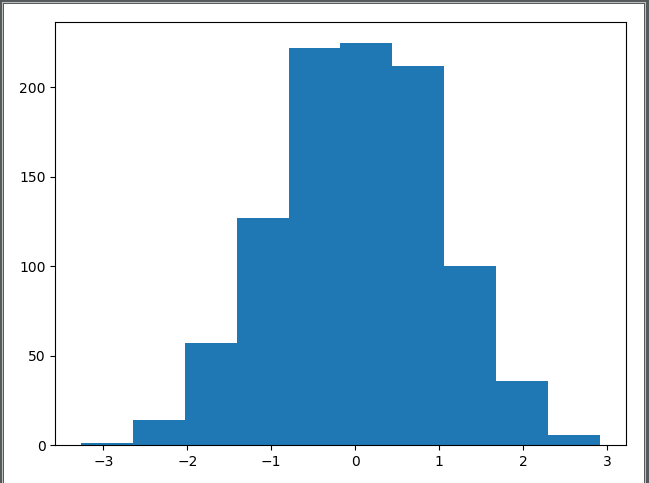

    import tensorflow as tf
    import numpy as np
    import matplotlib.pyplot as plt

    # Draw 1000 samples from a standard normal distribution (TensorFlow 1.x API).
    ts_norm = tf.random_normal([1000])
    with tf.Session() as sess:
        norm_data = ts_norm.eval()
        print(norm_data[:5])

    # Plot a histogram of the samples to check that they look normally distributed.
    plt.hist(norm_data)
    plt.show()

    def layer_debug(output_dim, input_dim, inputs, activation=None):
        # Build a fully connected layer and also return W and b for inspection.
        W = tf.Variable(tf.random_normal([input_dim, output_dim]))
        b = tf.Variable(tf.random_normal([1, output_dim]))
        XWb = tf.matmul(inputs, W) + b
        if activation is None:
            outputs = XWb
        else:
            outputs = activation(XWb)
        return outputs, W, b

    # Network: 4 inputs -> hidden layer of 3 units (ReLU) -> 2 outputs (linear).
    X = tf.placeholder("float", [None, 4])
    h, W1, b1 = layer_debug(output_dim=3, input_dim=4, inputs=X,
                            activation=tf.nn.relu)
    y, W2, b2 = layer_debug(output_dim=2, input_dim=3, inputs=h)

    with tf.Session() as sess:
        init = tf.global_variables_initializer()
        sess.run(init)
        X_array = np.array([[0.4, 0.2, 0.4, 0.5]])
        # Evaluate every layer and parameter in one run; after this call
        # W1, W2, b1, b2 refer to NumPy arrays rather than tf.Variable objects.
        (layer_X, layer_h, layer_y, W1, W2, b1, b2) = sess.run(
            (X, h, y, W1, W2, b1, b2), feed_dict={X: X_array})
        print('input layer x:'); print(layer_X)
        print('w1:'); print(W1)
        print('b1:'); print(b1)
        print('input layer h:'); print(layer_h)  # hidden-layer output
        print('w2:'); print(W2)
        print('b2:'); print(b2)
        print('input layer y:'); print(layer_y)  # output-layer result

Output: