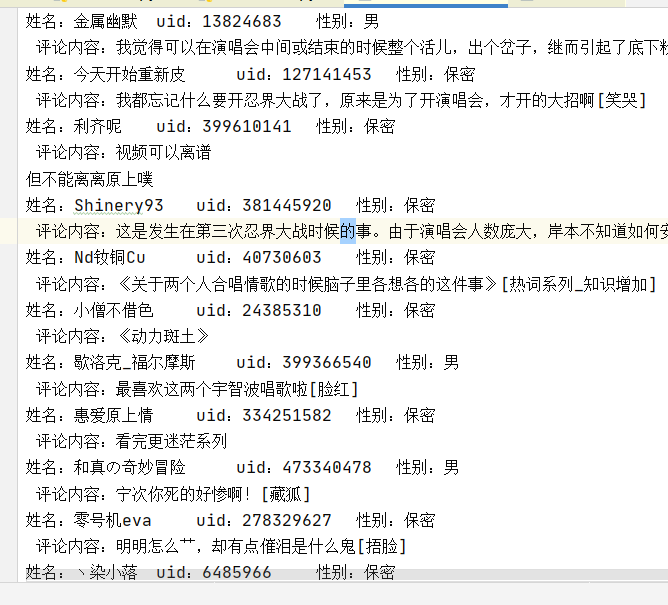

import requests import time from bs4 import BeautifulSoup import json # 必要的库 def get_html(url): headers = { 'accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8', 'user-agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) appleWebKit/537.36 (KHTML, like Gecko) Chrome/68.0.3440.106 Safari/537.36', } # 模拟访问信息 r = requests.get(url, timeout=30, headers=headers) r.raise_for_status() r.endcodding = 'utf-8' return r.text def get_content(url): comments = [] html = get_html(url) try: s = json.loads(html) except: print("jsonload error") num = len(s['data']['replies']) # 获取每页评论栏的数量 i = 0 while i < num: comment = s['data']['replies'][i] # 获取每栏信息 InfoDict = {} # 存储每组信息字典 InfoDict['用户名'] = comment['member']['uname'] InfoDict['uid号'] = comment['member']['mid'] InfoDict['评论内容'] = comment['content']['message'] InfoDict['性别'] = comment['member']['sex'] comments.append(InfoDict) i+=1 return comments def Out2File(dict): with open('评论区爬取.txt', 'a+', encoding='utf-8') as f: for user in dict: try: f.write('姓名:{}\t uid:{}\t 性别:{}\t \n 评论内容:{}\t \n'.format(user['用户名'], user['uid号'], user['性别'], user['评论内容'])) except: print("out2File error") print('当前页面保存完成') e = 0 page = 1 while e == 0: url = "https://api.bilibili.com/x/v2/reply/main?&jsonp=jsonp&next=" + str(page) + "&type=1&oid=677870443&mode=3&plat=1&_=1641278727643" try: print() content = get_content(url) print("page:", page) Out2File(content) page = page + 1 # 为了降低被封ip的风险,每爬10页便歇5秒。 if page % 10 == 0: # 求余数 time.sleep(5) except: e = 1

参考视频:https://www.bilibili.com/video/BV1fu411d7Hy?from=search&seid=3483579157564497530&spm_id_from=333.337.0.0