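1. Introduction to the BeautifulSoup4 library

What is BeautifulSoup?

Answer: a parsing library for extracting data from HTML and XML documents. It supports several parsers and can stand in for complicated regular-expression code.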

2. Installation

pip3 install beautifulsoup4

3. Usage in detail

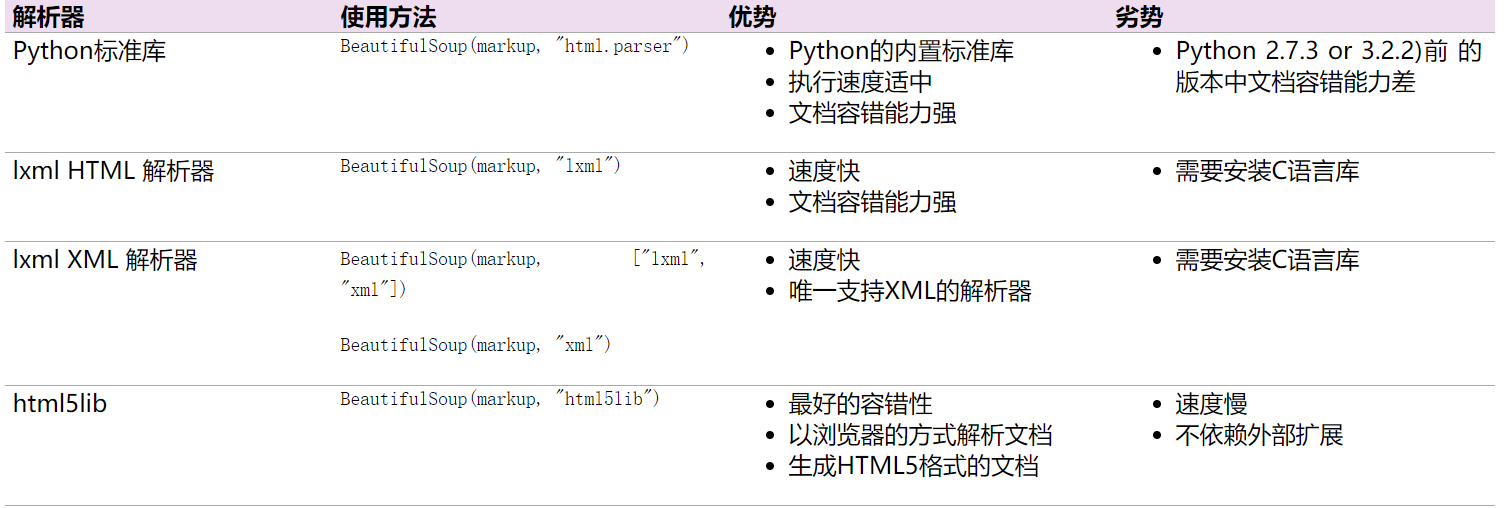

(1) Parser comparison (the first argument, markup, is the document to parse; the second argument names the parser)
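As a minimal sketch of those two constructor arguments, the snippet below parses one fragment with Python's built-in `html.parser`. The fragment `markup` is made up for this illustration, and the commented-out lines assume that `lxml` and `html5lib` have been installed separately.

```python
from bs4 import BeautifulSoup

# Hypothetical fragment used only for this illustration.
markup = "<p class='intro'>Hello, <b>soup</b></p>"

# First argument: the markup to parse. Second argument: the parser name.
soup = BeautifulSoup(markup, "html.parser")   # ships with Python, nothing extra to install
# soup = BeautifulSoup(markup, "lxml")        # assumes `pip3 install lxml`
# soup = BeautifulSoup(markup, "xml")         # lxml's XML parser, also needs lxml
# soup = BeautifulSoup(markup, "html5lib")    # assumes `pip3 install html5lib`

intro_text = soup.p.get_text()   # all text inside <p>, nested tags included
bold_text = soup.b.string        # the single string inside <b>
print(intro_text)
print(bold_text)
```

Different parsers may repair broken markup differently, so it is worth naming the parser explicitly instead of relying on whatever happens to be installed.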

(2) How to use it (the "Nine Swords of Dugu")

1. The General Formula:

    # author: "xian"
    # date: 2018/5/7
    # The markup below is an excerpt from Alice in Wonderland
    html = """
    <html><head><title>The Dormouse's story</title></head>
    <body>
    <p class="title"><b>The Dormouse's story</b></p>
    <p class="story">Once upon a time there were three little sisters; and their names were
    <a href="http://example.com/elsie" class="sister" id="link1">Elsie</a>,
    <a href="http://example.com/lacie" class="sister" id="link2">Lacie</a> and
    <a href="http://example.com/tillie" class="sister" id="link3">Tillie</a>; and they lived at the bottom of a well.</p>
    <p class="story">...</p>
    """
    # A first try
    from bs4 import BeautifulSoup  # import the BeautifulSoup class from the bs4 package

    soup = BeautifulSoup(html, 'lxml')  # build a BeautifulSoup object named soup
    print(soup.prettify())        # prettify() re-indents the markup so it is easier on the eyes
    print(soup.a)                 # select the first <a> tag
    print(soup.a['class'])        # value of the class attribute (a list, because class is multi-valued)
    print(soup.a.name)            # the tag's name; soup.a.parent.name gives the name of its parent
    print(soup.a.string)          # the text inside the <a> tag
    print(soup.find_all('a'))     # find every <a> tag
    print(soup.find(id="link3"))  # find the tag whose id is link3

    # Find the links
    for link in soup.find_all('a'):
        print(link.get('href'))   # loop over every <a> tag and read its href

    # Find the text
    print(soup.a.get_text())      # text of the first <a> tag; any other tag works the same way

    # Output of the code above
    '''
    <html>
     <head>
      <title>
       The Dormouse's story
      </title>
     </head>
     <body>
      <p class="title">
       <b>
        The Dormouse's story
       </b>
      </p>
      <p class="story">
       Once upon a time there were three little sisters; and their names were
       <a class="sister" href="http://example.com/elsie" id="link1">
        Elsie
       </a>
       ,
       <a class="sister" href="http://example.com/lacie" id="link2">
        Lacie
       </a>
       and
       <a class="sister" href="http://example.com/tillie" id="link3">
        Tillie
       </a>
       ; and they lived at the bottom of a well.
      </p>
      <p class="story">
       ...
      </p>
     </body>
    </html>
    <a class="sister" href="http://example.com/elsie" id="link1">Elsie</a>
    ['sister']
    a
    Elsie
    [<a class="sister" href="http://example.com/elsie" id="link1">Elsie</a>, <a class="sister" href="http://example.com/lacie" id="link2">Lacie</a>, <a class="sister" href="http://example.com/tillie" id="link3">Tillie</a>]
    <a class="sister" href="http://example.com/tillie" id="link3">Tillie</a>
    http://example.com/elsie
    http://example.com/lacie
    http://example.com/tillie
    Elsie
    '''

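Once you know these methods, you can pull out whatever else you need in the same way. For details, see the official documentation: https://www.crummy.com/software/BeautifulSoup/bs4/doc.zh/

2. The Sword-Breaking Form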

    # author: "xian"
    # date: 2018/5/7
    html = """
    <html>
        <head>
            <title>The Dormouse's story</title>
        </head>
        <body>
            <p class="story">
                Once upon a time there were three little sisters; and their names were
                <a href="http://example.com/elsie" class="sister" id="link1">
                    <span>Elsie</span>
                </a>
                <a href="http://example.com/lacie" class="sister" id="link2">Lacie</a>
                and
                <a href="http://example.com/tillie" class="sister" id="link3">Tillie</a>
                and they lived at the bottom of a well
            </p>
            <p class="story">...</p>
    """
    # Selecting child and descendant nodes (parent and ancestor nodes work the same way)
    from bs4 import BeautifulSoup

    soup = BeautifulSoup(html, 'lxml')
    print(soup.p.contents)  # .contents returns the direct children as a list
    print(soup.p.children)  # an iterator over the direct children only; loop over it to see the items
    for i, child in enumerate(soup.p.children):  # enumerate() pairs each item with its index
        print(i, child)     # i is the index, child is the node
    print(soup.p.descendants)  # a generator over all descendants: children, grandchildren and so on
    for i, child in enumerate(soup.p.descendants):
        print(i, child)

    # Output of the code above (runs of whitespace and newlines are shown collapsed)
    '''
    [' Once upon a time there were three little sisters; and their names were ', <a class="sister" href="http://example.com/elsie" id="link1"> <span>Elsie</span> </a>, ' ', <a class="sister" href="http://example.com/lacie" id="link2">Lacie</a>, ' and ', <a class="sister" href="http://example.com/tillie" id="link3">Tillie</a>, ' and they lived at the bottom of a well ']
    <list_iterator object at 0x00000156B2E76EF0>
    0 Once upon a time there were three little sisters; and their names were
    1 <a class="sister" href="http://example.com/elsie" id="link1"> <span>Elsie</span> </a>
    2
    3 <a class="sister" href="http://example.com/lacie" id="link2">Lacie</a>
    4 and
    5 <a class="sister" href="http://example.com/tillie" id="link3">Tillie</a>
    6 and they lived at the bottom of a well
    <generator object descendants at 0x00000156B08910F8>
    0 Once upon a time there were three little sisters; and their names were
    1 <a class="sister" href="http://example.com/elsie" id="link1"> <span>Elsie</span> </a>
    2
    3 <span>Elsie</span>
    4 Elsie
    5
    6
    7 <a class="sister" href="http://example.com/lacie" id="link2">Lacie</a>
    8 Lacie
    9 and
    10 <a class="sister" href="http://example.com/tillie" id="link3">Tillie</a>
    11 Tillie
    12 and they lived at the bottom of a well
    '''

    # Parent and ancestor nodes: .parent is the counterpart of .children, and .parents
    # is the counterpart of .descendants. Try them out the same way as above.
    # Sibling nodes: .next_siblings yields the siblings after the current node,
    # .previous_siblings yields the siblings before it. Give these a try as well.
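The parent, ancestor and sibling attributes that the closing comments leave as an exercise can be sketched as follows. This is only an illustration: it uses a shortened, single-line version of the markup and the built-in `html.parser`, so the whitespace-only text nodes seen above do not appear, and the variable names are made up for the example.

```python
from bs4 import BeautifulSoup
from bs4.element import Tag

# Shortened markup, written on one line to avoid whitespace-only text nodes.
html = (
    '<html><body><p class="story">Once upon a time '
    '<a id="link1"><span>Elsie</span></a>, '
    '<a id="link2">Lacie</a> and '
    '<a id="link3">Tillie</a>.</p></body></html>'
)
soup = BeautifulSoup(html, "html.parser")
span = soup.span

# .parent is the single enclosing tag.
parent_name = span.parent.name

# .parents walks upward through every ancestor, ending at the BeautifulSoup object.
ancestor_names = [node.name for node in span.parents]

# Siblings include text nodes, so keep only Tag objects before reading attributes.
later_ids = [s["id"] for s in soup.a.next_siblings if isinstance(s, Tag)]
last_link = soup.find(id="link3")
earlier_ids = [s["id"] for s in last_link.previous_siblings if isinstance(s, Tag)]

print(parent_name)
print(ancestor_names)
print(later_ids)
print(earlier_ids)   # nearest sibling first, so the order is reversed
```

Filtering with `isinstance(s, Tag)` matters because the commas and the word "and" between the links are siblings too, and plain strings have no attributes to index.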

3. The Saber-Breaking Form

    # author: "xian"
    # date: 2018/5/7
    # Searching the document: find_all() and find()
    html = """
    <html><head><title>The Dormouse's story</title></head>

    <p class="title"><b>The Dormouse's story</b></p>

    <p class="story">Once upon a time there were three little sisters; and their names were
    <a href="http://example.com/elsie" class="sister" id="link1">Elsie</a>,
    <a href="http://example.com/lacie" class="sister" id="link2">Lacie</a> and
    <a href="http://example.com/tillie" class="sister" id="link3">Tillie</a>;
    and they lived at the bottom of a well.</p>

    <p class="story">...</p>
    """
    from bs4 import BeautifulSoup
    import re

    soup = BeautifulSoup(html, 'lxml')
    # (1) find_all(name, attrs, recursive, text, **kwargs)
    # The name argument. The text argument (called string since Beautiful Soup 4.4.0) is used
    # in a similar way, e.g. soup.find_all(text=["Tillie", "Elsie", "Lacie"]), but returns only
    # the matching strings. See https://www.crummy.com/software/BeautifulSoup/bs4/doc.zh/
    print(soup.find_all('head'))      # find the <head> tag
    print(soup.find_all(id='link2'))  # find tags whose id is 'link2'
    print(soup.find_all(href=re.compile(r"(\w+)")))  # tags whose href contains a word character
    print(soup.find_all(href=re.compile(r"(\w+)"), id='link1'))  # several filters combined
    # An attribute filter can be a string, a regular expression, a list, or True

    # The attrs argument
    print(soup.find_all(attrs={'id': 'link2'}))        # attrs takes a dict of attribute names and values
    print(type(soup.find_all(attrs={'id': 'link2'})))  # the result is a ResultSet, a list subclass

    # (2) find(name, attrs, recursive, text, **kwargs) works like find_all() but returns
    # only the first match; see the official documentation for details.

    # (3) Other search methods (just be aware of them and look them up when needed):
    # find_parents() / find_parent(): all matching ancestors / the nearest matching ancestor
    # find_next_siblings() / find_next_sibling(): all matching later siblings / the first one
    # find_previous_siblings() / find_previous_sibling(): all matching earlier siblings / the first one
    # find_all_next() / find_next(): all matching nodes after this one / the first one
    # find_all_previous() / find_previous(): all matching nodes before this one / the first one

    # Output of the code above:
    '''
    [<head><title>The Dormouse's story</title></head>]
    [<a class="sister" href="http://example.com/lacie" id="link2">Lacie</a>]
    [<a class="sister" href="http://example.com/elsie" id="link1">Elsie</a>, <a class="sister" href="http://example.com/lacie" id="link2">Lacie</a>, <a class="sister" href="http://example.com/tillie" id="link3">Tillie</a>]
    [<a class="sister" href="http://example.com/elsie" id="link1">Elsie</a>]
    [<a class="sister" href="http://example.com/lacie" id="link2">Lacie</a>]
    <class 'bs4.element.ResultSet'>
    '''
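To make the difference between `find()` and `find_all()` concrete, here is a small sketch that also tries a few of the filter types listed above and one of the sibling search methods. It assumes the built-in `html.parser` and a trimmed copy of the sample markup; the variable names are illustrative only.

```python
import re
from bs4 import BeautifulSoup

# Trimmed copy of the sample markup, kept on one line per link.
html = (
    '<p class="story">'
    '<a href="http://example.com/elsie" class="sister" id="link1">Elsie</a>, '
    '<a href="http://example.com/lacie" class="sister" id="link2">Lacie</a> and '
    '<a href="http://example.com/tillie" class="sister" id="link3">Tillie</a>'
    '</p>'
)
soup = BeautifulSoup(html, "html.parser")

first_link = soup.find("a")      # find() returns one Tag ...
missing = soup.find("table")     # ... or None when nothing matches
first_two = soup.find_all("a", limit=2)          # limit stops after N matches
by_class = soup.find_all("a", class_="sister")   # class_ avoids the Python keyword
by_regex = soup.find_all(href=re.compile(r"/l\w+$"))  # regex filter on an attribute
with_id = soup.find_all(id=True)                 # True matches any tag that has the attribute
next_link = first_link.find_next_sibling("a")    # first later sibling that is an <a>

print(first_link["id"], missing)
print([a["id"] for a in first_two])
print([a["id"] for a in by_regex])
print(next_link["id"])
```

Because `find()` can return `None`, code that chains attribute access onto its result should check for `None` first.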

4. The Spear-Breaking Form

    # author: "xian"
    # date: 2018/5/7
    # CSS selectors (pass a CSS selector to select() to pick out elements)
    html = """
    <html><head><title>The Dormouse's story</title></head>
    <body>
    <p class="title"><b>The Dormouse's story</b></p>

    <p class="story">Once upon a time there were three little sisters; and their names were
    <a href="http://example.com/elsie" class="sister" id="link1">Elsie</a>,
    <a href="http://example.com/lacie" class="sister" id="link2">Lacie</a> and
    <a href="http://example.com/tillie" class="sister" id="link3">Tillie</a>;
    and they lived at the bottom of a well.</p>

    <p class="story">...</p>
    """
    from bs4 import BeautifulSoup

    soup = BeautifulSoup(html, 'lxml')
    print(soup.select('.title'))      # tags whose class is title; see the official docs for more selectors
    # One more example
    print(soup.select('p a#link1'))   # the <a> with id link1 that is a descendant of a <p>
    print(soup.select('a')[1])        # index into the result list to get the second <a> tag
    # Get the text
    print(soup.select('a')[1].get_text())

    # Output of the code above:
    '''
    [<p class="title"><b>The Dormouse's story</b></p>]
    [<a class="sister" href="http://example.com/elsie" id="link1">Elsie</a>]
    <a class="sister" href="http://example.com/lacie" id="link2">Lacie</a>
    Lacie
    '''
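A few more selector patterns are sketched below as a hedged illustration. It assumes a reasonably recent Beautiful Soup in which `select()` is backed by the Soup Sieve package, plus the built-in `html.parser`; the markup is a trimmed copy of the sample and the variable names are made up.

```python
from bs4 import BeautifulSoup

# Trimmed copy of the sample markup.
html = (
    '<p class="title"><b>The Dormouse\'s story</b></p>'
    '<p class="story">'
    '<a href="http://example.com/elsie" class="sister" id="link1">Elsie</a>, '
    '<a href="http://example.com/lacie" class="sister" id="link2">Lacie</a> and '
    '<a href="http://example.com/tillie" class="sister" id="link3">Tillie</a>'
    '</p>'
)
soup = BeautifulSoup(html, "html.parser")

first_sister = soup.select_one("a.sister")      # first match, or None if there is none
child_links = soup.select("p.story > a")        # ">" selects direct children only
ends_with = soup.select('a[href$="tillie"]')    # attribute value ending in "tillie"
hrefs = [a["href"] for a in soup.select("p.story a")]   # read an attribute from each match
names = [a.get_text() for a in soup.select("a")]        # read the text of each match

print(first_sister["id"])
print(len(child_links))
print(ends_with[0]["id"])
print(names)
```

`select()` always returns a list, even for a single match, while `select_one()` returns the element itself, which saves the `[0]` when only one result is expected.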

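After working through the experiments above, you should have a basic feel for the bs4 library. Now go and try it yourself to see how much has stuck!

Summary:

1. The lxml parser is the recommended choice.

2. Use find_all() and find() often.

3. Get comfortable with the CSS-based select() method.

4. Practice a lot. Diligence makes up for lack of talent, and practice makes perfect; that is how mastery comes.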