本次课堂测试的要求如下:

但是第一次做还不成熟,只能完成带一部分的前两个要求。

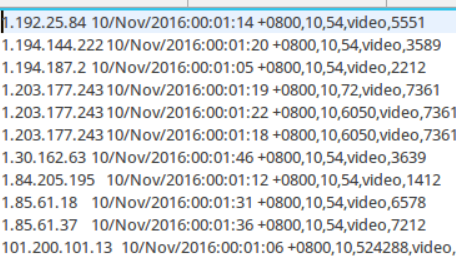

Result文件数据说明:

Ip:106.39.41.166,(城市)

Date:10/Nov/2016:00:01:02 +0800,(日期)

Day:10,(天数)

Traffic: 54 ,(流量)

Type: video,(类型:视频video或文章article)

Id: 8701(视频或者文章的id)

测试要求:

1、 数据清洗:按照进行数据清洗,并将清洗后的数据导入hive数据库中。

两阶段数据清洗:

(1)第一阶段:把需要的信息从原始日志中提取出来

ip: 199.30.25.88

time: 10/Nov/2016:00:01:03 +0800

traffic: 62

文章: article/11325

视频: video/3235

(2)第二阶段:根据提取出来的信息做精细化操作

ip--->城市 city(IP)

date--> time:2016-11-10 00:01:03

day: 10

traffic:62

type:article/video

id:11325

(3)hive数据库表结构:

create table data( ip string, time string , day string, traffic bigint, type string, id string )

2、数据处理:

·统计最受欢迎的视频/文章的Top10访问次数 (video/article)

·按照地市统计最受欢迎的Top10课程 (ip)

·按照流量统计最受欢迎的Top10课程 (traffic)

3、数据可视化:将统计结果倒入MySql数据库中,通过图形化展示的方式展现出来。

Result.txt文件内容如下:

源码:

1 package hivetest; 2 3 import java.io.IOException; 4 5 import org.apache.hadoop.conf.Configuration; 6 import org.apache.hadoop.fs.Path; 7 import org.apache.hadoop.io.LongWritable; 8 import org.apache.hadoop.io.Text; 9 import org.apache.hadoop.mapreduce.Job; 10 import org.apache.hadoop.mapreduce.Mapper; 11 import org.apache.hadoop.mapreduce.Reducer; 12 import org.apache.hadoop.mapreduce.lib.input.FileInputFormat; 13 import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat; 14 public class Hivetest1 { 15 16 // static ClassService service=new ClassService(); 17 public static class MyMapper extends Mapper<LongWritable, Text, Text/*map对应键类型*/, Text/*map对应值类型*/> 18 { 19 protected void map(LongWritable key, Text value,Context context)throws IOException, InterruptedException 20 { 21 String[] strNlist = value.toString().split(",");//如何分隔 22 //LongWritable,IntWritable,Text等 23 24 context.write(new Text(strNlist[0])/*map对应键类型*/,new Text(strNlist[1]+","+strNlist[2]+","+strNlist[3]+","+strNlist[4]+","+strNlist[5])/*map对应值类型*/); 25 } 26 } 27 public static class MyReducer extends Reducer<Text/*map对应键类型*/, Text/*map对应值类型*/, Text/*reduce对应键类型*/, Text/*reduce对应值类型*/> 28 { 29 // static No1Info info=new No1Info(); 30 protected void reduce(Text key, Iterable<Text/*map对应值类型*/> values,Context context)throws IOException, InterruptedException 31 { 32 for (/*map对应值类型*/Text init : values) 33 { 34 context.write( key/*reduce对应键类型*/, new Text(init)/*reduce对应值类型*/); 35 } 36 } 37 } 38 39 public static void main(String[] args) throws Exception { 40 Configuration conf = new Configuration(); 41 Job job = Job.getInstance(); 42 job.setJarByClass(Hivetest1.class); 43 job.setMapperClass(MyMapper.class); 44 job.setMapOutputKeyClass(/*map对应键类型*/Text.class); 45 job.setMapOutputValueClass( /*map对应值类型*/Text.class); 46 // TODO: specify a reducer 47 job.setReducerClass(MyReducer.class); 48 job.setOutputKeyClass(/*reduce对应键类型*/Text.class); 49 job.setOutputValueClass(/*reduce对应值类型*/Text.class); 50 51 // TODO: specify input and output DIRECTORIES (not files) 52 FileInputFormat.setInputPaths(job, new Path("hdfs://localhost:9000/user/hive/warehouse/test/result.txt")); 53 FileOutputFormat.setOutputPath(job, new Path("hdfs://localhost:9000/user/hive/warehouse/result")); 54 55 boolean flag = job.waitForCompletion(true); 56 System.out.println("完成!"); //任务完成提示 57 System.exit(flag ? 0 : 1); 58 System.out.println(); 59 } 60 61 }

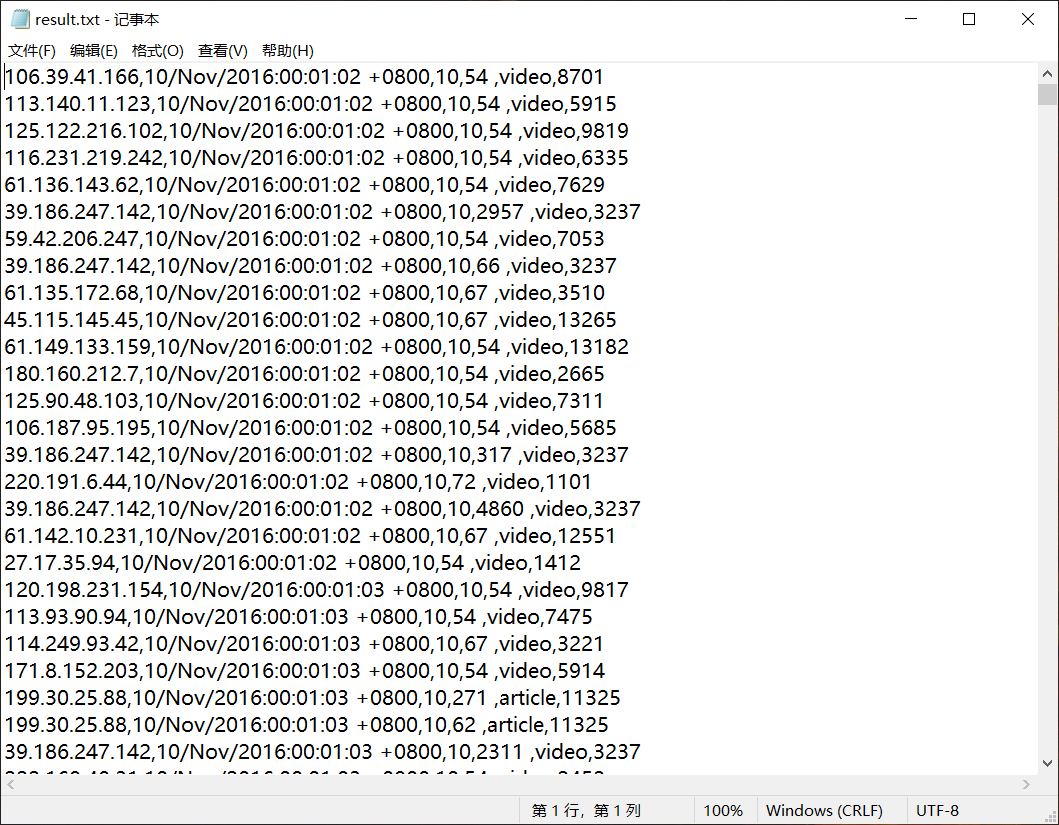

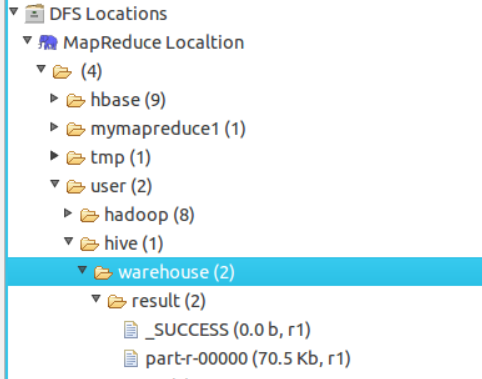

运行结果:

代码②:

1 import java.lang.String; 2 import java.text.SimpleDateFormat; 3 import java.util.Date; 4 import java.util.Locale; 5 import java.io.IOException; 6 import org.apache.hadoop.conf.Configuration; 7 import org.apache.hadoop.fs.Path; 8 import org.apache.hadoop.io.LongWritable; 9 import org.apache.hadoop.io.Text; 10 import org.apache.hadoop.mapreduce.Job; 11 import org.apache.hadoop.mapreduce.Mapper; 12 import org.apache.hadoop.mapreduce.Reducer; 13 import org.apache.hadoop.mapreduce.lib.input.FileInputFormat; 14 import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat; 15 public class sjqx { 16 public static final SimpleDateFormat FORMAT = new SimpleDateFormat("d/MMM/yyyy:HH:mm:ss", Locale.ENGLISH); //原时间格式 17 public static final SimpleDateFormat dateformat1 = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");//现时间格式 18 private static Date parseDateFormat(String string) { //转换时间格式 19 Date parse = null; 20 try { 21 parse = FORMAT.parse(string); 22 } catch (Exception e) { 23 e.printStackTrace(); 24 } 25 return parse; 26 } 27 public static class MyMapper extends Mapper<LongWritable, Text, Text/*map对应键类型*/, Text/*map对应值类型*/> 28 { 29 protected void map(LongWritable key, Text value,Context context)throws IOException, InterruptedException 30 { 31 String[] strNlist = value.toString().split(",");//如何分隔 32 //LongWritable,IntWritable,Text等 33 Date date = parseDateFormat(strNlist[1]); 34 context.write(new Text(strNlist[0])/*map对应键类型*/,new Text(dateformat1.format(date)+" "+strNlist[2]+" "+strNlist[3]+" "+strNlist[4]+" "+strNlist[5])/*map对应值类型*/); 35 } 36 } 37 public static class MyReducer extends Reducer<Text/*map对应键类型*/, Text/*map对应值类型*/, Text/*reduce对应键类型*/, Text/*reduce对应值类型*/> 38 { 39 // static No1Info info=new No1Info(); 40 protected void reduce(Text key, Iterable<Text/*map对应值类型*/> values,Context context)throws IOException, InterruptedException 41 { 42 for (/*map对应值类型*/Text init : values) 43 { 44 45 context.write( key/*reduce对应键类型*/, new Text(init)/*reduce对应值类型*/); 46 } 47 } 48 } 49 50 public static void main(String[] args) throws Exception { 51 Configuration conf = new Configuration(); 52 Job job = Job.getInstance(); 53 //job.setJar("MapReduceDriver.jar"); 54 job.setJarByClass(sjqx.class); 55 // TODO: specify a mapper 56 job.setMapperClass(MyMapper.class); 57 job.setMapOutputKeyClass(/*map对应键类型*/Text.class); 58 job.setMapOutputValueClass( /*map对应值类型*/Text.class); 59 60 // TODO: specify a reducer 61 job.setReducerClass(MyReducer.class); 62 job.setOutputKeyClass(/*reduce对应键类型*/Text.class); 63 job.setOutputValueClass(/*reduce对应值类型*/Text.class); 64 65 // TODO: specify input and output DIRECTORIES (not files) 66 FileInputFormat.setInputPaths(job, new Path("hdfs://localhost:9000/test/in/result")); 67 FileOutputFormat.setOutputPath(job, new Path("hdfs://localhost:9000/test/out")); 68 69 boolean flag = job.waitForCompletion(true); 70 System.out.println("SUCCEED!"+flag); //任务完成提示 71 System.exit(flag ? 0 : 1); 72 System.out.println(); 73 } 74 }

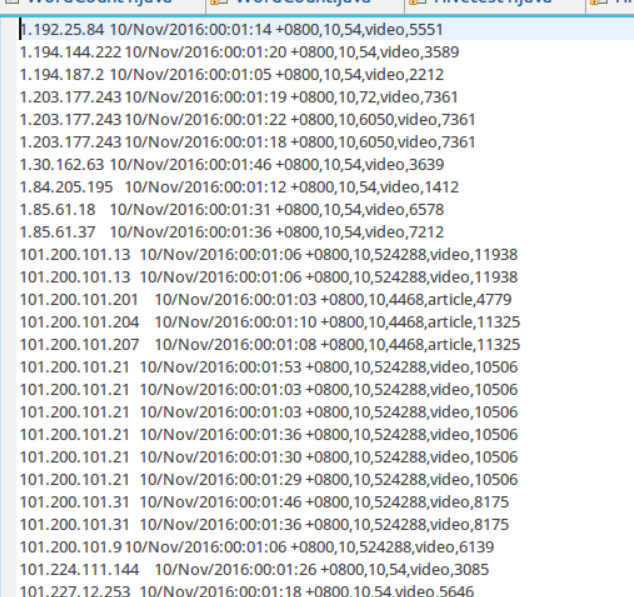

运行结果: