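This article covers:

- User permission management and the authorization flow

- Mounting RBD and CephFS as an ordinary user

- MDS high availability

- Multiple active MDS daemons

- Multiple active MDS daemons plus standbys

I. Ceph user permission management and authorization flow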

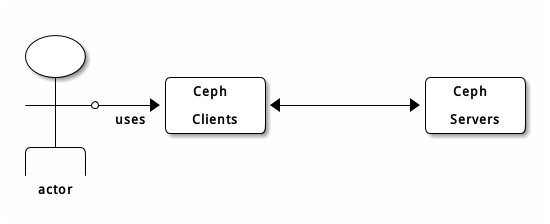

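Identity management in most systems comes down to three things: accounts, roles, and authentication/authorization. A Ceph user can be a person or a system actor (e.g. an application). A Ceph administrator creates users and sets their permissions to control who can access and operate on resources such as the Ceph cluster, pools, or objects.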

Ceph users fall into the following categories:

- Client users

  - Operator users (e.g. client.admin)

  - Application users (e.g. client.cinder)

- Other users

  - Ceph daemon users (e.g. mds.ceph-node1, osd.0)

User names follow the TYPE.ID convention, where TYPE is one of mon, osd, mds, or client; the mgr type was added in the Luminous (L) release.
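To make the TYPE.ID convention concrete, here is a minimal sketch that splits an entity name into its two parts. `split_entity` is a hypothetical helper written for this article, not part of the ceph CLI, and the list of accepted types is an assumption that covers only the types mentioned here.

```shell
# Split a Ceph entity name of the form TYPE.ID into its two parts.
# split_entity is a hypothetical helper, not a ceph command.
split_entity() {
    name=$1
    case "$name" in
        *.*) ;;                       # must contain at least one dot
        *) echo "bad entity name: $name" >&2; return 1 ;;
    esac
    type=${name%%.*}                  # text before the first dot
    id=${name#*.}                     # text after the first dot
    case "$type" in
        mon|osd|mds|mgr|client) ;;    # assumption: only the types named in this article
        *) echo "unknown type: $type" >&2; return 1 ;;
    esac
    [ -n "$id" ] || { echo "empty id in: $name" >&2; return 1; }
    printf '%s %s\n' "$type" "$id"
}

split_entity client.admin     # prints: client admin
split_entity mds.ceph-node1   # prints: mds ceph-node1
split_entity osd.0            # prints: osd 0
```

A mistyped name such as `cliet.jack` is rejected by this check before it ever reaches the cluster.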

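NOTE: Users are created for Ceph daemons because the MON, OSD, MDS and other daemons also follow the CephX protocol, even though they are not clients in the true sense.

A Ceph user's key is the equivalent of a password; in essence it is a unique string. e.g. key: AQB3175c7fuQEBAAMIyROU5o2qrwEghuPwo68g==

A Ceph user's capabilities (caps) are the user's permissions, usually granted when the user is created. Only after capabilities are granted can the user use MON, OSD, and MDS functionality within the granted scope. Capabilities can also restrict which cluster data or namespaces a user may access. Take client.admin and client.tom as examples:

    client.admin
        key: AQBErhthY4YdIhAANKTOMAjkzpKkHSkXSoNpaQ==
        # full access to MDS
        caps: [mds] allow *
        # full access to Mgr
        caps: [mgr] allow *
        # full access to MON
        caps: [mon] allow *
        # full access to OSD
        caps: [osd] allow *

    client.tom
        key: AQAQ5SRhNPftJBAA3lKYmTsgyeA1OQOVo2AwZQ==
        # read-only access to MON
        caps: [mon] allow r
        # read, write and execute on one pool (intended to be rbd-data; the name was typed as rbd-date when this user was created)
        caps: [osd] allow rwx pool=rbd-date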

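Capability types:

- allow: the keyword that precedes the access settings for a daemon; every capability starts with it.

- r: read access. The user can read the state of cluster components (MON/OSD/MDS/CRUSH/PG) but cannot modify it.

- w: write access to objects. Used together with r, it lets the user modify the state of cluster components and run their action commands.

- x: the ability to call class methods (both read and write) and to perform auth operations on the monitors.

- class-read: the ability to call class read methods; a subset of x.

- class-write: the ability to call class write methods; a subset of x.

- *: grants r, w, and x for the given daemon or pool, as well as the ability to run admin commands.

- profile osd: lets the user connect as an OSD to other OSDs or to MONs, so that OSDs can handle replication heartbeats and status reporting.

- profile mds: lets the user connect as an MDS to other MDSs or to MONs.

- profile bootstrap-osd: lets the user bootstrap an OSD daemon. Usually granted to deployment tools (e.g. ceph-deploy) so that they can add keys while bootstrapping an OSD.

- profile bootstrap-mds: lets the user bootstrap an MDS daemon, in the same way as above.

NOTE: As this shows, a Ceph client does not access objects directly; every access goes through the OSD daemons.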
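The r/w/x letters and the optional `pool=` restriction can be modelled in a few lines. This is a deliberately simplified sketch, not how Ceph evaluates caps internally: `cap_allows` and `check` are hypothetical helpers, and the model ignores profiles, namespaces, class-read/class-write and multi-clause cap strings.

```shell
# cap_allows CAPS PERM POOL
# Succeeds if a single-clause cap string such as "allow rw pool=rbd-data"
# grants PERM (r, w or x) on POOL. Simplified model for illustration only.
cap_allows() {
    caps=$1; perm=$2; pool=$3
    set -f                            # keep a literal "*" from expanding to file names
    set -- $caps                      # split into: allow, PERMS, [pool=NAME]
    set +f
    if [ "${1:-}" != "allow" ]; then return 1; fi
    perms=${2:-}
    scope=${3:-}
    if [ "$perms" = "*" ]; then perms=rwx; fi
    case "$perms" in
        *"$perm"*) ;;                 # the requested letter is present
        *) return 1 ;;
    esac
    if [ -z "$scope" ]; then return 0; fi   # no pool= clause: every pool is in scope
    [ "$scope" = "pool=$pool" ]
}

check() {
    if cap_allows "$1" "$2" "$3"; then echo "granted"; else echo "denied"; fi
}

check 'allow rwx pool=rbd-data' w rbd-data   # prints: granted
check 'allow r pool=rbd-data'   w rbd-data   # prints: denied
check 'allow rw pool=rbd-data'  r mypool1    # prints: denied
check 'allow *'                 x rbd-data   # prints: granted
```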

Common user management operations

The Ceph user management command is ceph auth:

    root@node02:~# ceph auth --help
    ...
    auth add <entity> [<caps>...]                add auth info for <entity> from input file, or random key if no input
                                                 is given, and/or any caps specified in the command
    auth caps <entity> <caps>...                 update caps for <name> from caps specified in the command
    auth export [<entity>]                       write keyring for requested entity, or master keyring if none given
    auth get <entity>                            write keyring file with requested key
    auth get-key <entity>                        display requested key
    auth get-or-create <entity> [<caps>...]      add auth info for <entity> from input file, or random key if no input
                                                 given, and/or any caps specified in the command
    auth get-or-create-key <entity> [<caps>...]  get, or add, key for <name> from system/caps pairs specified in the
                                                 command. If key already exists, any given caps must match the
                                                 existing caps for that key.
    auth import                                  auth import: read keyring file from -i <file>
    auth ls                                      list authentication state
    auth print-key <entity>                      display requested key
    auth print_key <entity>                      display requested key
    auth rm <entity>                             remove all caps for <name>

Show the permissions of a given user:

    root@node02:~# ceph auth get client.admin
    [client.admin]
        key = AQBErhthY4YdIhAANKTOMAjkzpKkHSkXSoNpaQ==
        caps mds = "allow *"
        caps mgr = "allow *"
        caps mon = "allow *"
        caps osd = "allow *"
    exported keyring for client.admin
    root@node02:~#

Create users:

    # A capability has the form: {daemon type} 'allow {permissions}'

    # Create client.jack with read access to MON and read/write access to the OSD pool rbd-data
    ceph auth add client.jack mon 'allow r' osd 'allow rw pool=rbd-data'

    # Get client.tom, or create it with read access to MON and read/write access to the OSD pool rbd-data
    ceph auth get-or-create client.tom mon 'allow r' osd 'allow rw pool=rbd-data'

    # Get or create client.jerry with the same caps and write the user's keyring to a file
    ceph auth get-or-create client.jerry mon 'allow r' osd 'allow rw pool=rbd-data' -o jerry.keyring
    # Get or create client.ringo with the same caps and write only its key to a file
    ceph auth get-or-create-key client.ringo mon 'allow r' osd 'allow rw pool=rbd-data' -o ringo.key

Delete a user:

    ceph auth del {TYPE}.{ID}

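The CephX authentication system

Ceph provides two authentication modes: none and cephx. With none, clients can reach the storage cluster without a key, which is clearly not recommended. In practice we enable CephX by editing ceph.conf:

    [global]
    ...
    # Cluster daemons (mon, osd, mds) must authenticate to each other with a keyring
    auth_cluster_required = cephx
    # Clients (e.g. a gateway) must authenticate to the cluster (mon, osd, mds) with a keyring
    auth_service_required = cephx
    # The cluster (mon, osd, mds) must authenticate to clients (e.g. a gateway) with a keyring
    auth_client_required = cephx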
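A quick way to confirm that all three options are set is to scan the file. The sketch below is an illustration only: `cephx_enabled` is a hypothetical helper, it checks a temporary demo file rather than a real /etc/ceph/ceph.conf, it ignores section boundaries, and it only recognises the underscore spelling of the option names used in this article.

```shell
# cephx_enabled FILE: succeed only if all three auth_* options are set to cephx.
cephx_enabled() {
    conf=$1
    for opt in auth_cluster_required auth_service_required auth_client_required; do
        # Take the last assignment of the option, trimming surrounding blanks
        val=$(awk -F= -v k="$opt" '
            { key = $1; gsub(/^[ \t]+|[ \t]+$/, "", key) }
            key == k { v = $2; gsub(/^[ \t]+|[ \t]+$/, "", v); last = v }
            END { print last }' "$conf")
        if [ "$val" != "cephx" ]; then
            echo "$opt is '${val:-unset}', expected cephx"
            return 1
        fi
    done
    echo "cephx required for cluster, service and client"
}

# Demo on a throwaway file that mirrors the fragment above
demo_conf=$(mktemp)
cat > "$demo_conf" <<'EOF'
[global]
auth_cluster_required = cephx
auth_service_required = cephx
auth_client_required = cephx
EOF
cephx_enabled "$demo_conf"   # prints: cephx required for cluster, service and client
rm -f "$demo_conf"
```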

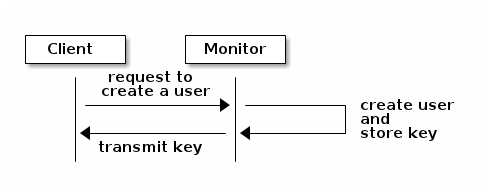

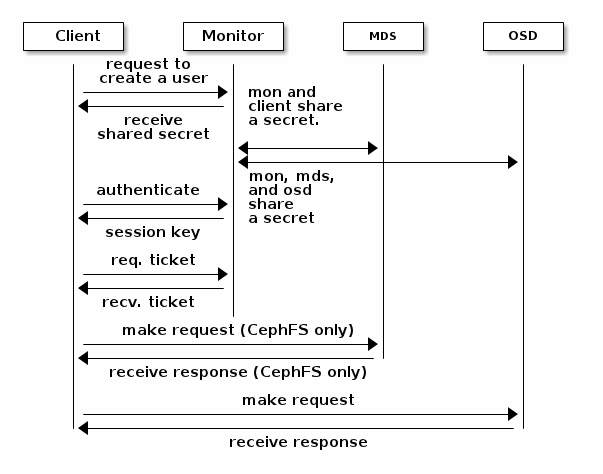

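CephX is essentially a symmetric-key protocol that uses AES. It identifies users and authenticates the operations they perform from a client, which guards against man-in-the-middle attacks, data tampering, and similar network threats. CephX requires a user to exist first. When we create a user with the commands above, the MON returns the user's key to the client and keeps a copy for itself, so the client and the MON now share one key. CephX authenticates with this shared key: both the client and the MON cluster hold a copy, which allows mutual authentication. The MON cluster confirms that the client holds the user's key, and the client confirms that the MON cluster holds a copy of it.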
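The idea of mutual authentication with a shared key can be shown with a toy challenge-response exchange. This is explicitly not the CephX wire protocol, which uses AES-encrypted session keys and tickets; it only illustrates how each side can prove it holds the key without sending it. `proof` is a hypothetical helper, the nonces are fixed strings for readability, and the sketch assumes the coreutils `sha256sum` tool is available.

```shell
# proof KEY NONCE: a digest that only a holder of KEY can compute for NONCE.
# Toy construction for illustration; real protocols use a proper MAC or cipher.
proof() {
    printf '%s:%s' "$1" "$2" | sha256sum | cut -d' ' -f1
}

mon_key='AQB3175c7fuQEBAAMIyROU5o2qrwEghuPwo68g=='      # copy kept by the MON
client_key='AQB3175c7fuQEBAAMIyROU5o2qrwEghuPwo68g=='   # copy in the client's keyring

# 1. The client challenges the MON and checks the answer against its own copy.
client_nonce='nonce-from-client'    # a real exchange would use a fresh random value
mon_answer=$(proof "$mon_key" "$client_nonce")
if [ "$mon_answer" = "$(proof "$client_key" "$client_nonce")" ]; then
    echo "client: MON holds the key"
fi

# 2. The MON challenges the client in the same way.
mon_nonce='nonce-from-mon'
client_answer=$(proof "$client_key" "$mon_nonce")
if [ "$client_answer" = "$(proof "$mon_key" "$mon_nonce")" ]; then
    echo "MON: client holds the key"
fi
```

If either side held a different key, its answer would not match and the corresponding line would not be printed.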

How authentication works

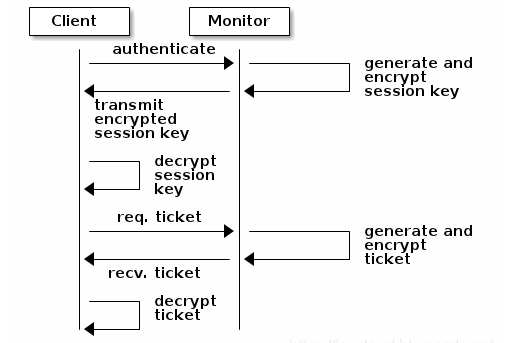

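- The user sends a request to a MON through a client.

- The client passes the user name to the MON.

- The MON looks up the user name. If the user exists, it generates a session key, encrypts it with the user's secret key, and returns it to the client.

- The client decrypts the session key with the shared secret key. Only a client that holds the same user's keyring file can do this.

- Holding the session key, the client sends another request to the MON.

- The MON generates a ticket, likewise encrypted with the user's secret key, and sends it to the client.

- The client decrypts the ticket with the shared secret key.

- From then on, the client presents the ticket when it sends requests to MONs and OSDs.

This is the benefit of shared-key authentication. The MONs, OSDs, and MDSs share a secret, so a ticket issued by a MON is accepted by the other daemons, and once a client has authenticated with a MON it can talk to any service in the cluster. It also means that whoever holds a user's keyring file can perform every operation that user is authorized for. When we run ceph -s, what actually runs is ceph -s --conf /etc/ceph/ceph.conf --name client.admin --keyring /etc/ceph/ceph.client.admin.keyring. The client looks for the admin user's keyring in the following default paths:

    /etc/ceph/ceph.client.admin.keyring
    /etc/ceph/ceph.keyring
    /etc/ceph/keyring
    /etc/ceph/keyring.bin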
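The lookup over the default paths listed above amounts to "first existing file wins". The sketch below reproduces that order; `find_keyring` is a hypothetical helper, and the extra ROOT argument is an assumption added so the demo can run against a scratch directory instead of the real /etc/ceph.

```shell
# find_keyring ROOT NAME: print the first existing keyring under ROOT,
# trying the four default locations in the order listed above.
find_keyring() {
    root=$1; name=$2
    for path in \
        "$root/etc/ceph/ceph.$name.keyring" \
        "$root/etc/ceph/ceph.keyring" \
        "$root/etc/ceph/keyring" \
        "$root/etc/ceph/keyring.bin"
    do
        if [ -f "$path" ]; then
            printf '%s\n' "$path"
            return 0
        fi
    done
    return 1
}

# Demo in a scratch directory: the user-specific file takes precedence
scratch=$(mktemp -d)
mkdir -p "$scratch/etc/ceph"
touch "$scratch/etc/ceph/keyring" "$scratch/etc/ceph/ceph.client.admin.keyring"
find_keyring "$scratch" client.admin   # prints the path ending in ceph.client.admin.keyring
rm -rf "$scratch"
```

On a real client the call would be `find_keyring "" client.admin`, which checks /etc/ceph directly.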

Managing keyrings with ceph-authtool

NOTE: A .keyring file carries a Ceph user. A client that holds the keyring file can perform client operations as the corresponding user.

Initial keyring files for MON, OSD, and the admin client:

- /var/lib/ceph/mon/ceph-$hostname/keyring

- /var/lib/ceph/osd/ceph-$id/keyring

- /etc/ceph/ceph.client.admin.keyring

Run cluster operations with an explicitly specified keyring (admin user):

    ceph -n client.admin --keyring=/etc/ceph/ceph.client.admin.keyring health
    rbd create -p rbd volume01 --size 1G --image-feature layering --conf /etc/ceph/ceph.conf --name client.admin --keyring /etc/ceph/ceph.client.admin.keyring

Show a user's key:

    ceph auth print-key {TYPE}.{ID}

Write a user's key into a keyring file:

    ceph auth get client.admin -o /etc/ceph/ceph.client.admin.keyring

Create a user and generate a keyring file:

    # Add client.user1 and its caps to an existing keyring file
    ceph-authtool -n client.user1 --cap osd 'allow rwx' --cap mon 'allow rwx' /etc/ceph/ceph.keyring
    # Or create the keyring file and generate a key in one step (-C overwrites an existing file)
    ceph-authtool -C /etc/ceph/ceph.keyring -n client.user1 --cap osd 'allow rwx' --cap mon 'allow rwx' --gen-key

Modify a user's capabilities in a keyring file:

    ceph-authtool /etc/ceph/ceph.keyring -n client.user1 --cap osd 'allow rwx' --cap mon 'allow rwx'

Import a user from a keyring file:

    ceph auth import -i /path/to/keyring

Export a user to a keyring file:

    ceph auth get client.admin -o /etc/ceph/ceph.client.admin.keyring

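Enough preamble. Here is what I did for this exercise:

1. Creating an RBD image and CephFS, and mounting them as an ordinary user

1.1 Create the rbd-data pool and the rbdtest1.img image: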

    root@node01:~# ceph df
    --- RAW STORAGE ---
    CLASS  SIZE     AVAIL    USED    RAW USED  %RAW USED
    hdd    240 GiB  240 GiB  41 MiB  41 MiB    0.02
    TOTAL  240 GiB  240 GiB  41 MiB  41 MiB    0.02

    --- POOLS ---
    POOL                   ID  PGS  STORED   OBJECTS  USED    %USED  MAX AVAIL
    device_health_metrics  1   1    0 B      0        0 B     0      76 GiB
    mypool1                2   32   3.1 KiB  3        21 KiB  0      76 GiB
    root@node01:~#
    root@node01:~# ceph osd pool create rbd-data 32 32
    pool 'rbd-data' created
    root@node02:~# ceph osd lspools
    1 device_health_metrics
    2 mypool1
    3 rbd-data
    root@node02:~#
    root@node01:~# ceph osd pool ls
    device_health_metrics
    mypool1
    rbd-data
    root@node01:~# ceph osd pool application enable rbd-data rbd
    enabled application 'rbd' on pool 'rbd-data'
    root@node01:~# rb
    rbash  rbd  rbdmap  rbd-replay  rbd-replay-many  rbd-replay-prep
    root@node01:~# rbd pool init -p rbd-data
    root@node01:~# rbd create rbdtest1.img --pool rbd-data --size 1G
    root@node01:~# rbd ls --pool rbd-data
    rbdtest1.img
    root@node01:~# rbd info rbd-data/rbdtest1.img
    rbd image 'rbdtest1.img':
        size 1 GiB in 256 objects
        order 22 (4 MiB objects)
        snapshot_count: 0
        id: fb9a70e1dad6
        block_name_prefix: rbd_data.fb9a70e1dad6
        format: 2
        features: layering, exclusive-lock, object-map, fast-diff, deep-flatten
        op_features:
        flags:
        create_timestamp: Tue Aug 24 20:17:40 2021
        access_timestamp: Tue Aug 24 20:17:40 2021
        modify_timestamp: Tue Aug 24 20:17:40 2021
    root@node01:~# rbd feature disable rbd-data/rbdtest1.img exclusive-lock object-map fast-diff deep-flatten
    root@node01:~# rbd info rbd-data/rbdtest1.img
    rbd image 'rbdtest1.img':
        size 1 GiB in 256 objects
        order 22 (4 MiB objects)
        snapshot_count: 0
        id: fb9a70e1dad6
        block_name_prefix: rbd_data.fb9a70e1dad6
        format: 2
        features: layering
        op_features:
        flags:
        create_timestamp: Tue Aug 24 20:17:40 2021
        access_timestamp: Tue Aug 24 20:17:40 2021
        modify_timestamp: Tue Aug 24 20:17:40 2021

    root@node01:~#

The key commands for this step:

    root@node01:~# ceph osd pool create rbd-data 32 32
    root@node01:~# ceph osd pool application enable rbd-data rbd
    root@node01:~# rbd pool init -p rbd-data
    root@node01:~# rbd create rbdtest1.img --pool rbd-data --size 1G
    root@node01:~# rbd ls --pool rbd-data
    root@node01:~# rbd info rbd-data/rbdtest1.img
    root@node01:~# rbd feature disable rbd-data/rbdtest1.img exclusive-lock object-map fast-diff deep-flatten
    root@node01:~# rbd info rbd-data/rbdtest1.img
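The "size 1 GiB in 256 objects, order 22 (4 MiB objects)" line reported by rbd info follows from simple arithmetic: the order is the base-2 logarithm of the object size, and the image is split into objects of that size. A small sketch, where `rbd_objects` is a hypothetical helper name:

```shell
# rbd_objects SIZE_BYTES ORDER: number of objects of 2^ORDER bytes
# needed to hold an image of SIZE_BYTES, rounded up.
rbd_objects() {
    size=$1; order=$2
    obj=$(( 1 << order ))                 # order 22 -> 4194304 bytes = 4 MiB
    echo $(( (size + obj - 1) / obj ))
}

rbd_objects $(( 1024 * 1024 * 1024 )) 22   # 1 GiB image, order 22; prints: 256
```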

1.2 Creating and deleting accounts such as tom and jerry:

    root@node01:~# ceph auth add client.tom mon 'allow r' osd 'allow rwx pool=rbd-date'
    added key for client.tom
    root@node01:~# ceph auth get client.tom
    [client.tom]
        key = AQAQ5SRhNPftJBAA3lKYmTsgyeA1OQOVo2AwZQ==
        caps mon = "allow r"
        caps osd = "allow rwx pool=rbd-date"
    exported keyring for client.tom
    root@node01:~#
    root@node01:~#
    root@node01:~# ceph auth get
    get  get-key  get-or-create  get-or-create-key
    root@node01:~# ceph auth get-or-create client.jerry mon 'allow r' osd 'allow rwx pool=rbd-data'
    [client.jerry]
        key = AQBb5SRhY64XORAAHS+d0M/q8UixCa013knHQw==
    root@node01:~#
    root@node01:~# ceph auth get client.jerry
    [client.jerry]
        key = AQBb5SRhY64XORAAHS+d0M/q8UixCa013knHQw==
        caps mon = "allow r"
        caps osd = "allow rwx pool=rbd-data"
    exported keyring for client.jerry
    root@node01:~#
    root@node01:~# ceph auth get-or-create-key client.jerry
    AQBb5SRhY64XORAAHS+d0M/q8UixCa013knHQw==
    root@node01:~# ceph auth get-or-create-key client.jerry mon 'allow r' osd 'allow rwx pool=rbd-data'
    AQBb5SRhY64XORAAHS+d0M/q8UixCa013knHQw==
    root@node01:~# ceph auth get-or-create-key client.jerry
    AQBb5SRhY64XORAAHS+d0M/q8UixCa013knHQw==
    root@node01:~#
    root@node01:~# ceph auth print-key client.jerry
    AQBb5SRhY64XORAAHS+d0M/q8UixCa013knHQw==root@node01:~#
    root@node01:~#
    root@node01:~#
    root@node01:~# ceph auth get client.jerry
    [client.jerry]
        key = AQBb5SRhY64XORAAHS+d0M/q8UixCa013knHQw==
        caps mon = "allow r"
        caps osd = "allow rwx pool=rbd-data"
    exported keyring for client.jerry
    root@node01:~# ceph auth caps client.jerry mon 'allow rw' osd 'allow r pool=rbd-data'
    updated caps for client.jerry
    root@node01:~# ceph auth get client.jerry
    [client.jerry]
        key = AQBb5SRhY64XORAAHS+d0M/q8UixCa013knHQw==
        caps mon = "allow rw"
        caps osd = "allow r pool=rbd-data"
    exported keyring for client.jerry
    root@node01:~# ceph auth del client.jerry
    updated
    root@node01:~# ceph auth get client.jerry
    Error ENOENT: failed to find client.jerry in keyring
    root@node01:~#

The key commands for this step (note that the pool name for client.tom was mistyped as rbd-date in the recorded session; the intended pool is rbd-data):

    root@node01:~# ceph auth add client.tom mon 'allow r' osd 'allow rwx pool=rbd-date'
    root@node01:~# ceph auth get client.tom
    root@node01:~# ceph auth get-or-create client.jerry mon 'allow r' osd 'allow rwx pool=rbd-data'
    root@node01:~# ceph auth get-or-create-key client.jerry
    root@node01:~# ceph auth get-or-create-key client.jerry mon 'allow r' osd 'allow rwx pool=rbd-data'
    root@node01:~# ceph auth print-key client.jerry
    root@node01:~# ceph auth caps client.jerry mon 'allow rw' osd 'allow r pool=rbd-data'
    root@node01:~# ceph auth del client.jerry
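The session above accepted `pool=rbd-date` without complaint, even though no pool of that name exists. A cap string can be checked against the pool list before it is applied. The sketch below is one possible way to do that: `caps_pool` and `pool_exists` are hypothetical helpers, and the pool list is hard-coded from the `ceph osd pool ls` output shown earlier rather than queried from a live cluster.

```shell
# caps_pool CAPS: print the pool named in a cap string, if any.
caps_pool() {
    set -f                            # do not expand a literal "*" in the caps
    for word in $1; do
        case "$word" in
            pool=*) printf '%s\n' "${word#pool=}" ;;
        esac
    done
    set +f
}

# pool_exists POOL LIST: succeed if POOL is one of the newline-separated names in LIST.
pool_exists() {
    printf '%s\n' "$2" | grep -Fxq -- "$1"
}

# Pool names copied from the `ceph osd pool ls` output in this article
pools='device_health_metrics
mypool1
rbd-data'

for caps in 'allow rwx pool=rbd-date' 'allow rwx pool=rbd-data'; do
    p=$(caps_pool "$caps")
    if pool_exists "$p" "$pools"; then
        echo "ok: pool '$p' exists"
    else
        echo "warning: pool '$p' not found"
    fi
done
# prints:
#   warning: pool 'rbd-date' not found
#   ok: pool 'rbd-data' exists
```

Against a live cluster, the `pools` variable could instead be filled with `pools=$(ceph osd pool ls)`.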

1.3 Backing up and restoring a user with a keyring file

    root@node01:~# ceph auth get-or-create client.user1 mon 'allow r' osd 'allow rwx pool=rbd-data'
    [client.user1]
        key = AQDR5yRhiTkqJhAAY5ZmSnVKf/1/BGr/q0OTaQ==
    root@node01:~# ceph auth get client.user1
    [client.user1]
        key = AQDR5yRhiTkqJhAAY5ZmSnVKf/1/BGr/q0OTaQ==
        caps mon = "allow r"
        caps osd = "allow rwx pool=rbd-data"
    exported keyring for client.user1
    root@node01:~# ls
    ceph-deploy  cluster  snap
    root@node01:~# ceph-authtool --help
    usage: ceph-authtool keyringfile [OPTIONS]...
    where the options are:
      -l, --list                    will list all keys and capabilities present in
                                    the keyring
      -p, --print-key               will print an encoded key for the specified
                                    entityname. This is suitable for the
                                    'mount -o secret=..' argument
      -C, --create-keyring          will create a new keyring, overwriting any
                                    existing keyringfile
      -g, --gen-key                 will generate a new secret key for the
                                    specified entityname
      --gen-print-key               will generate a new secret key without set it
                                    to the keyringfile, prints the secret to stdout
      --import-keyring FILE         will import the content of a given keyring
                                    into the keyringfile
      -n NAME, --name NAME          specify entityname to operate on
      -a BASE64, --add-key BASE64   will add an encoded key to the keyring
      --cap SUBSYSTEM CAPABILITY    will set the capability for given subsystem
      --caps CAPSFILE               will set all of capabilities associated with a
                                    given key, for all subsystems
      --mode MODE                   will set the desired file mode to the keyring
                                    e.g: '0644', defaults to '0600'
    root@node01:~# ceph-authtool -C ceph.client.user1.keyring
    creating ceph.client.user1.keyring
    root@node01:~# cat ceph.client.user1.keyring
    root@node01:~# file ceph.client.user1.keyring
    ceph.client.user1.keyring: empty
    root@node01:~#
    root@node01:~# ceph auth get client.user1
    [client.user1]
        key = AQDR5yRhiTkqJhAAY5ZmSnVKf/1/BGr/q0OTaQ==
        caps mon = "allow r"
        caps osd = "allow rwx pool=rbd-data"
    exported keyring for client.user1
    root@node01:~# ceph auth get client.user1 -o ceph.client.user1.keyring
    exported keyring for client.user1
    root@node01:~# cat ceph.client.user1.keyring
    [client.user1]
        key = AQDR5yRhiTkqJhAAY5ZmSnVKf/1/BGr/q0OTaQ==
        caps mon = "allow r"
        caps osd = "allow rwx pool=rbd-data"
    root@node01:~#

Restore the user's authentication information from the keyring file:

    root@node01:~#
    root@node01:~# ceph auth del client.user1
    updated
    root@node01:~# ceph auth get client.user1
    Error ENOENT: failed to find client.user1 in keyring
    root@node01:~#
    root@node01:~# ceph auth import -i ceph.client.user1.keyring
    imported keyring
    root@node01:~#
    root@node01:~# ceph auth get client.user1
    [client.user1]
        key = AQDR5yRhiTkqJhAAY5ZmSnVKf/1/BGr/q0OTaQ==
        caps mon = "allow r"
        caps osd = "allow rwx pool=rbd-data"
    exported keyring for client.user1
    root@node01:~#

The key commands for this step:

    root@node01:~# ceph auth get-or-create client.user1 mon 'allow r' osd 'allow rwx pool=rbd-data'
    root@node01:~# ceph auth get client.user1
    root@node01:~# ceph-authtool -C ceph.client.user1.keyring
    root@node01:~# ceph auth get client.user1 -o ceph.client.user1.keyring

Restore the user's authentication information from the keyring file:

    root@node01:~# ceph auth del client.user1
    root@node01:~# ceph auth get client.user1
    root@node01:~# ceph auth import -i ceph.client.user1.keyring
    root@node01:~# ceph auth get client.user1
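The backup and restore steps can be wrapped in two small functions. This is only a sketch: `backup_user` and `restore_user` are hypothetical names, the `ceph.ENTITY.keyring` file naming follows the session above, and the `CEPH` variable is an assumption introduced so the commands can be printed in a dry run instead of being sent to a cluster.

```shell
CEPH=${CEPH:-ceph}      # command prefix; override for a dry run

# backup_user ENTITY DIR: export the user's key and caps to DIR/ceph.ENTITY.keyring
backup_user() {
    $CEPH auth get "$1" -o "$2/ceph.$1.keyring"
}

# restore_user ENTITY DIR: re-create the user from DIR/ceph.ENTITY.keyring
restore_user() {
    $CEPH auth import -i "$2/ceph.$1.keyring"
}

# Dry run: print the commands instead of running them
CEPH='echo ceph'
backup_user client.user1 /root    # prints: ceph auth get client.user1 -o /root/ceph.client.user1.keyring
restore_user client.user1 /root   # prints: ceph auth import -i /root/ceph.client.user1.keyring
```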

One more thing: multiple accounts in a single keyring file:

    root@node01:~# ceph-authtool -C ceph.client.tom.keyring
    creating ceph.client.tom.keyring
    root@node01:~# ceph auth get client.tom -o ceph.client.tom.keyring
    exported keyring for client.tom
    root@node01:~# cat ceph.client.tom.keyring
    [client.tom]
        key = AQAQ5SRhNPftJBAA3lKYmTsgyeA1OQOVo2AwZQ==
        caps mon = "allow r"
        caps osd = "allow rwx pool=rbd-date"
    root@node01:~# ceph-authtool -l ceph.client.tom.keyring
    [client.tom]
        key = AQAQ5SRhNPftJBAA3lKYmTsgyeA1OQOVo2AwZQ==
        caps mon = "allow r"
        caps osd = "allow rwx pool=rbd-date"
    root@node01:~#
    root@node01:~# ceph-authtool ceph.client.user1.keyring --import-keyring ceph.client.tom.keyring
    importing contents of ceph.client.tom.keyring into ceph.client.user1.keyring
    root@node01:~# echo $?
    0
    root@node01:~# cat ceph.client.tom.keyring
    [client.tom]
        key = AQAQ5SRhNPftJBAA3lKYmTsgyeA1OQOVo2AwZQ==
        caps mon = "allow r"
        caps osd = "allow rwx pool=rbd-date"
    root@node01:~#
    root@node01:~# cat ceph.client.user1.keyring
    [client.tom]
        key = AQAQ5SRhNPftJBAA3lKYmTsgyeA1OQOVo2AwZQ==
        caps mon = "allow r"
        caps osd = "allow rwx pool=rbd-date"
    [client.user1]
        key = AQDR5yRhiTkqJhAAY5ZmSnVKf/1/BGr/q0OTaQ==
        caps mon = "allow r"
        caps osd = "allow rwx pool=rbd-data"
    root@node01:~#
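Because a keyring is a plain INI-style file, a merged keyring like the one above can be inspected with standard text tools. The sketch below lists the entities in a keyring and extracts one user's key; `keyring_entities` and `keyring_key` are hypothetical helpers, and the demo file simply reproduces the merged keyring shown in the session.

```shell
# keyring_entities FILE: print every [entity] section name in a keyring file.
keyring_entities() {
    sed -n 's/^\[\(.*\)\]$/\1/p' "$1"
}

# keyring_key FILE ENTITY: print the key stored for one entity.
keyring_key() {
    awk -v want="[$2]" '
        /^\[/ { in_section = ($0 == want) }          # track which section we are in
        in_section && $1 == "key" { print $3 }       # line looks like: key = VALUE
    ' "$1"
}

# Demo file with the same content as the merged keyring above
demo=$(mktemp)
cat > "$demo" <<'EOF'
[client.tom]
	key = AQAQ5SRhNPftJBAA3lKYmTsgyeA1OQOVo2AwZQ==
	caps mon = "allow r"
	caps osd = "allow rwx pool=rbd-date"
[client.user1]
	key = AQDR5yRhiTkqJhAAY5ZmSnVKf/1/BGr/q0OTaQ==
	caps mon = "allow r"
	caps osd = "allow rwx pool=rbd-data"
EOF

keyring_entities "$demo"            # prints client.tom and client.user1, one per line
keyring_key "$demo" client.user1    # prints: AQDR5yRhiTkqJhAAY5ZmSnVKf/1/BGr/q0OTaQ==
rm -f "$demo"
```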

1.4 Mounting RBD as an ordinary user:

On the server, copy the configuration file and keyring to the client:

    root@node01:~# ls
    ceph.client.tom.keyring  ceph.client.user1.keyring  ceph-deploy  client.client.user1.keyring  client.user1.keyring  cluster  snap
    root@node01:~# scp ceph.client.user1.keyring ceph-deploy/ceph.conf root@node04:/etc/ceph/
    root@node04's password:
    ceph.client.user1.keyring                100%  244   263.4KB/s   00:00
    ceph.conf                                100%  265    50.5KB/s   00:00
    root@node01:~#

On the client:

    root@node04:/etc/netplan# apt install ceph-common
    Reading package lists... Done
    Building dependency tree
    Reading state information... Done
    The following additional packages will be installed:
      ibverbs-providers libbabeltrace1 libcephfs2 libdw1 libgoogle-perftools4 libibverbs1 libjaeger libleveldb1d liblttng-ust-ctl4 liblttng-ust0 liblua5.3-0 libnl-route-3-200 liboath0 librabbitmq4 librados2
      libradosstriper1 librbd1 librdkafka1 librdmacm1 librgw2 libsnappy1v5 libtcmalloc-minimal4 python3-ceph-argparse python3-ceph-common python3-cephfs python3-prettytable python3-rados python3-rbd python3-rgw
    Suggested packages:
      ceph-base ceph-mds
    The following NEW packages will be installed:
      ceph-common ibverbs-providers libbabeltrace1 libcephfs2 libdw1 libgoogle-perftools4 libibverbs1 libjaeger libleveldb1d liblttng-ust-ctl4 liblttng-ust0 liblua5.3-0 libnl-route-3-200 liboath0 librabbitmq4
      librados2 libradosstriper1 librbd1 librdkafka1 librdmacm1 librgw2 libsnappy1v5 libtcmalloc-minimal4 python3-ceph-argparse python3-ceph-common python3-cephfs python3-prettytable python3-rados python3-rbd
      python3-rgw
    0 upgraded, 30 newly installed, 0 to remove and 79 not upgraded.
    Need to get 37.3 MB of archives.
    After this operation, 152 MB of additional disk space will be used.
    Do you want to continue? [Y/n] y
    Get:1 https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific focal/main amd64 libjaeger amd64 16.2.5-1focal [3,780 B]

    (output trimmed here for length)

    Setting up ceph-common (16.2.5-1focal) ...
    Adding group ceph....done
    Adding system user ceph....done
    Setting system user ceph properties....done
    chown: cannot access '/var/log/ceph/*.log*': No such file or directory
    Created symlink /etc/systemd/system/multi-user.target.wants/ceph.target → /lib/systemd/system/ceph.target.
    Created symlink /etc/systemd/system/multi-user.target.wants/rbdmap.service → /lib/systemd/system/rbdmap.service.
    Processing triggers for man-db (2.9.1-1) ...
    Processing triggers for libc-bin (2.31-0ubuntu9.2) ...
    root@node04:/etc/netplan# ls /etc/ceph/
    rbdmap
    root@node04:/etc/netplan# ls /etc/ceph/
    ceph.client.user1.keyring  ceph.conf  rbdmap
    root@node04:/etc/netplan# cd /etc/ceph/
    root@node04:/etc/ceph# ll
    total 20
    drwxr-xr-x  2 root root 4096 Aug 24 21:10 ./
    drwxr-xr-x 96 root root 4096 Aug 24 21:09 ../
    -rw-------  1 root root  244 Aug 24 21:10 ceph.client.user1.keyring
    -rw-r--r--  1 root root  265 Aug 24 21:10 ceph.conf
    -rw-r--r--  1 root root   92 Jul  8 22:16 rbdmap
    root@node04:/etc/ceph# ceph --user user1 -s
      cluster:
        id:     9138c3cf-f529-4be6-ba84-97fcab59844b
        health: HEALTH_WARN
                1 slow ops, oldest one blocked for 4225 sec, mon.node02 has slow ops

      services:
        mon: 3 daemons, quorum node01,node02,node03 (age 70m)
        mgr: node01(active, since 70m), standbys: node02, node03
        osd: 6 osds: 6 up (since 70m), 6 in (since 7d)

      data:
        pools:   3 pools, 65 pgs
        objects: 7 objects, 54 B
        usage:   44 MiB used, 240 GiB / 240 GiB avail
        pgs:     65 active+clean

    root@node04:/etc/ceph#
    root@node04:/etc/ceph#
    root@node04:/etc/ceph# rbd --user user1 -p rbd-data map rbdtest1.img
    /dev/rbd0
    rbd: --user is deprecated, use --id
    root@node04:/etc/ceph# lsblk
    NAME                        MAJ:MIN RM  SIZE RO TYPE MOUNTPOINT
    loop0                         7:0    0 55.4M  1 loop /snap/core18/2128
    loop1                         7:1    0 55.4M  1 loop /snap/core18/1944
    loop2                         7:2    0 31.1M  1 loop /snap/snapd/10707
    loop3                         7:3    0 69.9M  1 loop /snap/lxd/19188
    loop4                         7:4    0 32.3M  1 loop /snap/snapd/12704
    loop5                         7:5    0 70.3M  1 loop /snap/lxd/21029
    sda                           8:0    0   40G  0 disk
    ├─sda1                        8:1    0    1M  0 part
    ├─sda2                        8:2    0    1G  0 part /boot
    └─sda3                        8:3    0   39G  0 part
      └─ubuntu--vg-ubuntu--lv   253:0    0   20G  0 lvm  /
    sr0                          11:0    1  1.1G  0 rom
    rbd0                        252:0    0    1G  0 disk
    root@node04:/etc/ceph#
    root@node04:/etc/ceph# rbd --id user1 -p rbd-data map rbdtest1.img
    rbd: warning: image already mapped as /dev/rbd0
    /dev/rbd1
    root@node04:/etc/ceph# rbd --id user1 unmap rbd-data/rbdtest1.img
    rbd: rbd-data/rbdtest1.img: mapped more than once, unmapping /dev/rbd0 only
    root@node04:/etc/ceph# lsblk
    NAME                        MAJ:MIN RM  SIZE RO TYPE MOUNTPOINT
    loop0                         7:0    0 55.4M  1 loop /snap/core18/2128
    loop1                         7:1    0 55.4M  1 loop /snap/core18/1944
    loop2                         7:2    0 31.1M  1 loop /snap/snapd/10707
    loop3                         7:3    0 69.9M  1 loop /snap/lxd/19188
    loop4                         7:4    0 32.3M  1 loop /snap/snapd/12704
    loop5                         7:5    0 70.3M  1 loop /snap/lxd/21029
    sda                           8:0    0   40G  0 disk
    ├─sda1                        8:1    0    1M  0 part
    ├─sda2                        8:2    0    1G  0 part /boot
    └─sda3                        8:3    0   39G  0 part
      └─ubuntu--vg-ubuntu--lv   253:0    0   20G  0 lvm  /
    sr0                          11:0    1  1.1G  0 rom
    rbd1                        252:16   0    1G  0 disk
    root@node04:/etc/ceph# rbd --id user1 unmap rbd-data/rbdtest1.img
    root@node04:/etc/ceph# lsblk
    NAME                        MAJ:MIN RM  SIZE RO TYPE MOUNTPOINT
    loop0                         7:0    0 55.4M  1 loop /snap/core18/2128
    loop1                         7:1    0 55.4M  1 loop /snap/core18/1944
    loop2                         7:2    0 31.1M  1 loop /snap/snapd/10707
    loop3                         7:3    0 69.9M  1 loop /snap/lxd/19188
    loop4                         7:4    0 32.3M  1 loop /snap/snapd/12704
    loop5                         7:5    0 70.3M  1 loop /snap/lxd/21029
    sda                           8:0    0   40G  0 disk
    ├─sda1                        8:1    0    1M  0 part
    ├─sda2                        8:2    0    1G  0 part /boot
    └─sda3                        8:3    0   39G  0 part
      └─ubuntu--vg-ubuntu--lv   253:0    0   20G  0 lvm  /
    sr0                          11:0    1  1.1G  0 rom
    root@node04:/etc/ceph#
    root@node04:/etc/ceph#
    root@node04:/etc/ceph# rbd --id user1 -p rbd-data map rbdtest1.img
    /dev/rbd0
    root@node04:/etc/ceph# lsblk
    NAME                        MAJ:MIN RM  SIZE RO TYPE MOUNTPOINT
    loop0                         7:0    0 55.4M  1 loop /snap/core18/2128
    loop1                         7:1    0 55.4M  1 loop /snap/core18/1944
    loop2                         7:2    0 31.1M  1 loop /snap/snapd/10707
    loop3                         7:3    0 69.9M  1 loop /snap/lxd/19188
    loop4                         7:4    0 32.3M  1 loop /snap/snapd/12704
    loop5                         7:5    0 70.3M  1 loop /snap/lxd/21029
    sda                           8:0    0   40G  0 disk
    ├─sda1                        8:1    0    1M  0 part
    ├─sda2                        8:2    0    1G  0 part /boot
    └─sda3                        8:3    0   39G  0 part
      └─ubuntu--vg-ubuntu--lv   253:0    0   20G  0 lvm  /
    sr0                          11:0    1  1.1G  0 rom
    rbd0                        252:0    0    1G  0 disk
    root@node04:/etc/ceph#
    root@node04:/etc/ceph# mkfs.xfs /dev/rbd0
    meta-data=/dev/rbd0              isize=512    agcount=8, agsize=32768 blks
             =                       sectsz=512   attr=2, projid32bit=1
             =                       crc=1        finobt=1, sparse=1, rmapbt=0
             =                       reflink=1
    data     =                       bsize=4096   blocks=262144, imaxpct=25
             =                       sunit=16     swidth=16 blks
    naming   =version 2              bsize=4096   ascii-ci=0, ftype=1
    log      =internal log           bsize=4096   blocks=2560, version=2
             =                       sectsz=512   sunit=16 blks, lazy-count=1
    realtime =none                   extsz=4096   blocks=0, rtextents=0
    root@node04:/etc/ceph# mount /dev/rbd0 /mnt/
    root@node04:/etc/ceph# cp /etc/passwd /mnt/
    root@node04:/etc/ceph# cd /mnt/
    root@node04:/mnt# ls
    passwd
    root@node04:/mnt# tail passwd
    uuidd:x:107:112::/run/uuidd:/usr/sbin/nologin
    tcpdump:x:108:113::/nonexistent:/usr/sbin/nologin
    landscape:x:109:115::/var/lib/landscape:/usr/sbin/nologin
    pollinate:x:110:1::/var/cache/pollinate:/bin/false
    usbmux:x:111:46:usbmux daemon,,,:/var/lib/usbmux:/usr/sbin/nologin
    sshd:x:112:65534::/run/sshd:/usr/sbin/nologin
    systemd-coredump:x:999:999:systemd Core Dumper:/:/usr/sbin/nologin
    vmuser:x:1000:1000:vmuser:/home/vmuser:/bin/bash
    lxd:x:998:100::/var/snap/lxd/common/lxd:/bin/false
    ceph:x:64045:64045:Ceph storage service:/var/lib/ceph:/usr/sbin/nologin
    root@node04:/mnt#

The key commands for this step:

On the server, copy the configuration file and keyring to the client:

    root@node01:~# scp ceph.client.user1.keyring ceph-deploy/ceph.conf root@node04:/etc/ceph/

On the client (the --user option of rbd is deprecated; prefer the --id form):

    root@node04:/etc/netplan# apt install ceph-common
    root@node04:/etc/netplan# ls /etc/ceph/
    root@node04:/etc/ceph# ceph --user user1 -s
    root@node04:/etc/ceph# rbd --user user1 -p rbd-data map rbdtest1.img
    root@node04:/etc/ceph# rbd --id user1 -p rbd-data map rbdtest1.img
    root@node04:/etc/ceph# mkfs.xfs /dev/rbd0
    root@node04:/etc/ceph# mount /dev/rbd0 /mnt/

1.5、普通用户挂载CephFS情况:

1 root@node01:~/ceph-deploy# ceph -s 2 cluster: 3 id: 9138c3cf-f529-4be6-ba84-97fcab59844b 4 health: HEALTH_WARN 5 1 slow ops, oldest one blocked for 4986 sec, mon.node02 has slow ops 6 7 services: 8 mon: 3 daemons, quorum node01,node02,node03 (age 83m) 9 mgr: node01(active, since 83m), standbys: node02, node03 10 osd: 6 osds: 6 up (since 83m), 6 in (since 7d) 11 12 data: 13 pools: 3 pools, 65 pgs 14 objects: 18 objects, 14 MiB 15 usage: 132 MiB used, 240 GiB / 240 GiB avail 16 pgs: 65 active+clean 17 18 root@node01:~/ceph-deploy# ceph osd lspools 19 1 device_health_metrics 20 2 mypool1 21 3 rbd-data 22 root@node01:~/ceph-deploy# 23 root@node01:~/ceph-deploy# 24 root@node01:~/ceph-deploy# ceph osd pool create cephfs-metadata 16 16 25 pool 'cephfs-metadata' created 26 root@node01:~/ceph-deploy# ceph osd pool create cephfs-data 32 32 27 pool 'cephfs-data' created 28 root@node01:~/ceph-deploy# 29 root@node01:~/ceph-deploy# ceph -s 30 cluster: 31 id: 9138c3cf-f529-4be6-ba84-97fcab59844b 32 health: HEALTH_WARN 33 1 slow ops, oldest one blocked for 5126 sec, mon.node02 has slow ops 34 35 services: 36 mon: 3 daemons, quorum node01,node02,node03 (age 85m) 37 mgr: node01(active, since 85m), standbys: node02, node03 38 osd: 6 osds: 6 up (since 85m), 6 in (since 7d) 39 40 data: 41 pools: 5 pools, 113 pgs 42 objects: 18 objects, 14 MiB 43 usage: 137 MiB used, 240 GiB / 240 GiB avail 44 pgs: 113 active+clean 45 46 root@node01:~/ceph-deploy# 47 root@node01:~/ceph-deploy# ceph fs new mycephfs ceph-metadata cephfs-data 48 Error ENOENT: pool 'ceph-metadata' does not exist 49 root@node01:~/ceph-deploy# ceph fs new mycephfs cephfs-metadata cephfs-data 50 new fs with metadata pool 4 and data pool 5 51 root@node01:~/ceph-deploy# 52 root@node01:~/ceph-deploy# ceph -s 53 cluster: 54 id: 9138c3cf-f529-4be6-ba84-97fcab59844b 55 health: HEALTH_ERR 56 1 filesystem is offline 57 1 filesystem is online with fewer MDS than max_mds 58 1 slow ops, oldest one blocked for 5221 sec, mon.node02 has 
slow ops 59 60 services: 61 mon: 3 daemons, quorum node01,node02,node03 (age 87m) 62 mgr: node01(active, since 87m), standbys: node02, node03 63 mds: 0/0 daemons up 64 osd: 6 osds: 6 up (since 87m), 6 in (since 7d) 65 66 data: 67 volumes: 1/1 healthy 68 pools: 5 pools, 113 pgs 69 objects: 18 objects, 14 MiB 70 usage: 137 MiB used, 240 GiB / 240 GiB avail 71 pgs: 113 active+clean 72 73 root@node01:~/ceph-deploy# 74 root@node01:~/ceph-deploy# ceph mds stat 75 mycephfs:0 76 root@node01:~/ceph-deploy# ceph mds status mycephfs 77 no valid command found; 10 closest matches: 78 mds metadata [<who>] 79 mds count-metadata <property> 80 mds versions 81 mds compat show 82 mds ok-to-stop <ids>... 83 mds fail <role_or_gid> 84 mds repaired <role> 85 mds rm <gid:int> 86 mds compat rm_compat <feature:int> 87 mds compat rm_incompat <feature:int> 88 Error EINVAL: invalid command 89 root@node01:~/ceph-deploy# ceph fs status mycephfs 90 mycephfs - 0 clients 91 ======== 92 POOL TYPE USED AVAIL 93 cephfs-metadata metadata 0 75.9G 94 cephfs-data data 0 75.9G 95 root@node01:~/ceph-deploy# ceph -s 96 cluster: 97 id: 9138c3cf-f529-4be6-ba84-97fcab59844b 98 health: HEALTH_ERR 99 1 filesystem is offline 100 1 filesystem is online with fewer MDS than max_mds 101 1 slow ops, oldest one blocked for 5291 sec, mon.node02 has slow ops 102 103 services: 104 mon: 3 daemons, quorum node01,node02,node03 (age 88m) 105 mgr: node01(active, since 88m), standbys: node02, node03 106 mds: 0/0 daemons up 107 osd: 6 osds: 6 up (since 88m), 6 in (since 7d) 108 109 data: 110 volumes: 1/1 healthy 111 pools: 5 pools, 113 pgs 112 objects: 18 objects, 14 MiB 113 usage: 137 MiB used, 240 GiB / 240 GiB avail 114 pgs: 113 active+clean 115 116 root@node01:~/ceph-deploy# ceph mds stat 117 mycephfs:0 118 root@node01:~/ceph-deploy# ls 119 ceph.bootstrap-mds.keyring ceph.bootstrap-mgr.keyring ceph.bootstrap-osd.keyring ceph.bootstrap-rgw.keyring ceph.client.admin.keyring ceph.conf ceph-deploy-ceph.log ceph.mon.keyring 120 
    root@node01:~/ceph-deploy# ceph-deploy mds create node01
    [ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf
    [ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy mds create node01
    [ceph_deploy.cli][INFO ] ceph-deploy options:
    [ceph_deploy.cli][INFO ] username : None
    [ceph_deploy.cli][INFO ] verbose : False
    [ceph_deploy.cli][INFO ] overwrite_conf : False
    [ceph_deploy.cli][INFO ] subcommand : create
    [ceph_deploy.cli][INFO ] quiet : False
    [ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f9007af8a50>
    [ceph_deploy.cli][INFO ] cluster : ceph
    [ceph_deploy.cli][INFO ] func : <function mds at 0x7f9007acddd0>
    [ceph_deploy.cli][INFO ] ceph_conf : None
    [ceph_deploy.cli][INFO ] mds : [('node01', 'node01')]
    [ceph_deploy.cli][INFO ] default_release : False
    [ceph_deploy.mds][DEBUG ] Deploying mds, cluster ceph hosts node01:node01
    [node01][DEBUG ] connected to host: node01
    [node01][DEBUG ] detect platform information from remote host
    [node01][DEBUG ] detect machine type
    [ceph_deploy.mds][INFO ] Distro info: Ubuntu 20.04 focal
    [ceph_deploy.mds][DEBUG ] remote host will use systemd
    [ceph_deploy.mds][DEBUG ] deploying mds bootstrap to node01
    [node01][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf
    [node01][WARNIN] mds keyring does not exist yet, creating one
    [node01][DEBUG ] create a keyring file
    [node01][DEBUG ] create path if it doesn't exist
    [node01][INFO ] Running command: ceph --cluster ceph --name client.bootstrap-mds --keyring /var/lib/ceph/bootstrap-mds/ceph.keyring auth get-or-create mds.node01 osd allow rwx mds allow mon allow profile mds -o /var/lib/ceph/mds/ceph-node01/keyring
    [node01][INFO ] Running command: systemctl enable ceph-mds@node01
    [node01][WARNIN] Created symlink /etc/systemd/system/ceph-mds.target.wants/ceph-mds@node01.service → /lib/systemd/system/ceph-mds@.service.
    [node01][INFO ] Running command: systemctl start ceph-mds@node01
    [node01][INFO ] Running command: systemctl enable ceph.target
    root@node01:~/ceph-deploy# ceph mds stat
    mycephfs:1 {0=node01=up:active}
    root@node01:~/ceph-deploy# ceph auth add client.jack mon 'allow r' mds 'allow rw' osd 'allow rwx pool=cephfs-data'
    added key for client.jack
    root@node01:~/ceph-deploy# ceph auth get client.jack
    [client.jack]
        key = AQD29CRh1IjhChAAjYT5Ydmp/cVYuVfKeAaBfw==
        caps mds = "allow rw"
        caps mon = "allow r"
        caps osd = "allow rwx pool=cephfs-data"
    exported keyring for client.jack
    root@node01:~/ceph-deploy# ceph auth get client.admin
    [client.admin]
        key = AQBErhthY4YdIhAANKTOMAjkzpKkHSkXSoNpaQ==
        caps mds = "allow *"
        caps mgr = "allow *"
        caps mon = "allow *"
        caps osd = "allow *"
    exported keyring for client.admin
    root@node01:~/ceph-deploy# ceph auth get client.jack -o ceph.client.jack.keyring
    exported keyring for client.jack
    root@node01:~/ceph-deploy# cat ceph.client.jack.keyring
    [client.jack]
        key = AQD29CRh1IjhChAAjYT5Ydmp/cVYuVfKeAaBfw==
        caps mds = "allow rw"
        caps mon = "allow r"
        caps osd = "allow rwx pool=cephfs-data"
    root@node01:~/ceph-deploy# scp ceph.client.jack.keyring node04:/etc/ceph/
    root@node04's password:
    ceph.client.jack.keyring                      100%  148   118.7KB/s   00:00
    root@node01:~/ceph-deploy# ceph auth get-or-create-key cliet.jack
    Error EINVAL: bad entity name
    root@node01:~/ceph-deploy# ceph auth print-key cliet.jack
    Error EINVAL: invalid entity_auth cliet.jack
    root@node01:~/ceph-deploy# ceph auth print-key client.jack
    AQD29CRh1IjhChAAjYT5Ydmp/cVYuVfKeAaBfw==root@node01:~/ceph-deploy# ceph auth print-key client.jack > jack.key
    root@node01:~/ceph-deploy# scp jack.key node04:/etc/ceph/
    root@node04's password:
    jack.key                                      100%   40    26.3KB/s   00:00

    root@node04:~# ceph --user jack -s
      cluster:
        id:     9138c3cf-f529-4be6-ba84-97fcab59844b
        health: HEALTH_WARN
                1 slow ops, oldest one blocked for 5671 sec, mon.node02 has slow ops

      services:
        mon: 3 daemons, quorum node01,node02,node03 (age 94m)
        mgr: node01(active, since 95m), standbys: node02, node03
        mds: 1/1 daemons up
        osd: 6 osds: 6 up (since 94m), 6 in (since 7d)

      data:
        volumes: 1/1 healthy
        pools:   5 pools, 113 pgs
        objects: 40 objects, 14 MiB
        usage:   138 MiB used, 240 GiB / 240 GiB avail
        pgs:     113 active+clean

    root@node04:~# ceph --id jack -s
      cluster:
        id:     9138c3cf-f529-4be6-ba84-97fcab59844b
        health: HEALTH_WARN
                1 slow ops, oldest one blocked for 5676 sec, mon.node02 has slow ops

      services:
        mon: 3 daemons, quorum node01,node02,node03 (age 94m)
        mgr: node01(active, since 95m), standbys: node02, node03
        mds: 1/1 daemons up
        osd: 6 osds: 6 up (since 94m), 6 in (since 7d)

      data:
        volumes: 1/1 healthy
        pools:   5 pools, 113 pgs
        objects: 40 objects, 14 MiB
        usage:   138 MiB used, 240 GiB / 240 GiB avail
        pgs:     113 active+clean

    root@node04:~# mkdir /data
    root@node04:~# mount -t ceph node01:6789,node02:6789,node03:6789:/ /data/ -o name=jack,secretfile=/etc/ceph/jack.key
    root@node04:~# df -hT
    Filesystem                         Type      Size  Used Avail Use% Mounted on
    udev                               devtmpfs  936M     0  936M   0% /dev
    tmpfs                              tmpfs     196M  1.2M  195M   1% /run
    /dev/mapper/ubuntu--vg-ubuntu--lv  ext4       20G  4.5G   15G  25% /
    tmpfs                              tmpfs     980M     0  980M   0% /dev/shm
    tmpfs                              tmpfs     5.0M     0  5.0M   0% /run/lock
    tmpfs                              tmpfs     980M     0  980M   0% /sys/fs/cgroup
    /dev/sda2                          ext4      976M  106M  804M  12% /boot
    /dev/loop0                         squashfs   56M   56M     0 100% /snap/core18/2128
    /dev/loop1                         squashfs   56M   56M     0 100% /snap/core18/1944
    /dev/loop2                         squashfs   32M   32M     0 100% /snap/snapd/10707
    /dev/loop3                         squashfs   70M   70M     0 100% /snap/lxd/19188
    tmpfs                              tmpfs     196M     0  196M   0% /run/user/0
    /dev/loop4                         squashfs   33M   33M     0 100% /snap/snapd/12704
    /dev/loop5                         squashfs   71M   71M     0 100% /snap/lxd/21029
    /dev/rbd0                          xfs      1014M   40M  975M   4% /mnt
    192.168.11.210:6789,192.168.11.220:6789,192.168.11.230:6789:/  ceph  76G  0  76G  0% /data
    root@node04:~# cd /data/
    root@node04:/data# echo 'test content' > testfile.txt
    root@node04:/data# cat testfile.txt
    test content
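The session above ships two files to the client: the full keyring and a bare key file for `secretfile=`. As a minimal sketch, the bare key can also be derived on the client from the keyring that was already copied. The helper names `keyring_key` and `write_secretfile` are hypothetical, and the tight file mode is my own suggestion rather than something the original session does.

```shell
# Hypothetical helper: print the secret stored in a Ceph keyring file,
# i.e. the value on the "key = ..." line.
keyring_key() {
    awk -F' = ' '$1 ~ /^[ \t]*key$/ { print $2; exit }' "$1"
}

# Hypothetical helper: write the bare secret to a file that only its
# owner can read (umask 077 applies inside the subshell only).
write_secretfile() {
    local keyring=$1 out=$2
    ( umask 077; keyring_key "$keyring" > "$out" )
}
```

On node04 this could be used as `write_secretfile /etc/ceph/ceph.client.jack.keyring /etc/ceph/jack.key`, assuming the paths from the session.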

The main commands for these steps are:

On the server side:

    root@node01:~/ceph-deploy# ceph-deploy mds create node01
    root@node01:~/ceph-deploy# ceph osd pool create cephfs-metadata 16 16
    root@node01:~/ceph-deploy# ceph osd pool create cephfs-data 32 32
    root@node01:~/ceph-deploy# ceph fs new mycephfs cephfs-metadata cephfs-data

    root@node01:~/ceph-deploy# ceph auth add client.jack mon 'allow r' mds 'allow rw' osd 'allow rwx pool=cephfs-data'
    root@node01:~/ceph-deploy# ceph auth get client.jack -o ceph.client.jack.keyring

    root@node01:~/ceph-deploy# scp ceph.client.jack.keyring node04:/etc/ceph/
    root@node01:~/ceph-deploy# ceph auth print-key client.jack > jack.key
    root@node01:~/ceph-deploy# scp jack.key node04:/etc/ceph/

On the client:

    root@node04:~# ceph --user jack -s
    root@node04:~# mkdir /data
    root@node04:~# mount -t ceph node01:6789,node02:6789,node03:6789:/ /data/ -o name=jack,secretfile=/etc/ceph/jack.key
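The `mount` command above does not survive a reboot. As a rough sketch, the same parameters can be turned into an `/etc/fstab` entry. The helper name `cephfs_fstab_line` is hypothetical, and the extra options `_netdev` and `noatime` are my assumptions (commonly used for network file systems), not something the original session sets.

```shell
# Hypothetical helper: print an /etc/fstab entry for a kernel CephFS mount.
# Arguments: monitor list, mount point, CephX user (without "client."), secret file.
cephfs_fstab_line() {
    local mons=$1 mountpoint=$2 user=$3 secretfile=$4
    # _netdev and noatime are assumed extras; drop them if they do not fit your setup.
    printf '%s:/ %s ceph name=%s,secretfile=%s,_netdev,noatime 0 0\n' \
        "$mons" "$mountpoint" "$user" "$secretfile"
}

# Same monitors, mount point, user and key file as in the mount command above.
cephfs_fstab_line node01:6789,node02:6789,node03:6789 /data jack /etc/ceph/jack.key
```

The printed line can be reviewed and then appended to `/etc/fstab` by hand; the function only prints, so nothing is changed on the system.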

2. MDS high availability for CephFS:

The nodes used in this experiment:

    192.168.11.210 node01
    192.168.11.220 node02
    192.168.11.230 node03
    192.168.11.240 node04    # newly added node: the client in the section above, and an MDS here

2.1 Create multiple MDS daemons

    root@node01:~/ceph-deploy# ceph-deploy mds create node02
    [ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf
    [ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy mds create node02
    [ceph_deploy.cli][INFO ] ceph-deploy options:
    [ceph_deploy.cli][INFO ] username : None
    [ceph_deploy.cli][INFO ] verbose : False
    [ceph_deploy.cli][INFO ] overwrite_conf : False
    [ceph_deploy.cli][INFO ] subcommand : create
    [ceph_deploy.cli][INFO ] quiet : False
    [ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7febd49fda50>
    [ceph_deploy.cli][INFO ] cluster : ceph
    [ceph_deploy.cli][INFO ] func : <function mds at 0x7febd49d2dd0>
    [ceph_deploy.cli][INFO ] ceph_conf : None
    [ceph_deploy.cli][INFO ] mds : [('node02', 'node02')]
    [ceph_deploy.cli][INFO ] default_release : False
    [ceph_deploy.mds][DEBUG ] Deploying mds, cluster ceph hosts node02:node02
    [node02][DEBUG ] connected to host: node02
    [node02][DEBUG ] detect platform information from remote host
    [node02][DEBUG ] detect machine type
    [ceph_deploy.mds][INFO ] Distro info: Ubuntu 20.04 focal
    [ceph_deploy.mds][DEBUG ] remote host will use systemd
    [ceph_deploy.mds][DEBUG ] deploying mds bootstrap to node02
    [node02][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf
    [node02][WARNIN] mds keyring does not exist yet, creating one
    [node02][DEBUG ] create a keyring file
    [node02][DEBUG ] create path if it doesn't exist
    [node02][INFO ] Running command: ceph --cluster ceph --name client.bootstrap-mds --keyring /var/lib/ceph/bootstrap-mds/ceph.keyring auth get-or-create mds.node02 osd allow rwx mds allow mon allow profile mds -o /var/lib/ceph/mds/ceph-node02/keyring
    [node02][INFO ] Running command: systemctl enable ceph-mds@node02
    [node02][WARNIN] Created symlink /etc/systemd/system/ceph-mds.target.wants/ceph-mds@node02.service → /lib/systemd/system/ceph-mds@.service.
    [node02][INFO ] Running command: systemctl start ceph-mds@node02
    [node02][INFO ] Running command: systemctl enable ceph.target
    root@node01:~/ceph-deploy# ceph mds stat
    mycephfs:1 {0=node01=up:active} 1 up:standby
    root@node01:~/ceph-deploy# ceph-deploy mds create node03
    [ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf
    [ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy mds create node03
    [ceph_deploy.cli][INFO ] ceph-deploy options:
    [ceph_deploy.cli][INFO ] username : None
    [ceph_deploy.cli][INFO ] verbose : False
    [ceph_deploy.cli][INFO ] overwrite_conf : False
    [ceph_deploy.cli][INFO ] subcommand : create
    [ceph_deploy.cli][INFO ] quiet : False
    [ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f1d42589a50>
    [ceph_deploy.cli][INFO ] cluster : ceph
    [ceph_deploy.cli][INFO ] func : <function mds at 0x7f1d4255edd0>
    [ceph_deploy.cli][INFO ] ceph_conf : None
    [ceph_deploy.cli][INFO ] mds : [('node03', 'node03')]
    [ceph_deploy.cli][INFO ] default_release : False
    [ceph_deploy.mds][DEBUG ] Deploying mds, cluster ceph hosts node03:node03
    [node03][DEBUG ] connected to host: node03
    [node03][DEBUG ] detect platform information from remote host
    [node03][DEBUG ] detect machine type
    [ceph_deploy.mds][INFO ] Distro info: Ubuntu 20.04 focal
    [ceph_deploy.mds][DEBUG ] remote host will use systemd
    [ceph_deploy.mds][DEBUG ] deploying mds bootstrap to node03
    [node03][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf
    [node03][WARNIN] mds keyring does not exist yet, creating one
    [node03][DEBUG ] create a keyring file
    [node03][DEBUG ] create path if it doesn't exist
    [node03][INFO ] Running command: ceph --cluster ceph --name client.bootstrap-mds --keyring /var/lib/ceph/bootstrap-mds/ceph.keyring auth get-or-create mds.node03 osd allow rwx mds allow mon allow profile mds -o /var/lib/ceph/mds/ceph-node03/keyring
    [node03][INFO ] Running command: systemctl enable ceph-mds@node03
    [node03][WARNIN] Created symlink /etc/systemd/system/ceph-mds.target.wants/ceph-mds@node03.service → /lib/systemd/system/ceph-mds@.service.
    [node03][INFO ] Running command: systemctl start ceph-mds@node03
    [node03][INFO ] Running command: systemctl enable ceph.target
    root@node01:~/ceph-deploy# ceph mds stat
    mycephfs:1 {0=node01=up:active} 1 up:standby
    root@node01:~/ceph-deploy# ceph mds stat
    mycephfs:1 {0=node01=up:active} 2 up:standby
    root@node01:~/ceph-deploy# ceph-deploy mds create node04
    [ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf
    [ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy mds create node04
    [ceph_deploy.cli][INFO ] ceph-deploy options:
    [ceph_deploy.cli][INFO ] username : None
    [ceph_deploy.cli][INFO ] verbose : False
    [ceph_deploy.cli][INFO ] overwrite_conf : False
    [ceph_deploy.cli][INFO ] subcommand : create
    [ceph_deploy.cli][INFO ] quiet : False
    [ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f72374d4a50>
    [ceph_deploy.cli][INFO ] cluster : ceph
    [ceph_deploy.cli][INFO ] func : <function mds at 0x7f72374a9dd0>
    [ceph_deploy.cli][INFO ] ceph_conf : None
    [ceph_deploy.cli][INFO ] mds : [('node04', 'node04')]
    [ceph_deploy.cli][INFO ] default_release : False
    [ceph_deploy.mds][DEBUG ] Deploying mds, cluster ceph hosts node04:node04
    root@node04's password:
    bash: python2: command not found
    [ceph_deploy.mds][ERROR ] connecting to host: node04 resulted in errors: IOError cannot send (already closed?)
    [ceph_deploy][ERROR ] GenericError: Failed to create 1 MDSs

    root@node01:~/ceph-deploy# ceph-deploy mds create node04
    [ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf
    [ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy mds create node04
    [ceph_deploy.cli][INFO ] ceph-deploy options:
    [ceph_deploy.cli][INFO ] username : None
    [ceph_deploy.cli][INFO ] verbose : False
    [ceph_deploy.cli][INFO ] overwrite_conf : False
    [ceph_deploy.cli][INFO ] subcommand : create
    [ceph_deploy.cli][INFO ] quiet : False
    [ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f4fa90baa50>
    [ceph_deploy.cli][INFO ] cluster : ceph
    [ceph_deploy.cli][INFO ] func : <function mds at 0x7f4fa908fdd0>
    [ceph_deploy.cli][INFO ] ceph_conf : None
    [ceph_deploy.cli][INFO ] mds : [('node04', 'node04')]
    [ceph_deploy.cli][INFO ] default_release : False
    [ceph_deploy.mds][DEBUG ] Deploying mds, cluster ceph hosts node04:node04
    root@node04's password:
    root@node04's password:
    [node04][DEBUG ] connected to host: node04
    [node04][DEBUG ] detect platform information from remote host
    [node04][DEBUG ] detect machine type
    [ceph_deploy.mds][INFO ] Distro info: Ubuntu 20.04 focal
    [ceph_deploy.mds][DEBUG ] remote host will use systemd
    [ceph_deploy.mds][DEBUG ] deploying mds bootstrap to node04
    [node04][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf
    [node04][WARNIN] mds keyring does not exist yet, creating one
    [node04][DEBUG ] create a keyring file
    [node04][DEBUG ] create path if it doesn't exist
    [node04][INFO ] Running command: ceph --cluster ceph --name client.bootstrap-mds --keyring /var/lib/ceph/bootstrap-mds/ceph.keyring auth get-or-create mds.node04 osd allow rwx mds allow mon allow profile mds -o /var/lib/ceph/mds/ceph-node04/keyring
    [node04][INFO ] Running command: systemctl enable ceph-mds@node04
    [node04][WARNIN] Created symlink /etc/systemd/system/ceph-mds.target.wants/ceph-mds@node04.service → /lib/systemd/system/ceph-mds@.service.
    [node04][INFO ] Running command: systemctl start ceph-mds@node04
    [node04][INFO ] Running command: systemctl enable ceph.target
    root@node01:~/ceph-deploy# ceph mds stat
    mycephfs:1 {0=node01=up:active} 3 up:standby
    root@node01:~/ceph-deploy# ceph mds stat
    mycephfs:1 {0=node01=up:active} 3 up:standby
    root@node01:~/ceph-deploy# ceph fs status
    mycephfs - 1 clients
    ========
    RANK  STATE    MDS      ACTIVITY     DNS    INOS   DIRS   CAPS
     0    active  node01  Reqs:    0 /s    11     14     12      2
          POOL         TYPE     USED  AVAIL
    cephfs-metadata  metadata   108k  75.9G
      cephfs-data      data    12.0k  75.9G
    STANDBY MDS
       node03
       node04
       node02
    MDS version: ceph version 16.2.5 (0883bdea7337b95e4b611c768c0279868462204a) pacific (stable)
    root@node01:~/ceph-deploy# ceph fs get mycephfs
    Filesystem 'mycephfs' (1)
    fs_name mycephfs
    epoch 5
    flags 12
    created 2021-08-24T21:27:30.730136+0800
    modified 2021-08-24T21:29:55.774998+0800
    tableserver 0
    root 0
    session_timeout 60
    session_autoclose 300
    max_file_size 1099511627776
    required_client_features {}
    last_failure 0
    last_failure_osd_epoch 0
    compat compat={},rocompat={},incompat={1=base v0.20,2=client writeable ranges,3=default file layouts on dirs,4=dir inode in separate object,5=mds uses versioned encoding,6=dirfrag is stored in omap,8=no anchor table,9=file layout v2,10=snaprealm v2}
    max_mds 1
    in 0
    up {0=65409}
    failed
    damaged
    stopped
    data_pools [5]
    metadata_pool 4
    inline_data disabled
    balancer
    standby_count_wanted 1
    [mds.node01{0:65409} state up:active seq 2 addr [v2:192.168.11.210:6810/3443284017,v1:192.168.11.210:6811/3443284017]]
    root@node01:~/ceph-deploy# ceph fs set mycephfs max_mds 2
    root@node01:~/ceph-deploy# ceph fs get mycephfs
    Filesystem 'mycephfs' (1)
    fs_name mycephfs
    epoch 12
    flags 12
    created 2021-08-24T21:27:30.730136+0800
    modified 2021-08-24T21:44:44.248039+0800
    tableserver 0
    root 0
    session_timeout 60
    session_autoclose 300
    max_file_size 1099511627776
    required_client_features {}
    last_failure 0
    last_failure_osd_epoch 0
    compat compat={},rocompat={},incompat={1=base v0.20,2=client writeable ranges,3=default file layouts on dirs,4=dir inode in separate object,5=mds uses versioned encoding,6=dirfrag is stored in omap,8=no anchor table,9=file layout v2,10=snaprealm v2}
    max_mds 2
    in 0,1
    up {0=65409,1=64432}
    failed
    damaged
    stopped
    data_pools [5]
    metadata_pool 4
    inline_data disabled
    balancer
    standby_count_wanted 1
    [mds.node01{0:65409} state up:active seq 2 addr [v2:192.168.11.210:6810/3443284017,v1:192.168.11.210:6811/3443284017]]
    [mds.node02{1:64432} state up:active seq 41 addr [v2:192.168.11.220:6808/4242415336,v1:192.168.11.220:6809/4242415336]]
    root@node01:~/ceph-deploy# ceph fs status
    mycephfs - 1 clients
    ========
    RANK  STATE    MDS      ACTIVITY     DNS    INOS   DIRS   CAPS
     0    active  node01  Reqs:    0 /s    11     14     12      2
     1    active  node02  Reqs:    0 /s    10     13     11      0
          POOL         TYPE     USED  AVAIL
    cephfs-metadata  metadata   180k  75.9G
      cephfs-data      data    12.0k  75.9G
    STANDBY MDS
       node03
       node04
    MDS version: ceph version 16.2.5 (0883bdea7337b95e4b611c768c0279868462204a) pacific (stable)

The main commands for these steps are:

On the deployment node (node01), add the other three MDS daemons:

    root@node01:~/ceph-deploy# ceph-deploy mds create node02
    root@node01:~/ceph-deploy# ceph-deploy mds create node03
    root@node01:~/ceph-deploy# ceph-deploy mds create node04

Check the status:

    root@node01:~/ceph-deploy# ceph mds stat
    root@node01:~/ceph-deploy# ceph fs status
    mycephfs - 1 clients
    ========
    RANK  STATE    MDS      ACTIVITY     DNS    INOS   DIRS   CAPS
     0    active  node01  Reqs:    0 /s    11     14     12      2
          POOL         TYPE     USED  AVAIL
    cephfs-metadata  metadata   108k  75.9G
      cephfs-data      data    12.0k  75.9G
    STANDBY MDS
       node03
       node04
       node02
    MDS version: ceph version 16.2.5 (0883bdea7337b95e4b611c768c0279868462204a) pacific (stable)
    root@node01:~/ceph-deploy# ceph fs get mycephfs
    root@node01:~/ceph-deploy# ceph fs set mycephfs max_mds 2
    root@node01:~/ceph-deploy# ceph fs status
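When repeating these steps it can help to confirm the MDS counts without reading the output by eye. The following is a minimal sketch that summarises one line of `ceph mds stat` output. The helper name `mds_stat_summary` is hypothetical, and the parsing assumes the line format seen in this walkthrough (Pacific 16.2.5); other releases may print it differently.

```shell
# Hypothetical helper: read one line of `ceph mds stat` output from stdin
# and print how many daemons are active and how many are standby.
mds_stat_summary() {
    local line active standby
    IFS= read -r line
    # Each active rank appears as "<rank>=<name>=up:active" inside the braces.
    active=$(printf '%s\n' "$line" | grep -o 'up:active' | wc -l | tr -d ' ')
    # The standby count is the number printed just before "up:standby", if any.
    standby=$(printf '%s\n' "$line" | sed -n 's/.* \([0-9][0-9]*\) up:standby.*/\1/p')
    printf 'active=%s standby=%s\n' "$active" "${standby:-0}"
}

# Sample line copied from this walkthrough; on a live cluster one would run:
#   ceph mds stat | mds_stat_summary
echo 'mycephfs:1 {0=node01=up:active} 3 up:standby' | mds_stat_summary
```

For the sample line this prints `active=1 standby=3`, matching the state right after the fourth MDS was added and before `max_mds` was raised.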

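At this point there are two active and two standby MDS daemons. If either active daemon fails, one of the two standbys takes over automatically: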

    root@node01:~/ceph-deploy# ceph fs status
    mycephfs - 1 clients
    ========
    RANK  STATE    MDS      ACTIVITY     DNS    INOS   DIRS   CAPS
     0    active  node01  Reqs:    0 /s    11     14     12      2
     1    active  node02  Reqs:    0 /s    10     13     11      0
          POOL         TYPE     USED  AVAIL
    cephfs-metadata  metadata   180k  75.9G
      cephfs-data      data    12.0k  75.9G
    STANDBY MDS
       node03
       node04
    MDS version: ceph version 16.2.5 (0883bdea7337b95e4b611c768c0279868462204a) pacific (stable)

Restart the MDS daemon on node01:

    root@node01:~/ceph-deploy# systemctl restart ceph-mds@node01.service
    root@node01:~/ceph-deploy# ceph fs status
    mycephfs - 1 clients
    ========
    RANK  STATE    MDS      ACTIVITY     DNS    INOS   DIRS   CAPS
     0    active  node04  Reqs:    0 /s     1      4      2      1
     1    active  node02  Reqs:    0 /s    10     13     11      0
          POOL         TYPE     USED  AVAIL
    cephfs-metadata  metadata   180k  75.9G
      cephfs-data      data    12.0k  75.9G
    STANDBY MDS
       node03
    MDS version: ceph version 16.2.5 (0883bdea7337b95e4b611c768c0279868462204a) pacific (stable)
    root@node01:~/ceph-deploy# ceph fs status
    mycephfs - 1 clients
    ========
    RANK  STATE    MDS      ACTIVITY     DNS    INOS   DIRS   CAPS
     0    active  node04  Reqs:    0 /s    11     14     12      1
     1    active  node02  Reqs:    0 /s    10     13     11      0
          POOL         TYPE     USED  AVAIL
    cephfs-metadata  metadata   180k  75.9G
      cephfs-data      data    12.0k  75.9G
    STANDBY MDS
       node03
       node01
    MDS version: ceph version 16.2.5 (0883bdea7337b95e4b611c768c0279868462204a) pacific (stable)
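To compare which daemon holds each rank before and after a failover, the rank table can be reduced to "rank name" pairs. This is only a sketch: the helper name `active_ranks` is hypothetical, and it assumes the plain-column `ceph fs status` layout shown in this walkthrough.

```shell
# Hypothetical helper: read `ceph fs status` output from stdin and print
# "<rank> <mds-name>" for every rank whose state is "active".
active_ranks() {
    awk '$1 ~ /^[0-9]+$/ && $2 == "active" { print $1, $3 }'
}

# Sample taken from the output after node01's MDS was restarted;
# on a live cluster one would run: ceph fs status | active_ranks
active_ranks <<'EOF'
mycephfs - 1 clients
========
RANK  STATE    MDS      ACTIVITY     DNS    INOS   DIRS   CAPS
 0    active  node04  Reqs:    0 /s    11     14     12      1
 1    active  node02  Reqs:    0 /s    10     13     11      0
      POOL         TYPE     USED  AVAIL
cephfs-metadata  metadata   180k  75.9G
  cephfs-data      data    12.0k  75.9G
STANDBY MDS
   node03
   node01
EOF
```

Saving the output before and after the restart and running `diff` on the two files shows at a glance that rank 0 moved from node01 to node04.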

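To get a one-to-one pairing, with node01 and node02 backing each other up and node03 and node04 backing each other up, set it up as follows (mostly a matter of the configuration file). Note that the `mds_standby_for_name` and `mds_standby_replay` options used below were made obsolete in Ceph Nautilus, so on the Pacific 16.2.5 cluster used here they may have no effect: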

    root@node01:~/ceph-deploy# vim ceph.conf
    root@node01:~/ceph-deploy# cat ceph.conf
    [global]
    fsid = 9138c3cf-f529-4be6-ba84-97fcab59844b
    public_network = 192.168.11.0/24
    cluster_network = 192.168.22.0/24
    mon_initial_members = node01
    mon_host = 192.168.11.210
    auth_cluster_required = cephx
    auth_service_required = cephx
    auth_client_required = cephx

    [mds.node04]
    mds_standby_for_name = node03
    mds_standby_replay = true
    [mds.node02]
    mds_standby_for_name = node04
    mds_standby_replay = true
    root@node01:~/ceph-deploy# vim ceph.conf
    root@node01:~/ceph-deploy# cat ceph.conf
    [global]
    fsid = 9138c3cf-f529-4be6-ba84-97fcab59844b
    public_network = 192.168.11.0/24
    cluster_network = 192.168.22.0/24
    mon_initial_members = node01
    mon_host = 192.168.11.210
    auth_cluster_required = cephx
    auth_service_required = cephx
    auth_client_required = cephx

    [mds.node04]
    mds_standby_for_name = node03
    mds_standby_replay = true
    [mds.node02]
    mds_standby_for_name = node01
    mds_standby_replay = true
    root@node01:~/ceph-deploy# ceph-deploy config push node01
    [ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf
    [ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy config push node01
    [ceph_deploy.cli][INFO ] ceph-deploy options:
    [ceph_deploy.cli][INFO ] username : None
    [ceph_deploy.cli][INFO ] verbose : False
    [ceph_deploy.cli][INFO ] overwrite_conf : False
    [ceph_deploy.cli][INFO ] subcommand : push
    [ceph_deploy.cli][INFO ] quiet : False
    [ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f4fdbb800f0>
    [ceph_deploy.cli][INFO ] cluster : ceph
    [ceph_deploy.cli][INFO ] client : ['node01']
    [ceph_deploy.cli][INFO ] func : <function config at 0x7f4fdbbd6350>
    [ceph_deploy.cli][INFO ] ceph_conf : None
    [ceph_deploy.cli][INFO ] default_release : False
    [ceph_deploy.config][DEBUG ] Pushing config to node01
    [node01][DEBUG ] connected to host: node01
    [node01][DEBUG ] detect platform information from remote host
    [node01][DEBUG ] detect machine type
    [node01][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf
    [ceph_deploy.config][ERROR ] RuntimeError: config file /etc/ceph/ceph.conf exists with different content; use --overwrite-conf to overwrite
    [ceph_deploy][ERROR ] GenericError: Failed to config 1 hosts

    root@node01:~/ceph-deploy# ceph-deploy --overwrite-conf config push node01
    [ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf
    [ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy --overwrite-conf config push node01
    [ceph_deploy.cli][INFO ] ceph-deploy options:
    [ceph_deploy.cli][INFO ] username : None
    [ceph_deploy.cli][INFO ] verbose : False
    [ceph_deploy.cli][INFO ] overwrite_conf : True
    [ceph_deploy.cli][INFO ] subcommand : push
    [ceph_deploy.cli][INFO ] quiet : False
    [ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f201b0280f0>
    [ceph_deploy.cli][INFO ] cluster : ceph
    [ceph_deploy.cli][INFO ] client : ['node01']
    [ceph_deploy.cli][INFO ] func : <function config at 0x7f201b07e350>
    [ceph_deploy.cli][INFO ] ceph_conf : None
    [ceph_deploy.cli][INFO ] default_release : False
    [ceph_deploy.config][DEBUG ] Pushing config to node01
    [node01][DEBUG ] connected to host: node01
    [node01][DEBUG ] detect platform information from remote host
    [node01][DEBUG ] detect machine type
    [node01][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf
    root@node01:~/ceph-deploy# ceph-deploy --overwrite-conf config push node02 node02 node04
    [ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf
    [ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy --overwrite-conf config push node02 node02 node04
    [ceph_deploy.cli][INFO ] ceph-deploy options:
    [ceph_deploy.cli][INFO ] username : None
    [ceph_deploy.cli][INFO ] verbose : False
    [ceph_deploy.cli][INFO ] overwrite_conf : True
    [ceph_deploy.cli][INFO ] subcommand : push
    [ceph_deploy.cli][INFO ] quiet : False
    [ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f549232a0f0>
    [ceph_deploy.cli][INFO ] cluster : ceph
    [ceph_deploy.cli][INFO ] client : ['node02', 'node02', 'node04']
    [ceph_deploy.cli][INFO ] func : <function config at 0x7f5492380350>
    [ceph_deploy.cli][INFO ] ceph_conf : None
    [ceph_deploy.cli][INFO ] default_release : False
    [ceph_deploy.config][DEBUG ] Pushing config to node02
    [node02][DEBUG ] connected to host: node02
    [node02][DEBUG ] detect platform information from remote host
    [node02][DEBUG ] detect machine type
    [node02][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf
    [ceph_deploy.config][DEBUG ] Pushing config to node02
    [node02][DEBUG ] connected to host: node02
    [node02][DEBUG ] detect platform information from remote host
    [node02][DEBUG ] detect machine type
    [node02][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf
    [ceph_deploy.config][DEBUG ] Pushing config to node04
    root@node04's password:
    root@node04's password:
    [node04][DEBUG ] connected to host: node04
    [node04][DEBUG ] detect platform information from remote host
    [node04][DEBUG ] detect machine type
    [node04][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf
    root@node01:~/ceph-deploy# systemctl restart ceph-mds@node01.service
    root@node01:~/ceph-deploy# ceph fs status
    mycephfs - 1 clients
    ========
    RANK  STATE    MDS      ACTIVITY     DNS    INOS   DIRS   CAPS
     0    active  node04  Reqs:    0 /s    11     14     12      1
     1    active  node02  Reqs:    0 /s    10     13     11      0
          POOL         TYPE     USED  AVAIL
    cephfs-metadata  metadata   180k  75.9G
      cephfs-data      data    12.0k  75.9G
    STANDBY MDS
       node03
       node01
    MDS version: ceph version 16.2.5 (0883bdea7337b95e4b611c768c0279868462204a) pacific (stable)
    root@node01:~/ceph-deploy# ceph-deploy admin node01 node02 node03 node04
    [ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf
    [ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy admin node01 node02 node03 node04
    [ceph_deploy.cli][INFO ] ceph-deploy options:
    [ceph_deploy.cli][INFO ] username : None
    [ceph_deploy.cli][INFO ] verbose : False
    [ceph_deploy.cli][INFO ] overwrite_conf : False
    [ceph_deploy.cli][INFO ] quiet : False
    [ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f9fa57ccf50>
    [ceph_deploy.cli][INFO ] cluster : ceph
    [ceph_deploy.cli][INFO ] client : ['node01', 'node02', 'node03', 'node04']
    [ceph_deploy.cli][INFO ] func : <function admin at 0x7f9fa58a54d0>
    [ceph_deploy.cli][INFO ] ceph_conf : None
    [ceph_deploy.cli][INFO ] default_release : False
    [ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to node01
    [node01][DEBUG ] connected to host: node01
    [node01][DEBUG ] detect platform information from remote host
    [node01][DEBUG ] detect machine type
    [node01][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf
    [ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to node02
    [node02][DEBUG ] connected to host: node02
    [node02][DEBUG ] detect platform information from remote host
    [node02][DEBUG ] detect machine type
    [node02][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf
    [ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to node03
    [node03][DEBUG ] connected to host: node03
    [node03][DEBUG ] detect platform information from remote host
    [node03][DEBUG ] detect machine type
    [node03][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf
    [ceph_deploy.admin][ERROR ] RuntimeError: config file /etc/ceph/ceph.conf exists with different content; use --overwrite-conf to overwrite
    [ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to node04
    root@node04's password:
    Permission denied, please try again.
    root@node04's password:    (I forgot to set up passwordless SSH for node04; no big deal, just type the password)
    [node04][DEBUG ] connected to host: node04
    [node04][DEBUG ] detect platform information from remote host
    [node04][DEBUG ] detect machine type
    [node04][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf
    [ceph_deploy][ERROR ] GenericError: Failed to configure 1 admin hosts

    root@node01:~/ceph-deploy# ceph-deploy admin node01 node02 node03 node04 --overwrite-conf
    usage: ceph-deploy [-h] [-v | -q] [--version] [--username USERNAME]
                       [--overwrite-conf] [--ceph-conf CEPH_CONF]
                       COMMAND ...
    ceph-deploy: error: unrecognized arguments: --overwrite-conf
    root@node01:~/ceph-deploy# ceph-deploy --overwrite-conf admin node01 node02 node03 node04
    [ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf
    [ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/bin/ceph-deploy --overwrite-conf admin node01 node02 node03 node04
    [ceph_deploy.cli][INFO ] ceph-deploy options:
    [ceph_deploy.cli][INFO ] username : None
    [ceph_deploy.cli][INFO ] verbose : False
    [ceph_deploy.cli][INFO ] overwrite_conf : True
    [ceph_deploy.cli][INFO ] quiet : False
    [ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f41f97a5f50>
    [ceph_deploy.cli][INFO ] cluster : ceph
    [ceph_deploy.cli][INFO ] client : ['node01', 'node02', 'node03', 'node04']
    [ceph_deploy.cli][INFO ] func : <function admin at 0x7f41f987e4d0>
    [ceph_deploy.cli][INFO ] ceph_conf : None
    [ceph_deploy.cli][INFO ] default_release : False
    [ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to node01
    [node01][DEBUG ] connected to host: node01
    [node01][DEBUG ] detect platform information from remote host
    [node01][DEBUG ] detect machine type
    [node01][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf
    [ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to node02
    [node02][DEBUG ] connected to host: node02
    [node02][DEBUG ] detect platform information from remote host
    [node02][DEBUG ] detect machine type
    [node02][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf
    [ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to node03
    [node03][DEBUG ] connected to host: node03
    [node03][DEBUG ] detect platform information from remote host
    [node03][DEBUG ] detect machine type
    [node03][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf
    [ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to node04
    root@node04's password:
    root@node04's password:
    [node04][DEBUG ] connected to host: node04
    [node04][DEBUG ] detect platform information from remote host
    [node04][DEBUG ] detect machine type
    [node04][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf
    root@node01:~/ceph-deploy# ls
    ceph.bootstrap-mds.keyring  ceph.bootstrap-osd.keyring  ceph.client.admin.keyring  ceph.conf             ceph.mon.keyring
    ceph.bootstrap-mgr.keyring  ceph.bootstrap-rgw.keyring  ceph.client.jack.keyring   ceph-deploy-ceph.log  jack.key
    root@node01:~/ceph-deploy# vim ceph.conf
    root@node01:~/ceph-deploy# ceph fs status
    mycephfs - 1 clients
    ========
    RANK  STATE    MDS      ACTIVITY     DNS    INOS   DIRS   CAPS
     0    active  node04  Reqs:    0 /s    11     14     12      1
     1    active  node02  Reqs:    0 /s    10     13     11      0
          POOL         TYPE     USED  AVAIL
    cephfs-metadata  metadata   180k  75.9G
      cephfs-data      data    12.0k  75.9G
    STANDBY MDS
       node03
       node01
    MDS version: ceph version 16.2.5 (0883bdea7337b95e4b611c768c0279868462204a) pacific (stable)
    root@node01:~/ceph-deploy# ceph fs status
    mycephfs - 1 clients
    ========
    RANK  STATE    MDS      ACTIVITY     DNS    INOS   DIRS   CAPS
     0    active  node02  Reqs:    0 /s     1      4      2      0
     1    active  node01  Reqs:    0 /s    10     13     11      0
          POOL         TYPE     USED  AVAIL
    cephfs-metadata  metadata   180k  75.9G
      cephfs-data      data    12.0k  75.9G
    STANDBY MDS
       node03
    MDS version: ceph version 16.2.5 (0883bdea7337b95e4b611c768c0279868462204a) pacific (stable)
    root@node01:~/ceph-deploy# ceph fs status
    mycephfs - 1 clients
    ========
    RANK  STATE    MDS      ACTIVITY     DNS    INOS   DIRS   CAPS
     0    active  node02  Reqs:    0 /s    11     14     12      1
     1    active  node01  Reqs:    0 /s    10     13     11      0
          POOL         TYPE     USED  AVAIL
    cephfs-metadata  metadata   180k  75.9G
      cephfs-data      data    12.0k  75.9G
    STANDBY MDS
       node03
       node04
    MDS version: ceph version 16.2.5 (0883bdea7337b95e4b611c768c0279868462204a) pacific (stable)
    root@node01:~/ceph-deploy# ceph fs status
    mycephfs - 1 clients
    ========
    RANK  STATE    MDS      ACTIVITY     DNS    INOS   DIRS   CAPS
     0    active  node02  Reqs:    0 /s    11     14     12      1
     1    active  node01  Reqs:    0 /s    10     13     11      0
          POOL         TYPE     USED  AVAIL
    cephfs-metadata  metadata   180k  75.9G
      cephfs-data      data    12.0k  75.9G
    STANDBY MDS
       node03
       node04
    MDS version: ceph version 16.2.5 (0883bdea7337b95e4b611c768c0279868462204a) pacific (stable)
    root@node01:~/ceph-deploy# cat ceph.c
    cat: ceph.c: No such file or directory
    root@node01:~/ceph-deploy# cat ceph.conf
    [global]
    fsid = 9138c3cf-f529-4be6-ba84-97fcab59844b
    public_network = 192.168.11.0/24
    cluster_network = 192.168.22.0/24
    mon_initial_members = node01
    mon_host = 192.168.11.210
    auth_cluster_required = cephx
    auth_service_required = cephx
    auth_client_required = cephx

    [mds.node04]
    mds_standby_for_name = node03
    mds_standby_replay = true
    [mds.node02]
    mds_standby_for_name = node01
    mds_standby_replay = true
    root@node01:~/ceph-deploy# systemctl restart ceph-mds@node01.service
    root@node01:~/ceph-deploy# ceph fs status
    mycephfs - 1 clients
    ========
    RANK  STATE    MDS      ACTIVITY     DNS    INOS   DIRS   CAPS
     0    active  node02  Reqs:    0 /s    11     14     12      1
     1    active  node04  Reqs:    0 /s    10     13     12      0
          POOL         TYPE     USED  AVAIL
    cephfs-metadata  metadata   180k  75.9G
      cephfs-data      data    12.0k  75.9G
    STANDBY MDS
       node03
       node01
    MDS version: ceph version 16.2.5 (0883bdea7337b95e4b611c768c0279868462204a) pacific (stable)
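On Nautilus and later, standby-replay is enabled per file system with the `allow_standby_replay` flag rather than per daemon in `ceph.conf`; a standby then follows whichever active rank is available instead of one named peer. The following is a dry-run sketch only: the helper name `standby_replay_plan` is hypothetical, and it prints the commands instead of running them so they can be reviewed first.

```shell
# Hypothetical dry-run helper: print (do not execute) the commands that
# enable standby-replay for a file system and then show its status.
standby_replay_plan() {
    local fs=$1
    printf 'ceph fs set %s allow_standby_replay true\n' "$fs"
    printf 'ceph fs status %s\n' "$fs"
}

# File system name taken from this walkthrough.
standby_replay_plan mycephfs
```

Once the printed commands look right, they can be run by hand or piped to a shell on a node that holds the admin keyring, for example `standby_replay_plan mycephfs | sh`.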

That's all for now!

================================ The full log of my session follows ===================================