ubuntu@Ubuntu:~$ sudo gedit /etc/hostname

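2. Create the hnu user

Set up a dedicated group and user for the Hadoop cluster.

ubuntu@Ubuntu:~$ sudo groupadd hadoop # create the hadoop group

ubuntu@Ubuntu:~$ sudo useradd -s /bin/bash -d /home/hnu -m -g hadoop hnu # add user hnu with hadoop as its primary group

ubuntu@Ubuntu:~$ sudo passwd hnu # set the login password for hnu

ubuntu@Ubuntu:~$ su - hnu # switch to the hnu user (login shell, starting in /home/hnu)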
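To confirm that the account ended up in the intended group, a small check along the following lines may help. This is only a sketch and not part of the original procedure; the helper name `in_group` is hypothetical.

```shell
# Hypothetical helper: succeeds if user $1 belongs to group $2.
in_group() {
  # id -nG prints the names of all groups the user belongs to, space-separated.
  for g in $(id -nG "$1" 2>/dev/null); do
    [ "$g" = "$2" ] && return 0
  done
  return 1
}

if in_group hnu hadoop; then
  echo "hnu is in group hadoop"
else
  echo "hnu is missing or not in group hadoop"
fi
```

If the second message appears, re-running the `groupadd` and `useradd` steps above is the likely remedy.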

3. Install SSH and configure passwordless SSH login

On Ubuntu 14.04:

ubuntu@Ubuntu:~$ sudo apt-get update

ubuntu@Ubuntu:~$ sudo apt-get install openssh-client=1:6.6p1-2ubuntu1

ubuntu@Ubuntu:~$ sudo apt-get install openssh-server

hnu@Ubuntu:~$ ssh-keygen -t dsa -P '' -f ~/.ssh/id_dsa

The command above generates a public/private key pair in the .ssh directory under the user's home directory, as follows:

hnu@Ubuntu:~$ cd .ssh

hnu@Ubuntu:~/.ssh$ ls

id_dsa.pub is the public key and id_dsa is the private key. Next, append the public key to the authorized_keys file. This step is required:

hnu@Ubuntu:~/.ssh$ cat id_dsa.pub >> authorized_keys

hnu@Ubuntu:~/.ssh$ ls

hnu@Ubuntu:~$ ssh localhost
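Running the `cat ... >> authorized_keys` step twice appends the same key twice, and sshd in its default strict mode may ignore an authorized_keys file whose permissions are too open. The sketch below shows one way to make the step repeatable; the helper name `add_key_once` is hypothetical and not part of the original procedure.

```shell
# Hypothetical helper: add public key file $1 to authorized_keys file $2
# only if that exact line is not already present, then tighten permissions.
add_key_once() {
  pub=$1
  auth=$2
  mkdir -p "$(dirname "$auth")"
  touch "$auth"
  # -x: match whole lines, -F: fixed strings, -f: read the pattern from the key file
  grep -qxF -f "$pub" "$auth" || cat "$pub" >> "$auth"
  chmod 700 "$(dirname "$auth")"
  chmod 600 "$auth"
}

# Usage on the machine from this guide (run as hnu):
#   add_key_once ~/.ssh/id_dsa.pub ~/.ssh/authorized_keys
```

The same helper applies to an RSA key created with `ssh-keygen -t rsa`, which is worth considering on Ubuntu 16.04 because its OpenSSH release disables DSA keys by default.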

On Ubuntu 16.04:

ubuntu@Ubuntu:~$ sudo apt-get install openssh-server

If the system is Ubuntu 16.04, this step looks as shown in the figure below.

4. Install the Java environment

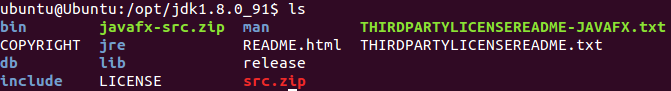

Extract the JDK

ubuntu@Ubuntu:/opt$ sudo tar -zxvf jdk-8u91-linux-x64.tar.gz -C /opt

ubuntu@Ubuntu:~$ sudo gedit /etc/profile

#JAVA

export JAVA_HOME=/opt/jdk1.8.0_91

export PATH=$PATH:$JAVA_HOME/bin

export CLASSPATH=$CLASSPATH:$JAVA_HOME/lib:$JAVA_HOME/jre/lib

#HADOOP

export HADOOP_PREFIX=/home/hnu/hadoop-2.6.0

export PATH=$PATH:$HADOOP_PREFIX/bin:$HADOOP_PREFIX/sbin

export HADOOP_COMMON_LIB_NATIVE_DIR=${HADOOP_PREFIX}/lib/native

export HADOOP_OPTS="-Djava.library.path=$HADOOP_PREFIX/lib:$HADOOP_PREFIX/lib/native"
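After saving /etc/profile and loading it with `source /etc/profile`, it can be useful to confirm that the variables point at real installations. The following is a minimal sketch; the helper name `check_tool` is hypothetical, and the fallback paths are simply the ones used in this guide.

```shell
# Hypothetical helper: report whether executable $2 exists under directory $1.
check_tool() {
  if [ -x "$1/$2" ]; then
    echo "ok: $1/$2"
  else
    echo "missing: $1/$2"
    return 1
  fi
}

# Fall back to the paths used in this guide when the variables are unset.
check_tool "${JAVA_HOME:-/opt/jdk1.8.0_91}" bin/java || true
check_tool "${HADOOP_PREFIX:-/home/hnu/hadoop-2.6.0}" bin/hdfs || true
```

A "missing" line usually means the archive was extracted somewhere else or the version in the path does not match the downloaded file.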

5. Install Hadoop

Extract Hadoop

ubuntu@Ubuntu:~$ sudo tar -zxvf hadoop-2.6.0.tar.gz -C /home/hnu

core-site.xml:

ubuntu@Ubuntu:/home/hnu/hadoop-2.6.0/etc/hadoop$ sudo gedit core-site.xml

<configuration>

<property>

<name>hadoop.tmp.dir</name>

<value>file:/home/hnu/hadoop-2.6.0/tmp</value>

<description>A base for other temporary directories.</description>

</property>

<property>

<name>fs.defaultFS</name>

<value>hdfs://localhost:9000</value>

</property>

</configuration>

hdfs-site.xml:

ubuntu@Ubuntu:/home/hnu/hadoop-2.6.0/etc/hadoop$ sudo gedit hdfs-site.xml

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/home/hnu/hadoop-2.6.0/tmp/dfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/home/hnu/hadoop-2.6.0/tmp/dfs/data</value>

</property>

</configuration>

mapred-site.xml:

ubuntu@Ubuntu:/home/hnu/hadoop-2.6.0/etc/hadoop$ sudo gedit mapred-site.xml

<configuration>

<property>

<name>mapred.job.tracker</name>

<value>localhost:9001</value>

</property>

</configuration>
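A typo in a property value is easy to miss in these files. As a rough aid, the sketch below prints the value of a named property so it can be checked by eye. The helper name `get_prop` is hypothetical, and the parsing is deliberately naive: it assumes each `<name>` and `<value>` element sits on a single line and that `<name>` comes before `<value>` inside every `<property>`, as in the files shown above.

```shell
# Hypothetical helper: print the <value> of property $2 in Hadoop config file $1.
# Naive line-based parsing, not a real XML parser.
get_prop() {
  awk -v key="$2" '
    /<name>/  { n = $0; sub(/.*<name>/, "", n); sub(/<\/name>.*/, "", n) }
    /<value>/ { v = $0; sub(/.*<value>/, "", v); sub(/<\/value>.*/, "", v)
                if (n == key) { print v; exit } }
  ' "$1"
}

# Usage on the machine from this guide:
#   cd /home/hnu/hadoop-2.6.0/etc/hadoop
#   get_prop core-site.xml fs.defaultFS
#   get_prop hdfs-site.xml dfs.replication
```

Once the environment variables are loaded, `hdfs getconf -confKey fs.defaultFS` reports the value that Hadoop itself resolves, which is the more authoritative check.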

hadoop-env.sh:

ubuntu@Ubuntu:/home/hnu/hadoop-2.6.0/etc/hadoop$ sudo gedit hadoop-env.sh

export JAVA_HOME=/opt/jdk1.8.0_91

yarn-env.sh:

ubuntu@Ubuntu:/home/hnu/hadoop-2.6.0/etc/hadoop$ sudo gedit yarn-env.sh

export JAVA_HOME=/opt/jdk1.8.0_91

6. Grant permissions

root@Ubuntu:/home/hnu# chown -R hnu:hadoop hadoop-2.6.0
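Because the archive was extracted and edited with sudo, files left owned by root would stop the hnu user from writing logs and HDFS data. The sketch below lists any entries that still have the wrong owner or group; the helper name `not_owned` is hypothetical.

```shell
# Hypothetical helper: list up to five entries under directory $1 that are
# not owned by user $2 or not in group $3. No output means ownership is consistent.
not_owned() {
  find "$1" \( ! -user "$2" -o ! -group "$3" \) -print | head -n 5
}

# Usage on the machine from this guide:
#   not_owned /home/hnu/hadoop-2.6.0 hnu hadoop
```

Any path printed by the helper suggests the `chown -R` step needs to be repeated.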

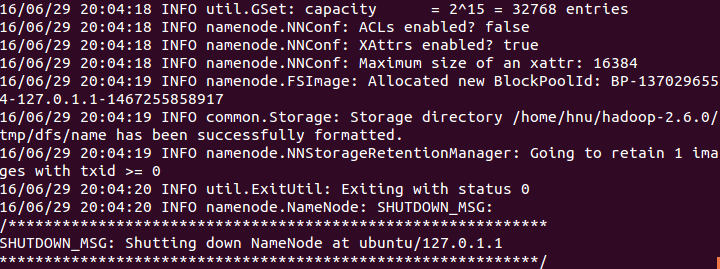

7. Format HDFS

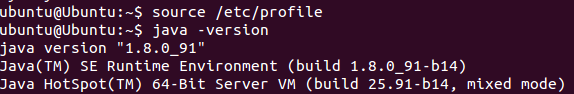

hnu@Ubuntu:~$ source /etc/profile

hnu@Ubuntu:~$ hdfs namenode -format
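Formatting is meant to be done once: formatting a NameNode that is already in use discards its existing HDFS metadata. A formatted NameNode directory contains a current/VERSION file, so a guard like the following sketch can tell the two cases apart before the command is run again. The helper name `is_formatted` is hypothetical; the path is the dfs.namenode.name.dir value from hdfs-site.xml in this guide.

```shell
# Hypothetical helper: succeeds if NameNode directory $1 already holds
# formatted metadata (a current/VERSION file).
is_formatted() {
  [ -f "$1/current/VERSION" ]
}

# Path taken from dfs.namenode.name.dir in this guide's hdfs-site.xml.
name_dir=/home/hnu/hadoop-2.6.0/tmp/dfs/name
if is_formatted "$name_dir"; then
  echo "already formatted; skip 'hdfs namenode -format' to keep existing metadata"
else
  echo "not formatted yet; 'hdfs namenode -format' is needed"
fi
```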

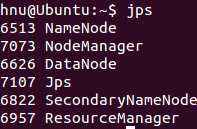

8. Start/stop Hadoop

start-all.sh/stop-all.sh

start-dfs.sh/start-yarn.sh

stop-dfs.sh/stop-yarn.sh

hnu@Ubuntu:~$ start-dfs.sh

hnu@Ubuntu:~$ start-yarn.sh
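After both scripts finish, the JDK's `jps` tool lists the running Java processes. The sketch below compares that list against the five daemons a single-node setup like this one typically runs and prints any that are absent. The helper name `missing_daemons` and the variable `expected_daemons` are hypothetical.

```shell
# Daemons a single-node setup typically runs after start-dfs.sh and start-yarn.sh.
expected_daemons="NameNode DataNode SecondaryNameNode ResourceManager NodeManager"

# Hypothetical helper: reads `jps` output ("<pid> <name>" per line) on stdin
# and prints each expected daemon that does not appear in it.
missing_daemons() {
  out=$(cat)
  for d in $expected_daemons; do
    printf '%s\n' "$out" |
      awk -v d="$d" '$2 == d { found = 1 } END { exit !found }' || echo "$d"
  done
}

# Usage on the live machine (requires the JDK's jps on PATH):
#   jps | missing_daemons
```

No output means all five daemons were found; for any name that is printed, the matching log file under the Hadoop logs directory is the usual place to look next.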

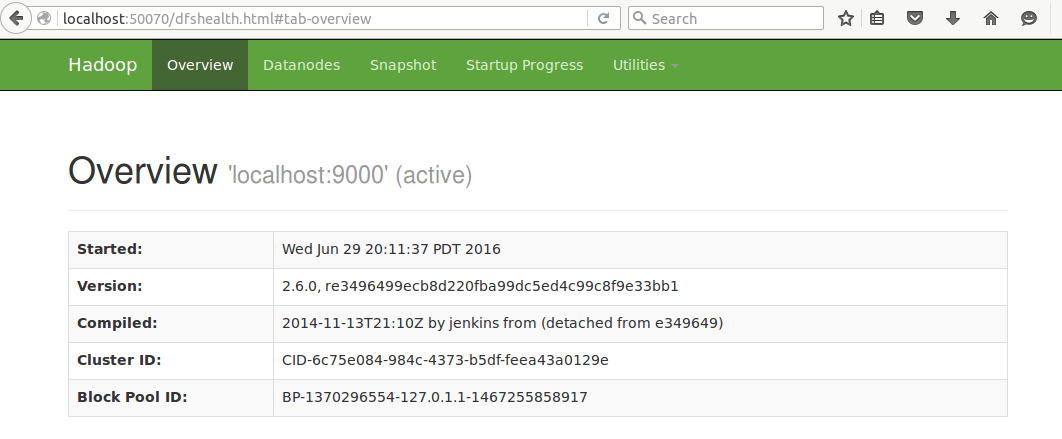

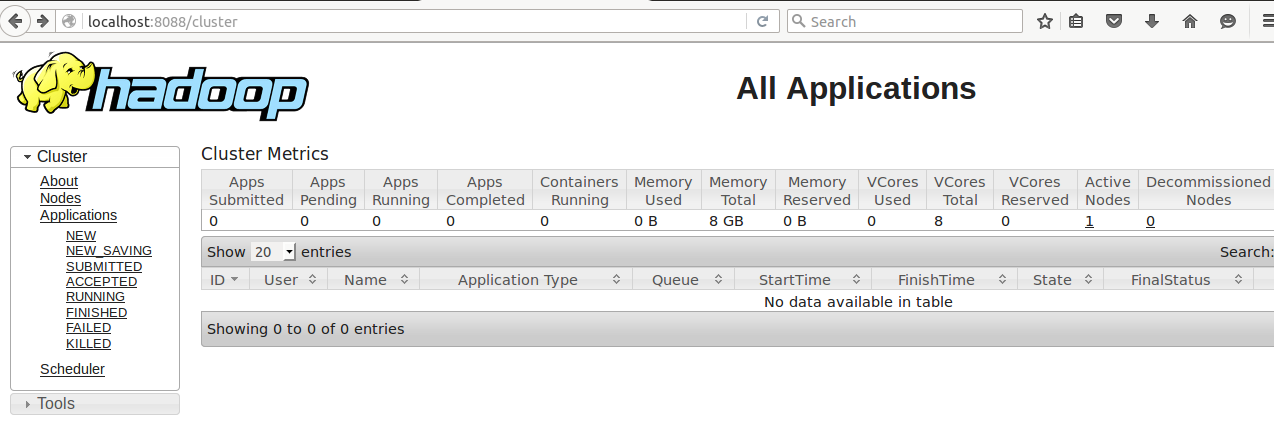

View the HDFS and ResourceManager (RM) web UIs

http://localhost:50070 (HDFS NameNode) / http://localhost:8088 (ResourceManager)