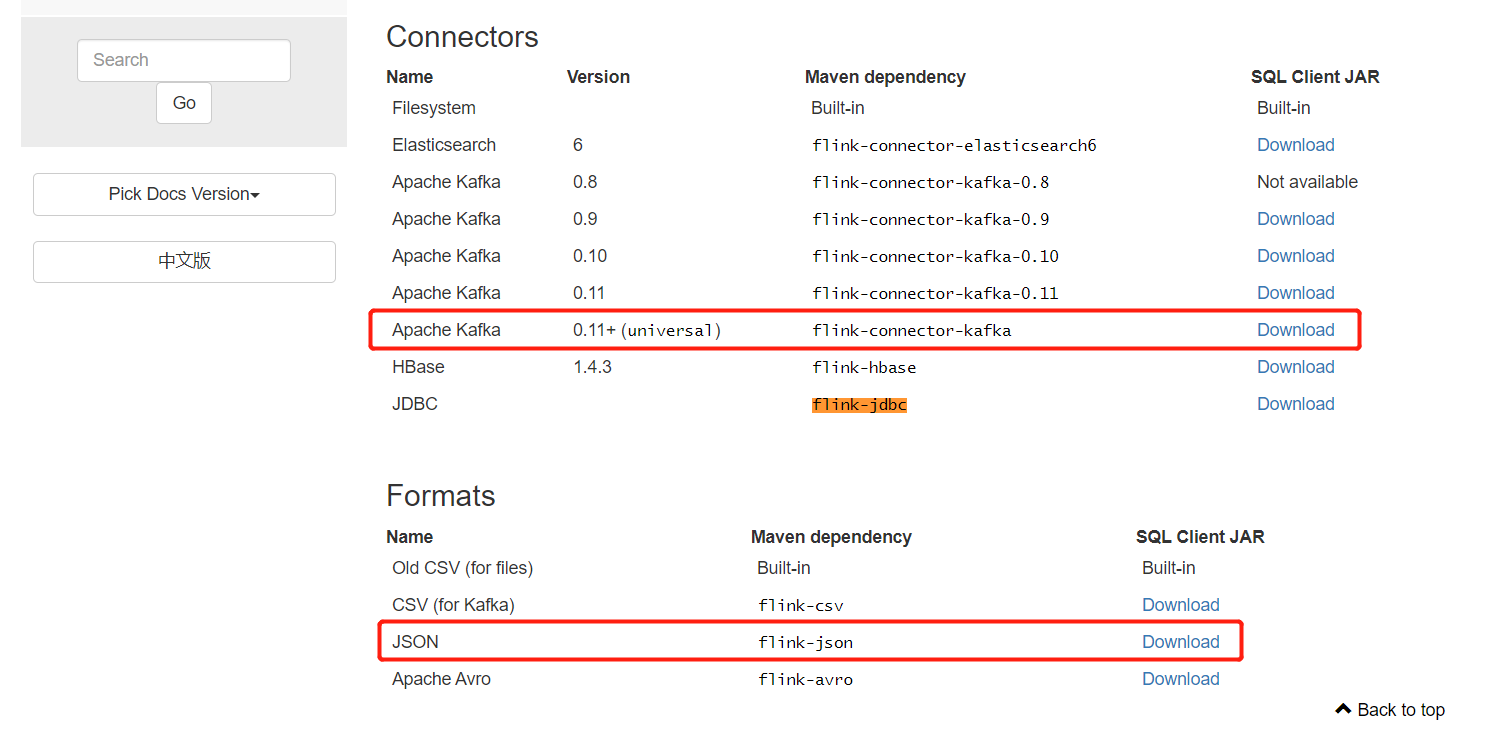

需要依赖包,并放到flink/lib目录中:

下载地址: https://ci.apache.org/projects/flink/flink-docs-release-1.10/dev/table/connect.html#jdbc-connector

-

flink-json-1.10.0-sql-jar.jar

-

flink-sql-connector-kafka_2.11-1.10.0.jar

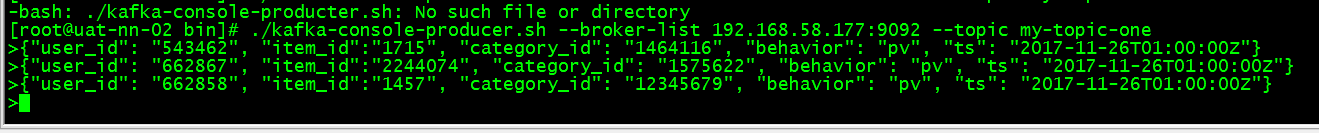

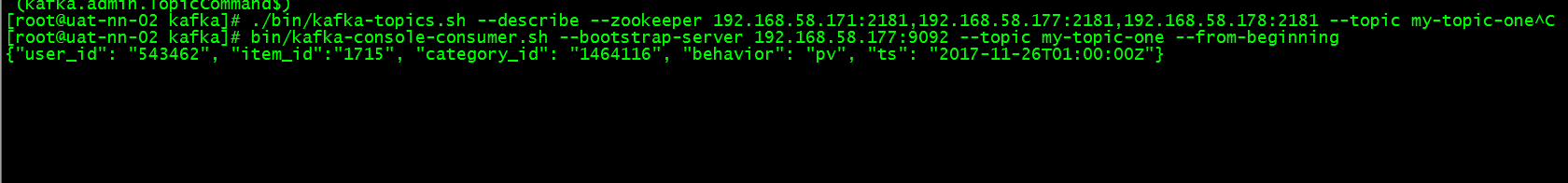

1.通过自建kafka的生产者来产生数据

/bin/kafka-console-producter.sh --broker-list 192.168.58.177:9092 --topic my_topic

数据

{"user_id": "543462", "item_id":"1715", "category_id": "1464116", "behavior": "pv", "ts": "2017-11-26T01:00:00Z"}

{"user_id": "662867", "item_id":"2244074", "category_id": "1575622", "behavior": "pv", "ts": "2017-11-26T01:00:00Z"}

{"user_id": "662868", "item_id":"1784", "category_id": "54123654", "behavior": "pv", "ts": "2017-11-26T01:00:00Z"}

{"user_id": "662854", "item_id":"1456", "category_id": "12345678", "behavior": "pv", "ts": "2017-11-26T01:00:00Z"}

{"user_id": "662858", "item_id":"1457", "category_id": "12345679", "behavior": "pv", "ts": "2017-11-26T01:00:00Z"}

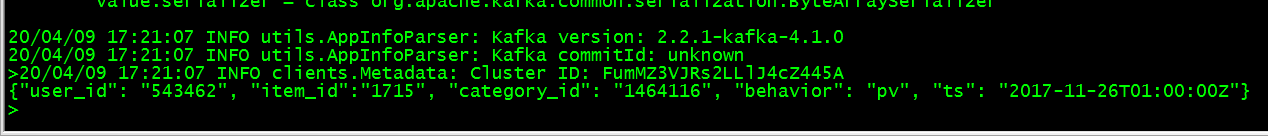

2.在kafka进行消费

/bin/kafka-console-consumer.sh --bootstrap-server 192.168.58.177:9092 --topic my_topic --partition 0 --offset 0

第一种:在SqlClient上进行测试

3.在Flink的sqlclient 创建表

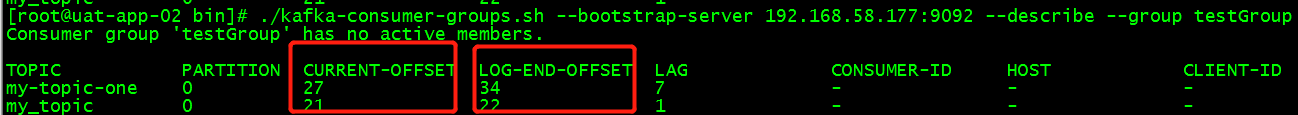

1. 这里需要注意,如果是指定Kafka的Offset消费,则参数 'connector.startup-mode' = 'specific-offsets',并且需要添加参数 'connector.specific-offsets' = 'partition:0,offset:27',这里需要指定分区以及从什么地方开始消费.下图中的topic消费到27,那么我们从27开始继续消费。

2. 查看目前topic消费的位置

./kafka-consumer-groups.sh --bootstrap-server 192.168.58.177:9092 --describe --group testGroup

CREATE TABLE user_log1 ( user_id VARCHAR, item_id VARCHAR, category_id VARCHAR, behavior VARCHAR, ts VARCHAR ) WITH ( 'connector.type' = 'kafka', 'connector.version' = 'universal', 'connector.topic' = 'my-topic-one', 'connector.startup-mode' = 'earliest-offset',-- optional: valid modes are "earliest-offset","latest-offset", "group-offsets",or "specific-offsets" 'connector.properties.group.id' = 'testGroup', 'connector.properties.zookeeper.connect' = '192.168.58.171:2181,192.168.58.177:2181,192.168.58.178:2181', 'connector.properties.bootstrap.servers' = '192.168.58.177:9092', 'format.type' = 'json' );

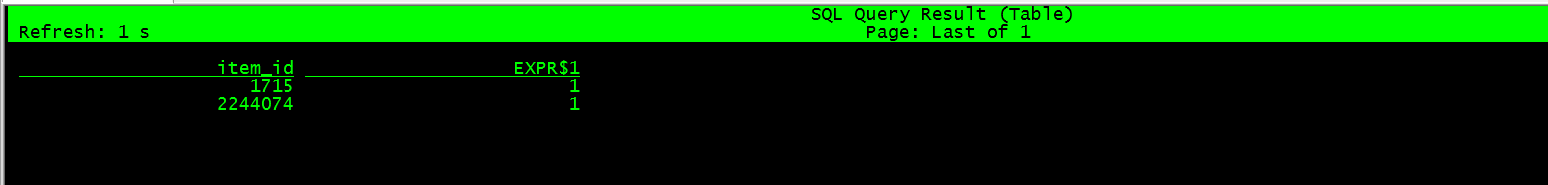

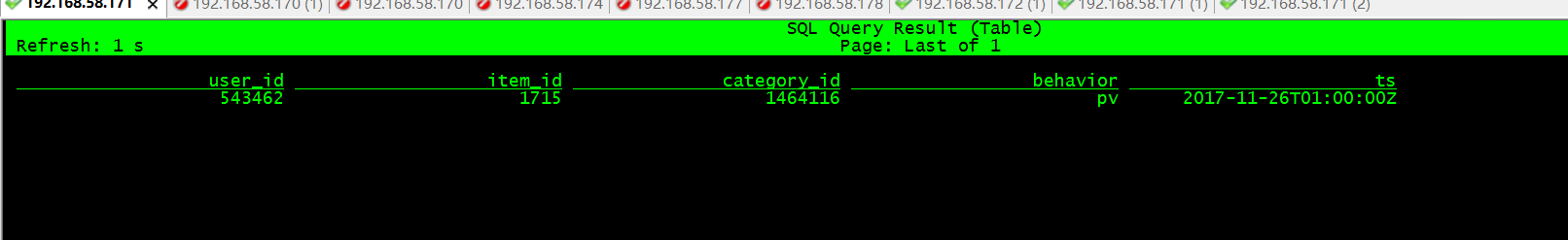

实时计算

select item_id,count(*) from user_log1 group by item_id;

第二种,在java 项目中进行测试

代码:

public static void main(String[] args) throws Exception { //创建flink运行环境 StreamExecutionEnvironment Env = StreamExecutionEnvironment.getExecutionEnvironment(); //创建tableEnvironment StreamTableEnvironment TableEnv = StreamTableEnvironment.create(Env); TableEnv.sqlUpdate("CREATE TABLE user_log1 ( " + " user_id VARCHAR, " + " item_id VARCHAR, " + " category_id VARCHAR, " + " behavior VARCHAR, " + " ts VARCHAR " + ") WITH ( " + " 'connector.type' = 'kafka', " + " 'connector.version' = 'universal', " + " 'connector.topic' = 'my-topic-one', " + " 'connector.startup-mode' = 'earliest-offset', " + //optional: valid modes are "earliest-offset","latest-offset", "group-offsets",or "specific-offsets" " 'connector.properties.group.id' = 'testGroup', " + " 'connector.properties.zookeeper.connect' = '192.168.58.171:2181,192.168.58.177:2181,192.168.58.178:2181', " + " 'connector.properties.bootstrap.servers' = '192.168.58.177:9092', " + " 'format.type' = 'json' " + ")" ) ; Table result=TableEnv.sqlQuery("select user_id,count(*) from user_log1 group by user_id"); //TableEnv.toAppendStream(result, Types.TUPLE(Types.INT,Types.LONG)).print(); TableEnv.toRetractStream(result,Types.TUPLE(Types.STRING,Types.LONG)) .print(); Env.execute("flink job"); }

执行结果: