Reposted from https://blog.csdn.net/a417930422/article/details/50663639

1 Investigating inconsistent consumption when Consumer instances in the same consumer group subscribe to different topics

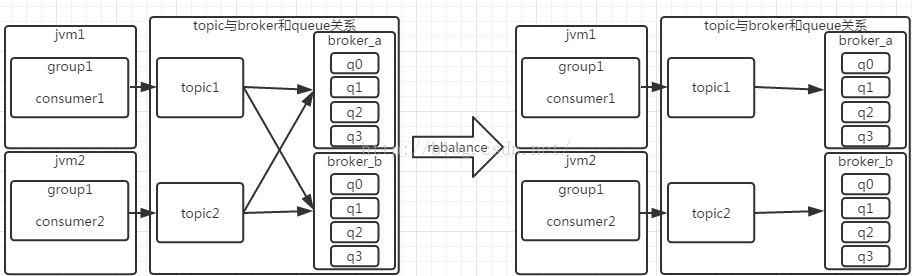

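Figure 1:

Background:

As shown in the left half of Figure 1, assume the setup is as follows:

broker: two, broker_a and broker_b

topic: two, topic1 and topic2; each topic has 4 queues on each broker

consumer: two, consumer1 and consumer2; both belong to group1 and run in separate JVMs. consumer1 subscribes to topic1 and consumer2 subscribes to topic2.

By default, topics map to queues as follows:

topic1 <-> broker_a q0~q3,

topic1 <-> broker_b q0~q3,

topic2 <-> broker_a q0~q3,

topic2 <-> broker_b q0~q3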

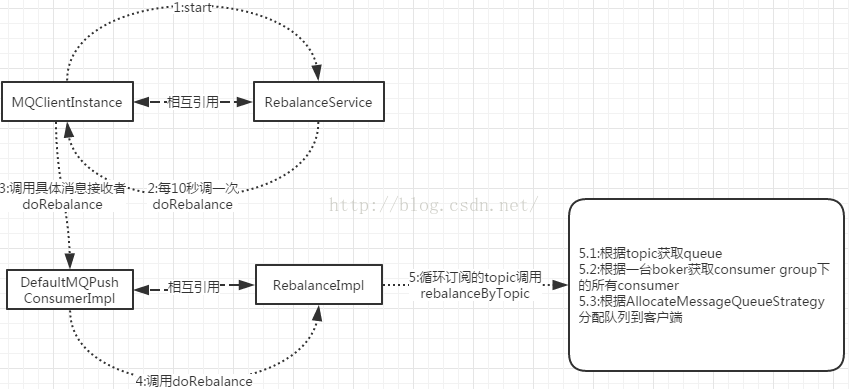

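The rebalance flow begins:

Suppose consumer1 starts first. After rebalancing, consumer1 ends up with the following assignment:

topic1 <-> broker_a q0~q3,

topic1 <-> broker_b q0~q3

Then consumer2 starts. Its rebalance proceeds as follows:

The key point is step 5.2: the list of consumers fetched for the group also contains consumer1, so the allocation strategy leaves consumer2 consuming only q0~q3 of topic2 on broker_b.

Likewise, consumer1 rebalances again and ends up consuming only q0~q3 of topic1 on broker_a. The final assignment is the one shown in the right half of Figure 1.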
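The assignment above can be reproduced with a small simulation. This is only a sketch: it assumes an even-split allocation strategy applied to a queue list ordered by broker and queue id, and the class `RebalanceSketch`, the helpers `allocate` and `queuesOf`, and the `topic@broker:qN` string format are hypothetical names made up for illustration, not RocketMQ APIs.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

public class RebalanceSketch {
    // Hypothetical helper: splits the ordered queue list evenly among the
    // ordered consumer IDs of the group and returns the slice for currentCid.
    static List<String> allocate(String currentCid, List<String> mqAll, List<String> cidAll) {
        List<String> result = new ArrayList<>();
        int index = cidAll.indexOf(currentCid);
        if (index < 0) {
            return result; // this consumer is not part of the group
        }
        int base = mqAll.size() / cidAll.size();
        int mod = mqAll.size() % cidAll.size();
        int size = index < mod ? base + 1 : base;       // first `mod` consumers get one extra queue
        int start = index * base + Math.min(index, mod);
        for (int i = 0; i < size; i++) {
            result.add(mqAll.get(start + i));
        }
        return result;
    }

    // Hypothetical helper: the 8 queues of a topic, 4 on each of the two brokers.
    static List<String> queuesOf(String topic) {
        List<String> queues = new ArrayList<>();
        for (String broker : Arrays.asList("broker_a", "broker_b")) {
            for (int q = 0; q < 4; q++) {
                queues.add(topic + "@" + broker + ":q" + q);
            }
        }
        return queues;
    }

    public static void main(String[] args) {
        // The group-wide consumer list contains both consumers,
        // even though each one subscribes to only one topic.
        List<String> cidAll = Arrays.asList("consumer1", "consumer2");
        System.out.println("consumer1 -> " + allocate("consumer1", queuesOf("topic1"), cidAll));
        System.out.println("consumer2 -> " + allocate("consumer2", queuesOf("topic2"), cidAll));
    }
}
```

Under these assumptions consumer1 receives only the broker_a queues of topic1 and consumer2 only the broker_b queues of topic2. The other half of each topic is reserved for a group member that never subscribes to it, so nobody consumes those queues.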

Warning logs on the consumer side:

Once the rebalance completes, the consumer intermittently prints the following exception:

14:22:04.005 [NettyClientPublicExecutor_3] WARN RocketmqClient - execute the pull request exception

com.alibaba.rocketmq.client.exception.MQBrokerException: CODE: 24 DESC: the consumer's subscription not exist

See https://github.com/alibaba/RocketMQ/issues/46 for further details.

at com.alibaba.rocketmq.client.impl.MQClientAPIImpl.processPullResponse(MQClientAPIImpl.java:500) ~[rocketmq-client-3.2.6.jar:na]

at com.alibaba.rocketmq.client.impl.MQClientAPIImpl.access$100(MQClientAPIImpl.java:78) ~[rocketmq-client-3.2.6.jar:na]

at com.alibaba.rocketmq.client.impl.MQClientAPIImpl$2.operationComplete(MQClientAPIImpl.java:455) ~[rocketmq-client-3.2.6.jar:na]

at com.alibaba.rocketmq.remoting.netty.ResponseFuture.executeInvokeCallback(ResponseFuture.java:62) [rocketmq-remoting-3.2.6.jar:na]

at com.alibaba.rocketmq.remoting.netty.NettyRemotingAbstract$2.run(NettyRemotingAbstract.java:262) [rocketmq-remoting-3.2.6.jar:na]

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:471) [na:1.7.0_51]

at java.util.concurrent.FutureTask.run(FutureTask.java:262) [na:1.7.0_51]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1145) [na:1.7.0_51]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:615) [na:1.7.0_51]

at java.lang.Thread.run(Thread.java:744) [na:1.7.0_51]

The broker side shows matching log entries:

2015-07-31 16:38:08 WARN ClientManageThread_4 - subscription changed, group: consumerTestGroup remove topic vrs-topic-test SubscriptionData [classFilterMode=false, topic=vrs-topic-test, subString=*, tagsSet=[], codeSet=[], subVersion=1438331853269]

2015-07-31 16:38:22 WARN PullMessageThread_29 - the consumer's subscription not exist, group: consumerTestGroup

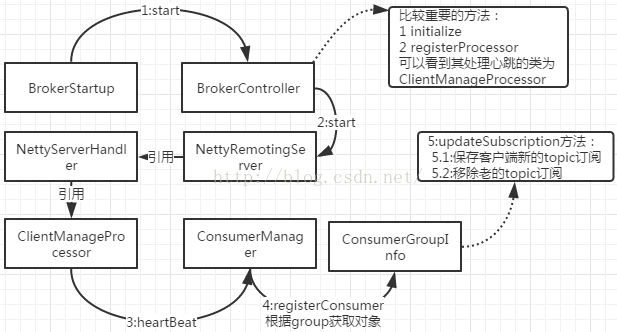

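Cause of the consumer and broker exception logs:

The consumer periodically sends heartbeats to all brokers; see MQClientInstance.startScheduledTask and MQClientInstance.sendHeartbeatToAllBroker(). By default a heartbeat is sent every 30 seconds.

What the heartbeat does:

It sends a HeartbeatData object to the broker, carrying the consumer group and topic information.

In terms of Figure 1, consumer1 sends something like group1, topic1

consumer2 sends group1, topic2

A walkthrough of the broker-side code turned up the following code:

The key steps are 4, 5.1 and 5.2. Consider a single broker:

Suppose consumer1 starts first. For broker_a the initial mapping is group1->topic1.

Once consumer2 has started and finished rebalancing, it sends group1->topic2.

In step 4, the existing group1->topic1 entry is looked up by group1.

In step 5.1, topic2 is added.

In step 5.2, topic1 is removed.

After its own rebalance, consumer1 goes through the same steps, so topic1 and topic2 keep getting removed in turn,

which is why the exception logs on both the consumer side and the broker side are printed continuously.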
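The flip-flop described in steps 4, 5.1 and 5.2 can be modeled in a few lines. This is a simplified sketch rather than the broker's actual code: it assumes the broker keeps one topic set per consumer group and overwrites it on every heartbeat. The class `SubscriptionFlipSketch`, its `table` map, and the methods `onHeartbeat` and `canPull` are hypothetical names used only for illustration.

```java
import java.util.Collections;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;

public class SubscriptionFlipSketch {
    // Assumed broker-side state: consumer group -> topics the group subscribes to.
    static final Map<String, Set<String>> table = new ConcurrentHashMap<>();

    // Models the heartbeat handling described above (not the real broker code).
    static void onHeartbeat(String group, Set<String> topics) {
        // Step 4: look up the existing entry by group name.
        Set<String> current = table.computeIfAbsent(group, g -> new HashSet<>());
        // Step 5.1: add topics carried by this heartbeat.
        current.addAll(topics);
        // Step 5.2: remove topics that this heartbeat does not carry.
        current.retainAll(topics);
    }

    // Models the pull-time check that yields "the consumer's subscription not exist".
    static boolean canPull(String group, String topic) {
        Set<String> current = table.get(group);
        return current != null && current.contains(topic);
    }

    public static void main(String[] args) {
        onHeartbeat("group1", Collections.singleton("topic1")); // heartbeat from consumer1
        onHeartbeat("group1", Collections.singleton("topic2")); // heartbeat from consumer2
        System.out.println("consumer1 can pull topic1: " + canPull("group1", "topic1")); // false
        onHeartbeat("group1", Collections.singleton("topic1")); // next heartbeat from consumer1
        System.out.println("consumer2 can pull topic2: " + canPull("group1", "topic2")); // false
    }
}
```

Because the state is keyed by group only, whichever consumer sent the most recent heartbeat wins, and the other consumer's pull requests fail until its own next heartbeat arrives.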