还没有修改hosts,请先按前文修改。

还没安装java的,请按照前文配置。

(1)增加用户并设立公钥:

sudo addgroup hadoop

sudo adduser --ingroup hadoop hduser

su - hduser

cat $HOME/.ssh/id_rsa.pub >> $HOME/.ssh/authorized_keys

ssh localhost

exit

(2)把编译完的hadoop复制到/usr/local目录,并修改目录权限

cp –r /root/hadoop-2.4.0-src/hadoop-dist/target/hadoop-2.4.0 /usr/local

cd /usr/local

chown -R hduser:hadoop hadoop-2.4.0

(3)关闭ipv6

su

vi /etc/sysctl.conf

加入:

net.ipv6.conf.all.disable_ipv6 = 1

net.ipv6.conf.default.disable_ipv6 = 1

net.ipv6.conf.lo.disable_ipv6 = 1

重启:

reboot

测试:

cat /proc/sys/net/ipv6/conf/all/disable_ipv6

输出1表示ipv6已关闭。

(4)修改启动配置文件~/.bashrc

su hduser

vi ~/.bashrc

加入以下代码:

JAVA_HOME=/usr/lib/jvm/jdk1.7.0_55

JRE_HOME=${JAVA_HOME}/jre

export ANDROID_JAVA_HOME=$JAVA_HOME

export CLASSPATH=.:${JAVA_HOME}/lib:$JRE_HOME/lib:${JAVA_HOME}/lib/tools.jar:$CLASSPATH

export JAVA_PATH=${JAVA_HOME}/bin:${JRE_HOME}/bin

export JAVA_HOME;

export JRE_HOME;

export CLASSPATH;

HOME_BIN=~/bin/

export PATH=${PATH}:${JAVA_PATH}:${HOME_BIN};

export PATH=${JAVA_HOME}/bin:$PATH

export HADOOP_HOME=/usr/local/hadoop-2.4.0

unalias fs &> /dev/null

alias fs="hadoop fs"

unalias hls &> /dev/null

alias hls="fs -ls"

lzohead () {

hadoop fs -cat $1 | lzop -dc | head -1000 | less

}

export PATH=$PATH:$HADOOP_HOME/bin

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

#export HADOOP_OPTS=-Djava.net.preferIPv4Stack=true

export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib"

export HADOOP_MAPRED_HOME=$HADOOP_HOME

export HADOOP_COMMON_HOME=$HADOOP_HOME

export HADOOP_HDFS_HOME=$HADOOP_HOME

export YARN_HOME=$HADOOP_HOME

export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

使修改生效:

source ~/.bashrc

(5)在hadoop目录中创建datanode和namenode目录

mkdir -p $HADOOP_HOME/yarn/yarn_data/hdfs/namenode

mkdir -p $HADOOP_HOME/yarn/yarn_data/hdfs/datanode

(6)修改Hadoop配置参数

为了方便可以 cd $HADOOP_CONF_DIR

在$HADOOP_HOME下直接执行:

vi etc/hadoop/hadoop-env.sh

加入JAVA_HOME变量

export JAVA_HOME=/usr/lib/jvm/jdk1.7.0_55

vi etc/hadoop/yarn-site.xml

加入以下信息:

<property> <name>yarn.nodemanager.aux-services</name> <value>mapreduce_shuffle</value> </property> <property> <name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name> <value>org.apache.hadoop.mapred.ShuffleHandler</value> </property>

建立hadoop.tmp.dir

sudo mkdir -p /app/hadoop/tmp

(如果出错:hduser is not in the sudoers file. This incident will be reported.

su

vi /etc/sudoers

加入hduser ALL=(ALL) ALL

)

#sudo chown hduser:hadoop /app/hadoop/tmp

sudo chown -R hduser:hadoop /app

sudo chmod 750 /app/hadoop/tmp

cd $HADOOP_HOME

vi etc/hadoop/core-site.xml

<property> <name>hadoop.tmp.dir</name> <value>/app/hadoop/tmp</value> <description>A base for other temporary directories.</description> </property> <property> <name>fs.default.name</name> <value>hdfs://localhost:9000</value> </property>

vi etc/hadoop/hdfs-site.xml

<property> <name>dfs.replication</name> <value>1</value> </property> <property> <name>dfs.namenode.name.dir</name> <value>file:/usr/local/hadoop-2.4.0/yarn/yarn_data/hdfs/namenode</value> </property> <property> <name>dfs.datanode.data.dir</name> <value>file:/usr/local/hadoop-2.4.0/yarn/yarn_data/hdfs/datanode</value> </property>

vi etc/hadoop/mapred-site.xml

<property> <name>mapreduce.framework.name</name> <value>yarn</value> </property>

好

(7)格式化namenode节点:

bin/hadoop namenode –format

(8)运行Hadoop 示例

sbin/hadoop-daemon.sh start namenode

sbin/hadoop-daemon.sh start datanode

sbin/hadoop-daemon.sh start secondarynamenode

sbin/yarn-daemon.sh start resourcemanager

sbin/yarn-daemon.sh start nodemanager

sbin/mr-jobhistory-daemon.sh start historyserver

(9)监测运行情况:

jps

netstat –ntlp

http://localhost:50070/ for NameNode

http://localhost:8088/cluster for ResourceManager

http://localhost:19888/jobhistory for Job History Server

(10)出错处理:

log文件存放目录:

cd $HADOOP_HOME/logs

或进入namenode网页查看log

http://192.168.85.136:50070/logs/hadoop-hduser-datanode-ubuntu.log

1.错误:

出现DataNode启动后jps进程消失,阅读以下网页查看log,

http://192.168.85.136:50070/logs/hadoop-hduser-datanode-ubuntu.log

错误信息如下:

2014-07-07 03:03:41,446 FATAL org.apache.hadoop.hdfs.server.datanode.DataNode: Initialization failed for block pool Block pool <registering> (Datanode Uuid unassigned) service to localhost/127.0.0.1:9000

发现问题:./bin/hadoop namenode –format重新创建一个namenodeId,而存放datanode数据的tmp/dfs/data目录下包含了上次format下的 id,namenode format清空了namenode下的数据,但是没有清除datanode下的数据,导致启动时失败,所要做的就是每次fotmat前,清空tmp一下 的所有目录.

参考:http://stackoverflow.com/questions/22316187/datanode-not-starts-correctly

解决办法:

rm -rf /usr/local/hadoop-2.4.0/yarn/yarn_data/hdfs/*

./bin/hadoop namenode –format

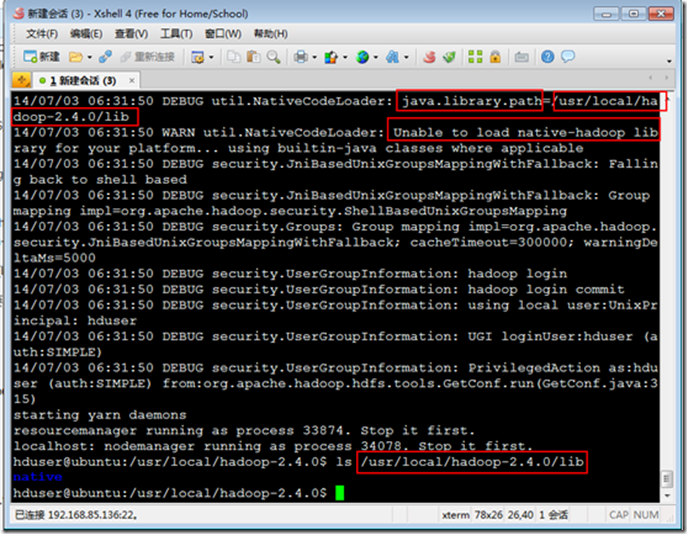

2.警告调试:

14/07/03 06:13:25 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

调试:

export HADOOP_ROOT_LOGGER=DEBUG,console

hadoop fs -text /test/data/origz/access.log.gz

解决办法:

cp /usr/local/hadoop-2.4.0/lib/native/* /usr/local/hadoop-2.4.0/lib/

(11)创建一个文本文件,把它放进Hdfs中:

mkdir in

vi in/file

Hadoop is fast

Hadoop is cool

bin/hadoop dfs -copyFromLocal in/ /in

(12)运行wordcount示例程序:

bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.4.0.jar wordcount /in /out

(13)查看运行结果:

bin/hadoop fs -ls /out

bin/hadoop dfs -cat /out/part-r-00000

或者也可以去namenode网站查询

http://localhost:50070/dfshealth.jsp

(14)关闭demo:

sbin/hadoop-daemon.sh stop namenode

sbin/hadoop-daemon.sh stop datanode

sbin/hadoop-daemon.sh stop secondarynamenode

sbin/yarn-daemon.sh stop resourcemanager

sbin/yarn-daemon.sh stop nodemanager

sbin/mr-jobhistory-daemon.sh stop historyserver

这篇文章参考了两篇非常不错的博客文章,现列在下方,以便参考:

http://www.thecloudavenue.com/2012/01/getting-started-with-nextgen-mapreduce.html

http://www.michael-noll.com/tutorials/running-hadoop-on-ubuntu-linux-single-node-cluster/