https://gameinstitute.qq.com/community/detail/119467

http://blog.atelier39.org/cg/555.html

http://www.reedbeta.com/blog/quadrilateral-interpolation-part-1/#projective-interpolation

In computer graphics we build models out of triangles, and we interpolate texture coordinates (and other vertex attributes) across surfaces using a method appropriate for triangles: linear interpolation, which allows each triangle in 3D model space to be mapped to an arbitrary triangle in texture space. While linear interpolation works well most of the time, there are situations in which it doesn’t suit our needs—for example, when mapping a square texture to a quadrilateral: using linear interpolation on each of the quad’s two triangles produces an ugly seam. In this article series, I’m going to talk about interpolation methods that allow arbitrary convex quadrilaterals to be texture-mapped without a seam along the diagonal.

First of all, what’s the problem with quads and the usual linear UV interpolation? Let me illustrate, with the help of this brick texture from CgTextures:

Linear interpolation allows for arbitrary affine transforms to be applied to a texture image. This includes any combination of translation, scaling, rotation, and shearing:

As you can see, these transforms work perfectly well on a quad; you can’t see the seam between the two triangles. However, if I move one of the quad’s verts so that it’s no longer a parallelogram, you can see the seam:

This happened because the triangles are still congruent in UV space (each covers half the texture, as before), but they are no longer congruent in model space. The affine transforms for the two triangles are no longer equal; although the UV mapping is still continuous along the seam, its derivatives (the tangent and bitangent vectors) are discontinuous there, resulting in ugliness.
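To make the derivative discontinuity concrete, here is a minimal Python sketch that computes the UV Jacobian (the partial derivatives of u and v with respect to x and y) for each of a quad's two triangles. The function names, the 2D vertex layout, and the unit-square UV assignment are assumptions made for illustration; they do not come from the article.

```python
def uv_jacobian(tri_pos, tri_uv):
    """Return (du/dx, du/dy, dv/dx, dv/dy) of the affine UV map of one 2D triangle."""
    (ax, ay), (bx, by), (cx, cy) = tri_pos
    (ua, va), (ub, vb), (uc, vc) = tri_uv
    # Edge vectors in model space and the matching UV differences.
    e1x, e1y = bx - ax, by - ay
    e2x, e2y = cx - ax, cy - ay
    du1, dv1 = ub - ua, vb - va
    du2, dv2 = uc - ua, vc - va
    det = e1x * e2y - e2x * e1y  # twice the signed triangle area
    # J = [UV differences] * inverse([edge vectors])
    return (
        (du1 * e2y - du2 * e1y) / det,
        (du2 * e1x - du1 * e2x) / det,
        (dv1 * e2y - dv2 * e1y) / det,
        (dv2 * e1x - dv1 * e2x) / det,
    )


def quad_jacobians(quad, uvs=((0, 0), (1, 0), (1, 1), (0, 1))):
    """Jacobians of triangles (0, 1, 2) and (0, 2, 3) of a quad."""
    tri_a = uv_jacobian([quad[0], quad[1], quad[2]], [uvs[0], uvs[1], uvs[2]])
    tri_b = uv_jacobian([quad[0], quad[2], quad[3]], [uvs[0], uvs[2], uvs[3]])
    return tri_a, tri_b


parallelogram = [(0, 0), (2, 0), (3, 1), (1, 1)]
skewed = [(0, 0), (2, 0), (4, 2), (1, 1)]  # one vertex moved

print(quad_jacobians(parallelogram))  # the two tuples match
print(quad_jacobians(skewed))         # the two tuples differ
```

For the parallelogram both triangles report the same derivatives, so the texture crosses the diagonal smoothly. Once a vertex is moved, the derivatives disagree, and that disagreement is what shows up as the seam.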

Ordinarily, when building irregularly-shaped geometry like this, you wouldn’t assign UVs this way. For example, a level designer creating an irregularly-shaped wall piece would apply a single UV projection to the whole wall, giving all the triangles the same affine transform. This implies that not all of the texture is seen: it’s clipped and cropped so the shape of the mesh in UV space matches its shape in model space, preventing seams.

But what if we really do want to get a texture onto an arbitrary (convex) quadrilateral, without cropping out part of it?

Affine transforms allow arbitrary triangle-to-triangle mappings: you can create an affine mapping between any two triangles, no matter how different their shapes. This is just what happens when you apply a texture to a triangle: by setting up UVs, you implicitly create an affine map between model space and UV space. When rendering, the rasterizer evaluates this mapping to find the appropriate texture sample point for each pixel.
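As a rough illustration of the mapping the rasterizer evaluates, the following Python sketch maps a 2D point inside a triangle to its UV through barycentric weights, which is one way to express the affine map defined by three vertices and their UVs. The helper names `barycentric` and `affine_uv` are hypothetical, and a real rasterizer works per pixel in hardware rather than like this.

```python
def barycentric(p, a, b, c):
    """Barycentric weights (wa, wb, wc) of 2D point p relative to triangle a, b, c."""
    (px, py), (ax, ay), (bx, by), (cx, cy) = p, a, b, c
    det = (bx - ax) * (cy - ay) - (cx - ax) * (by - ay)
    # Cramer's rule on p - a = wb * (b - a) + wc * (c - a).
    wb = ((px - ax) * (cy - ay) - (cx - ax) * (py - ay)) / det
    wc = ((bx - ax) * (py - ay) - (px - ax) * (by - ay)) / det
    return 1.0 - wb - wc, wb, wc


def affine_uv(p, tri_pos, tri_uv):
    """Linearly interpolate the per-vertex UVs at point p."""
    wa, wb, wc = barycentric(p, *tri_pos)
    u = wa * tri_uv[0][0] + wb * tri_uv[1][0] + wc * tri_uv[2][0]
    v = wa * tri_uv[0][1] + wb * tri_uv[1][1] + wc * tri_uv[2][1]
    return u, v


# Any model-space triangle can be paired with any UV-space triangle.
tri_pos = [(0.0, 0.0), (4.0, 0.0), (1.0, 3.0)]
tri_uv = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
print(affine_uv((4.0, 0.0), tri_pos, tri_uv))  # a vertex maps to its own UV
```

Because the weights are linear in the point's position, the resulting map is affine, and it is fully determined by the three vertex-to-UV pairs.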

Geometrically, as long as the quad remains a parallelogram, the affine transforms for its two triangles are equal and you can’t see the seam. But when the quad isn’t a parallelogram, affine transforms and linear interpolation cannot smoothly map the whole texture to the quad.

To solve this problem, we must leave the world of linear interpolation and affine transforms behind! There are more-sophisticated interpolation methods that can help here, each with its own pros and cons. In this article I’m going to talk about one in particular, called projective interpolation. Later articles in this series will cover alternative methods.

Projective Interpolation

Just as linear interpolation is based on affine transforms, projective interpolation is based on the family of projective transforms. These transforms are very familiar in 3D graphics: they’re exactly the same ones used to map a 3D scene onto a 2D image, simulating perspective! But how can this help us with interpolation?

The intuition is that if you have a 3D scene consisting of a single quad, as you move the camera around and look at it from different positions, its projected shape on the 2D screen will be, in general, a different quad. In fact, it turns out you can map any convex quad to any other convex quad this way, by finding an appropriate camera setup.

Moreover, we know how to interpolate UVs in such a way that a 3D quad doesn’t show a seam when it’s projected to the 2D screen; such perspective-correct interpolation is done all the time. This suggests that we should be able to texture-map a quad without a seam by using the same math used for perspective-correct interpolation. And indeed this works:

The entire texture is now warped to the irregular shape of the quad, with no visible seam!

However, this image is a little odd: it doesn’t really look like a 2D quad anymore. It actually looks a lot like a wall in a 3D engine, with the camera turned to the side so that the wall recedes into the distance. That’s the nature of projective interpolation. Because it uses the same math that’s involved in 3D-to-2D perspective, this method gives results that tend to look like a 3D scene, even though the quad is completely 2D.

With that caveat in mind, here’s how you implement projective interpolation.

It’s well known that to do perspective-correct interpolation for a triangle, you must calculate $u/z$, $v/z$, and $1/z$ at each vertex, interpolate those linearly in screen space, then calculate $u = (u/z) / (1/z)$ and $v = (v/z) / (1/z)$ at each pixel. GPU rasterizers do this automatically, behind the scenes, for every interpolated attribute. We use the same idea here: our vertex shader will output $uq$, $vq$, and $q$, the GPU will interpolate those quantities linearly in model space, then we’ll divide by $q$ at each pixel. Here, $q$ is a per-vertex value that plays the role of $1/z$. However, this $q$ will be determined by the shape of the quadrilateral. It’s a made-up “depth” chosen to give the right projective transform to eliminate the seam.
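The interpolate-then-divide idea can be sketched in a few lines of Python for a single attribute along one edge. This is an illustrative model only: the function names are made up here, and `t` is assumed to be the linear interpolation parameter between the two endpoints.

```python
def lerp(a, b, t):
    """Plain linear interpolation between a and b."""
    return (1.0 - t) * a + t * b


def projective_u(u0, q0, u1, q1, t):
    """Interpolate u*q and q linearly, then divide to recover u."""
    uq = lerp(u0 * q0, u1 * q1, t)
    q = lerp(q0, q1, t)
    return uq / q


def perspective_correct_u(u0, z0, u1, z1, t):
    """Classic perspective-correct interpolation: the same math with q = 1/z."""
    return projective_u(u0, 1.0 / z0, u1, 1.0 / z1, t)


# Halfway along an edge that runs from depth 1 to depth 3:
print(perspective_correct_u(0.0, 1.0, 1.0, 3.0, 0.5))  # 0.25, not 0.5
```

When both endpoints share the same `q`, the division cancels it and the result is ordinary linear interpolation. Only the ratio between the `q` values bends the mapping.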

The vertex shader and pixel shader for projective interpolation will look something like this:

    float4x4 g_matLocalToClip;
    Texture2D g_texColor;
    SamplerState g_ss;

    struct VertexData
    {
        float3 pos : POSITION;
        float3 uvq : TEXCOORD0;    // (u*q, v*q, q), precomputed per vertex
    };

    void Vs (
        VertexData vtx,
        out float3 uvq : TEXCOORD0,
        out float4 posClip : SV_Position)
    {
        posClip = mul(float4(vtx.pos, 1.0), g_matLocalToClip);
        uvq = vtx.uvq;    // pass through unchanged; the rasterizer interpolates it
    }

    void Ps (
        float3 uvq : TEXCOORD0,
        out half4 o_rgba : SV_Target)
    {
        // Divide by the interpolated q to recover the final UV.
        o_rgba = g_texColor.Sample(g_ss, uvq.xy / uvq.z);
    }

Here, the important parts are: (a) the UVs are float3 instead of the usual float2, with $q$ in the third component; and (b) the pixel shader divides by $q$ before sampling the texture. The uvq values are precomputed and stored in the vertex data, so the vertex shader just passes them through.

The real trick here is how to calculate the right $q$ value for each vertex of the quad. This is fairly subtle (at least, it took me a while to work it out!), and I’ll spare you the derivation. To find the $q$s, first find the intersection point of the two diagonals of the quad (e.g., intersect one diagonal with the plane defined by the other diagonal and the quad’s normal vector), and calculate the distances from this point to each of the four vertices. I’ll call those distances $d_0 \ldots d_3$:

Then, each $q$ is computed using the $d$s for that vertex and the opposite one, as follows:

$$uvq_i = \mathtt{float3}(u_i, v_i, 1) \times \frac{d_i + d_{i+2}}{d_{i+2}} \qquad (i = 0 \ldots 3)$$

Here the index $i+2$ wraps around modulo 4, so it always refers to the vertex opposite vertex $i$. Store those values in your vertex data, and you’ll have projective interpolation!
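The recipe above can be sketched on the CPU side roughly as follows. This Python version assumes a 2D quad with vertices listed in order around its boundary, so the diagonals can be intersected directly instead of using the plane construction needed in 3D. The names `diagonal_intersection`, `compute_uvq`, and `sample_uv` are illustrative and not taken from the article.

```python
import math


def diagonal_intersection(p0, p1, p2, p3):
    """Intersection of diagonals p0-p2 and p1-p3 of a convex 2D quad."""
    ax, ay = p2[0] - p0[0], p2[1] - p0[1]  # direction of diagonal p0 -> p2
    bx, by = p3[0] - p1[0], p3[1] - p1[1]  # direction of diagonal p1 -> p3
    cx, cy = p1[0] - p0[0], p1[1] - p0[1]
    # Solve p0 + s*a = p1 + t*b for s using 2D cross products.
    s = (cx * by - cy * bx) / (ax * by - ay * bx)
    return (p0[0] + s * ax, p0[1] + s * ay)


def compute_uvq(positions, uvs):
    """Per-vertex (u*q, v*q, q) with q_i = (d_i + d_{i+2}) / d_{i+2}."""
    center = diagonal_intersection(*positions)
    d = [math.dist(center, p) for p in positions]
    uvq = []
    for i in range(4):
        opposite = (i + 2) % 4
        q = (d[i] + d[opposite]) / d[opposite]
        u, v = uvs[i]
        uvq.append((u * q, v * q, q))
    return uvq


def sample_uv(uvq_a, uvq_b, t):
    """Mimic the GPU: interpolate uvq linearly, then divide by q."""
    uq, vq, q = ((1.0 - t) * a + t * b for a, b in zip(uvq_a, uvq_b))
    return uq / q, vq / q


# A trapezoid with the full unit-square texture assigned to its corners.
quad = [(0.0, 0.0), (4.0, 0.0), (3.0, 2.0), (1.0, 2.0)]
uvs = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
uvq = compute_uvq(quad, uvs)
print([round(q, 6) for (_, _, q) in uvq])
```

For this trapezoid the diagonals cross two thirds of the way from vertex 0 to vertex 2, and `sample_uv(uvq[0], uvq[2], 2 / 3)` yields the texture center, which is what a projective map should do: the intersection of the quad's diagonals lands on the intersection of the texture's diagonals. On a parallelogram all four `q` values are equal, so the division cancels them and the result reduces to ordinary linear interpolation.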

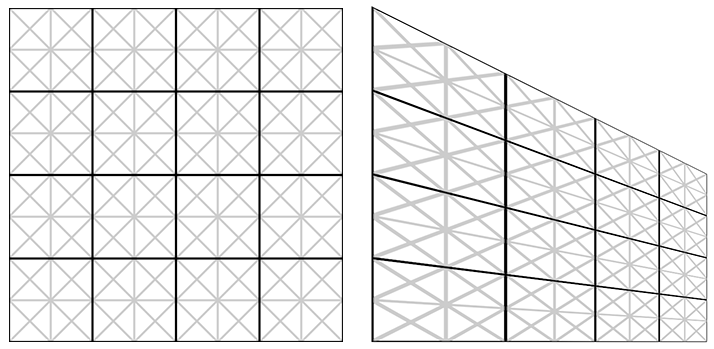

Projective interpolation does the job we set out to do—it maps a texture smoothly onto an arbitrary convex quad. However, there are some potentially-problematic oddities with this method. As we saw above, it can generate results that appear 3D even when they’re not supposed to. This is related to how projective interpolation alters the spacing of points along a line nonuniformly, as can be seen by applying the interpolation to a grid:

The vertical grid lines, which are evenly spaced in the texture, are no longer evenly spaced after interpolation; they’re closer together at one end of the quad and farther apart at the other. Again, this is a consequence of “perspective” scaling things down when they’re “farther” from the camera. Unfortunately, this nonuniform spacing is completely dependent on the shape of the quad, and won’t generally match when two quads share an edge:
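The uneven spacing can be reproduced numerically. The sketch below assumes a single edge whose texture coordinate runs from 0 to 1 while $q$ goes from `q0` to `q1`, and solves for where along the edge each evenly spaced texture line ends up. The function name and the sample `q` values are illustrative assumptions.

```python
def edge_fraction_for_u(u, q0, q1):
    """Fraction t along an edge (u=0, q=q0) -> (u=1, q=q1) where the texture coordinate is u.

    Derived by inverting u = t*q1 / ((1 - t)*q0 + t*q1).
    """
    return u * q0 / (u * q0 + (1.0 - u) * q1)


# Five evenly spaced texture lines on an edge whose q falls from 3 to 1.5.
lines = [edge_fraction_for_u(k / 4.0, 3.0, 1.5) for k in range(5)]
gaps = [b - a for a, b in zip(lines, lines[1:])]
print([round(t, 3) for t in lines])
print([round(g, 3) for g in gaps])  # gaps shrink toward the low-q ("far") end
```

The gaps depend only on the ratio `q0 / q1`, which in turn comes from the shape of the quad. Two quads that share an edge will generally produce different ratios along that edge.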

This is a lot like the original problem we were trying to solve: two adjoining triangles with different shapes would have different affine transforms, producing a seam. Here, two adjoining quads with different shapes have different projective transforms, producing a seam. If you’re trying to use this in a situation where you have multiple quads that need to join smoothly, this problem is pretty much a deal-breaker for projective interpolation.

In future installments of this series, I’ll talk about alternatives to projective interpolation that can also smoothly map a texture onto a quad, but with different features and caveats.