Hadoop: Setting Up a Cluster

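I keep one virtual machine just for cloning. It already has the Java environment set up and is configured so that the firewall does not start at boot.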
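For readers building a similar template, here is a sketch of stopping the firewall and keeping it off at boot. It assumes a CentOS 6 style system with the iptables service; the post does not name the distribution, although the `service` and `init 6` commands used below suggest SysV init. The `run` wrapper and its `DRY_RUN=1` switch are hypothetical helpers added for previewing the commands.

```shell
#!/bin/sh
# Sketch, assuming CentOS/RHEL 6 with the iptables service (not stated in the post).
# run: execute a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

disable_firewall() {
  run service iptables stop     # stop the firewall now
  run chkconfig iptables off    # keep it from starting at boot
}
```

Run `disable_firewall` as root on the template VM before cloning; `DRY_RUN=1 disable_firewall` only prints the two commands.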

Preparation:

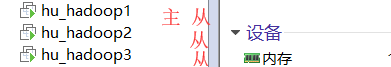

1. My three virtual machines:

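hu_hadoop1 (master + worker): NameNode + DataNode + ResourceManager (and, because it is also listed in the slaves file below, a NodeManager)

hu_hadoop2 (worker): DataNode + NodeManager + SecondaryNameNode (the "secretary" that periodically checkpoints the NameNode's metadata)

hu_hadoop3 (worker): DataNode + NodeManager

So there is one master and three workers, with hu_hadoop1 acting as both.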
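Once the cluster is running, this layout can be checked with `jps`, which prints one `<pid> <ClassName>` line per Java process. The sketch below encodes the expected daemons per host and lists whatever is missing from a captured `jps` output. It uses the hyphenated hostnames the post switches to later and assumes hu-hadoop1 also runs a NodeManager because it appears in `slaves`; `expected_daemons` and `missing_daemons` are hypothetical helper names.

```shell
#!/bin/sh
# Expected daemons per host, following the role list above.
# Assumption: hu-hadoop1 also runs a NodeManager, since it is listed in slaves.
expected_daemons() {
  case "$1" in
    hu-hadoop1) echo "NameNode DataNode ResourceManager NodeManager" ;;
    hu-hadoop2) echo "DataNode NodeManager SecondaryNameNode" ;;
    hu-hadoop3) echo "DataNode NodeManager" ;;
    *) return 1 ;;
  esac
}

# missing_daemons HOST JPS_OUTPUT: print each expected daemon that is not a
# process name in JPS_OUTPUT ("<pid> <name>" per line, as jps prints it).
missing_daemons() {
  md_host=$1
  md_jps=$2
  for md_d in $(expected_daemons "$md_host"); do
    # compare the second column exactly, so NameNode does not match SecondaryNameNode
    if ! printf '%s\n' "$md_jps" | awk -v d="$md_d" '$2 == d { found = 1 } END { exit !found }'; then
      echo "$md_d"
    fi
  done
}
```

For example, `missing_daemons hu-hadoop2 "$(ssh hu-hadoop2 jps)"` prints nothing when the DataNode, NodeManager and SecondaryNameNode are all up.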

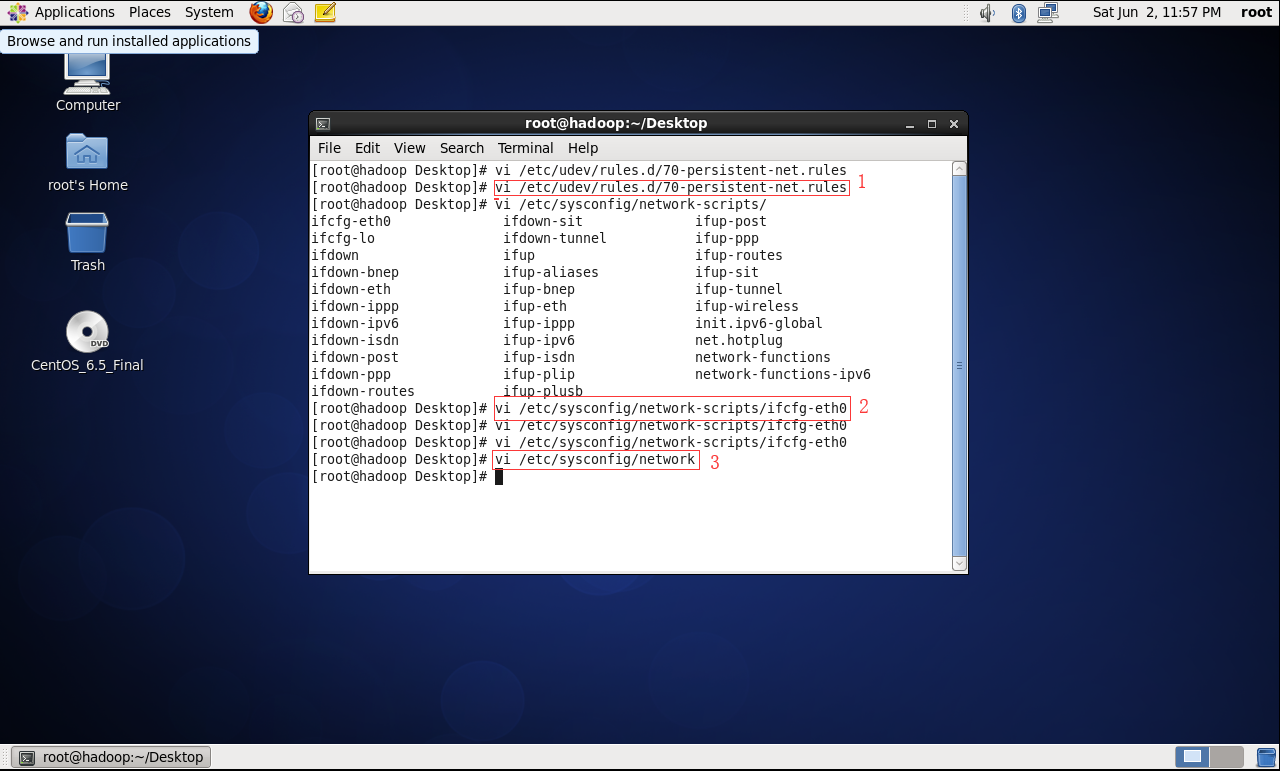

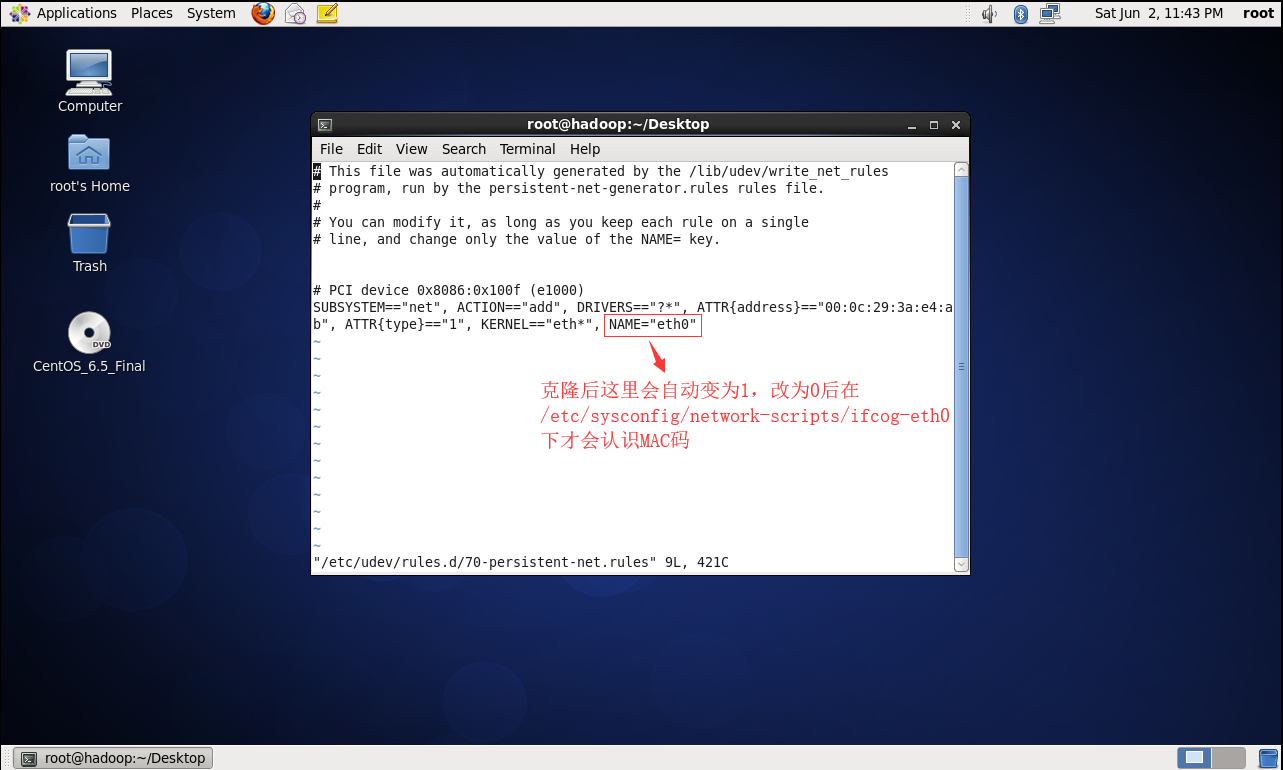

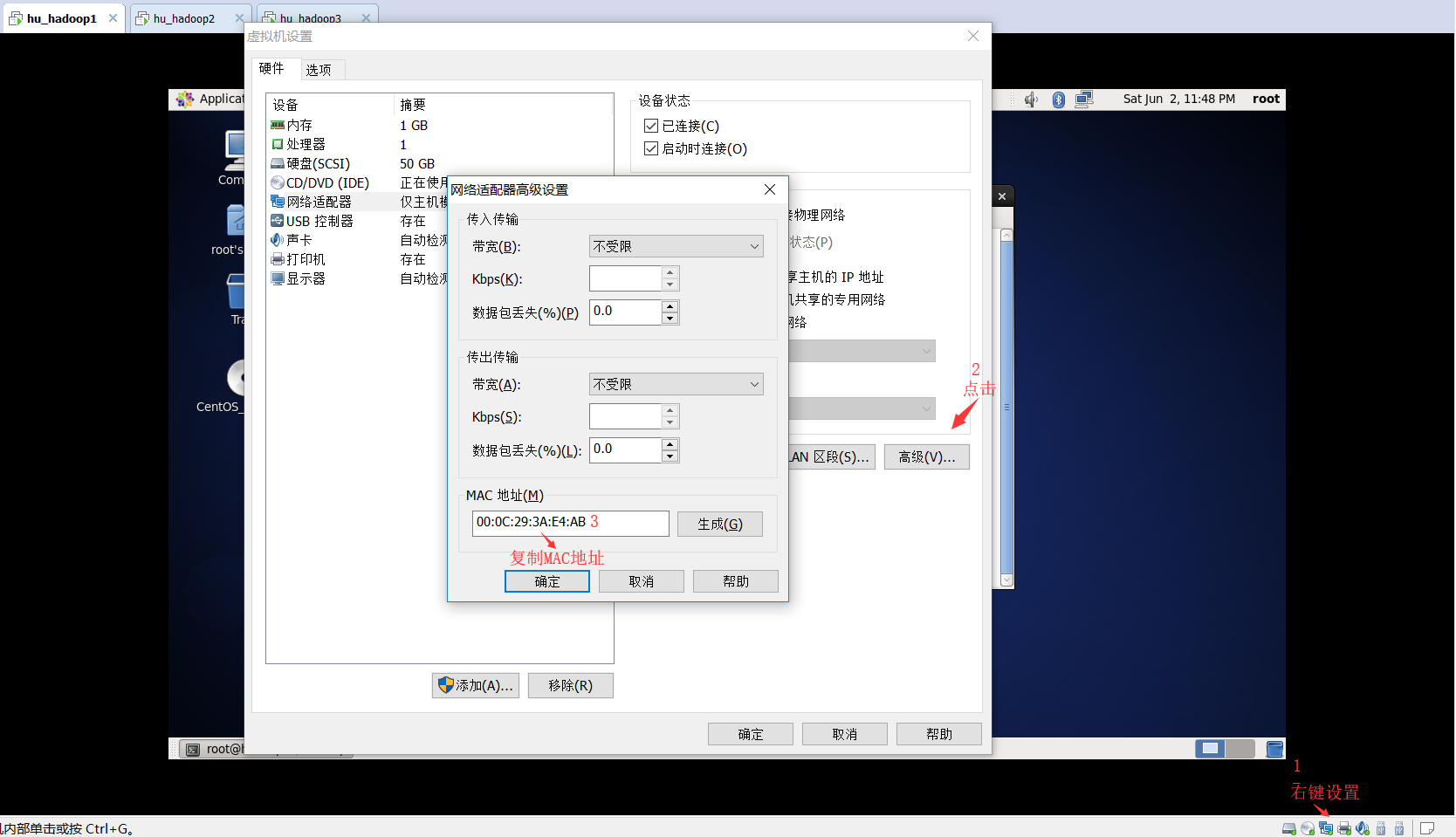

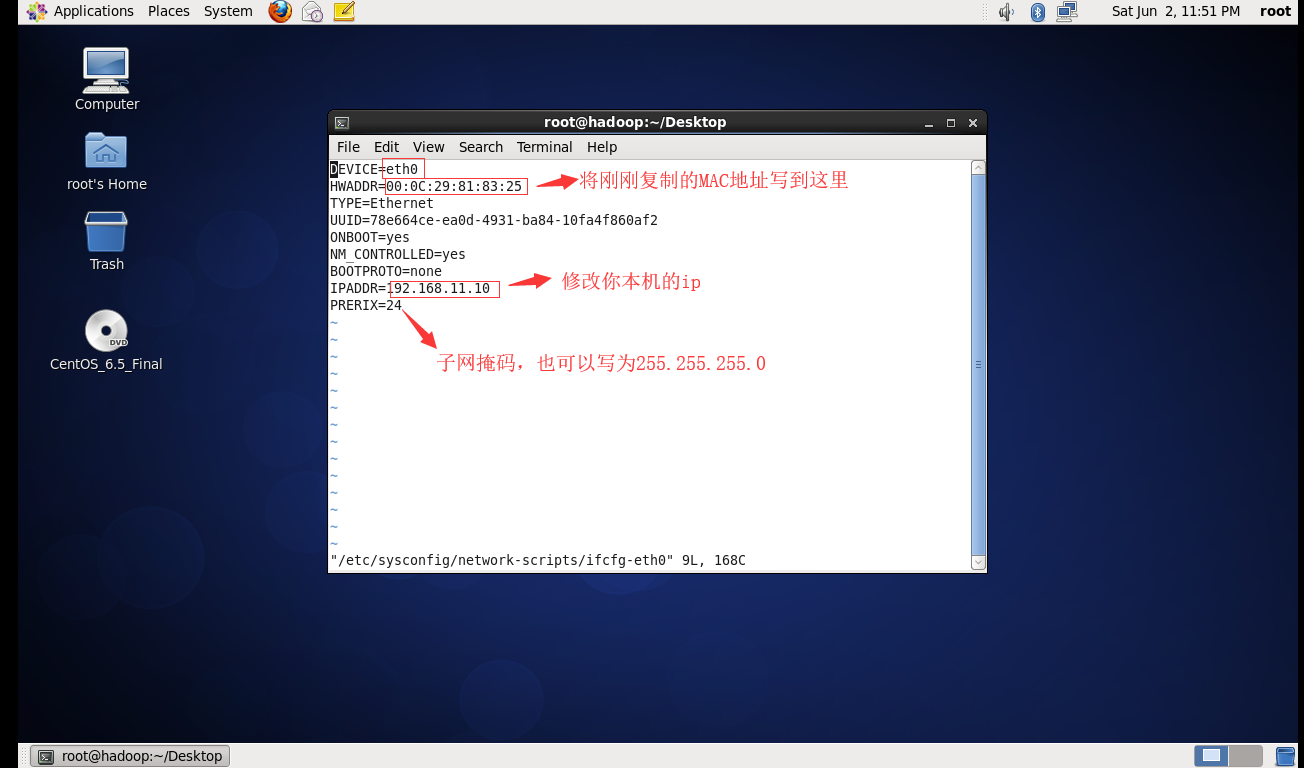

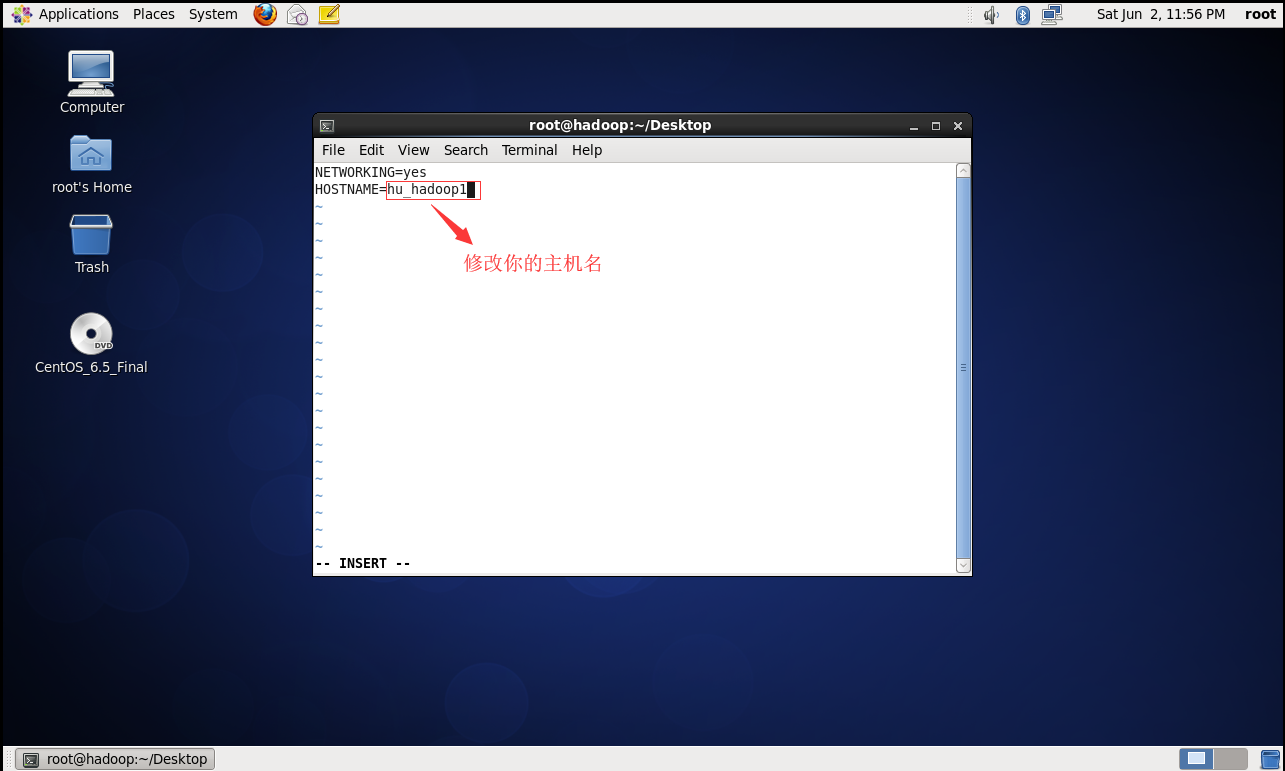

2. Boot the machines and edit their configuration files:

When finished, reboot with `init 6`. Apply the same configuration on every machine.

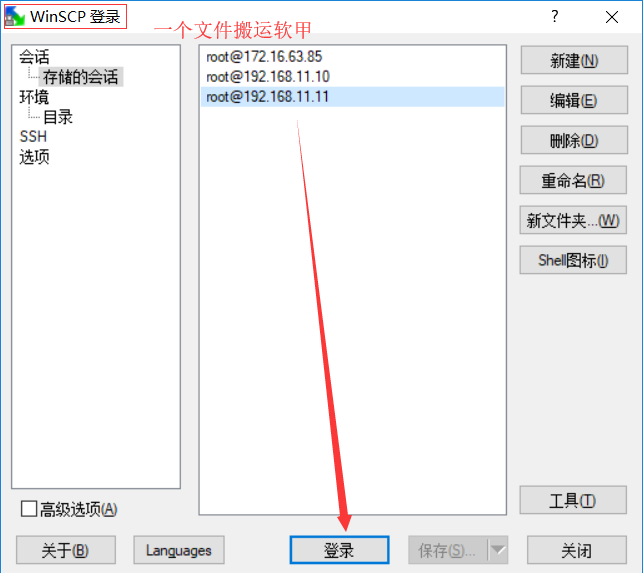

3. Connect to the three machines with Xshell 5:

https://www.cnblogs.com/meiLinYa/p/9125745.html (walkthrough of the connection process)

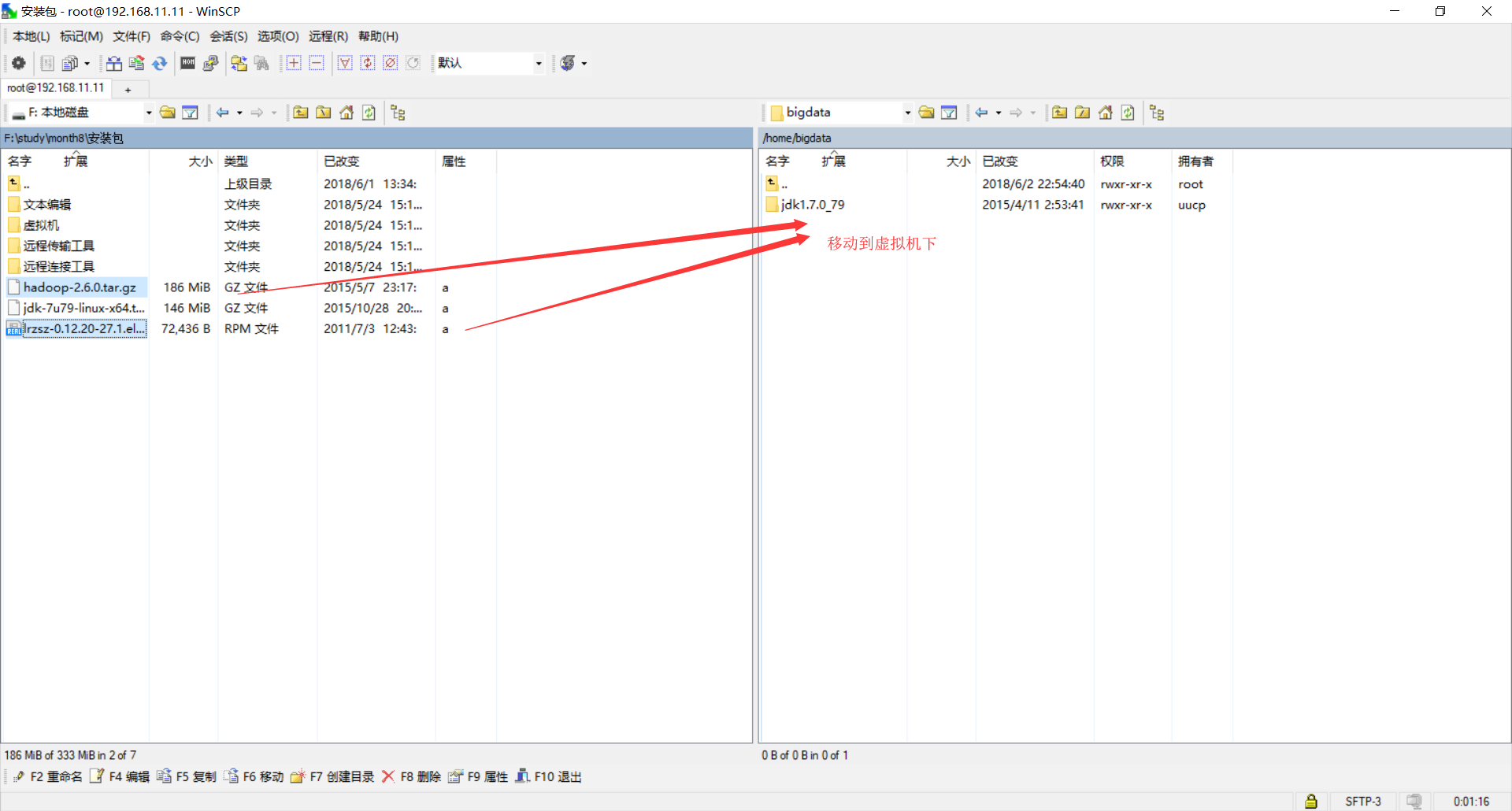

4. Copy the Hadoop archive onto the Linux machine.

Now start configuring the cluster.

Setting up the Hadoop environment:

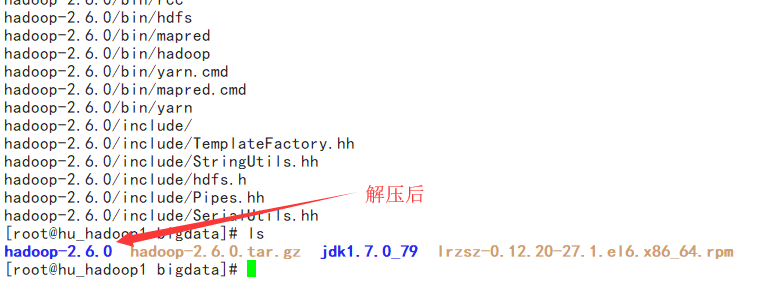

Extract the tarball: `tar -xzvf hadoop-2.6.0.tar.gz`

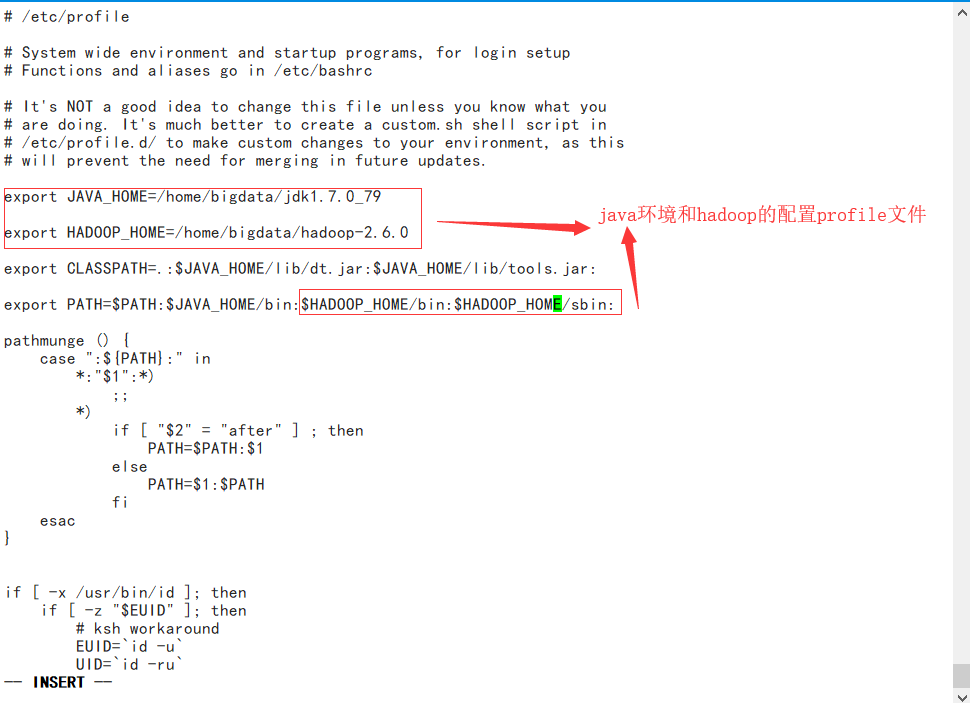

Configure the Hadoop environment variables: `vi /etc/profile`
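The lines added to `/etc/profile` typically look like the following. The install directory is an assumption (the post only shows it in a screenshot), so point `HADOOP_HOME` at wherever `hadoop-2.6.0` was actually extracted.

```shell
# Appended to /etc/profile (sketch). HADOOP_HOME is a hypothetical install path.
export HADOOP_HOME=/home/bigdata/hadoop-2.6.0
# bin/ holds hadoop, hdfs, yarn; sbin/ holds start-all.sh, start-dfs.sh, start-yarn.sh
export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin
```

After saving, run `source /etc/profile` and then `hadoop version`; the first line of its output should read `Hadoop 2.6.0`.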

Test:

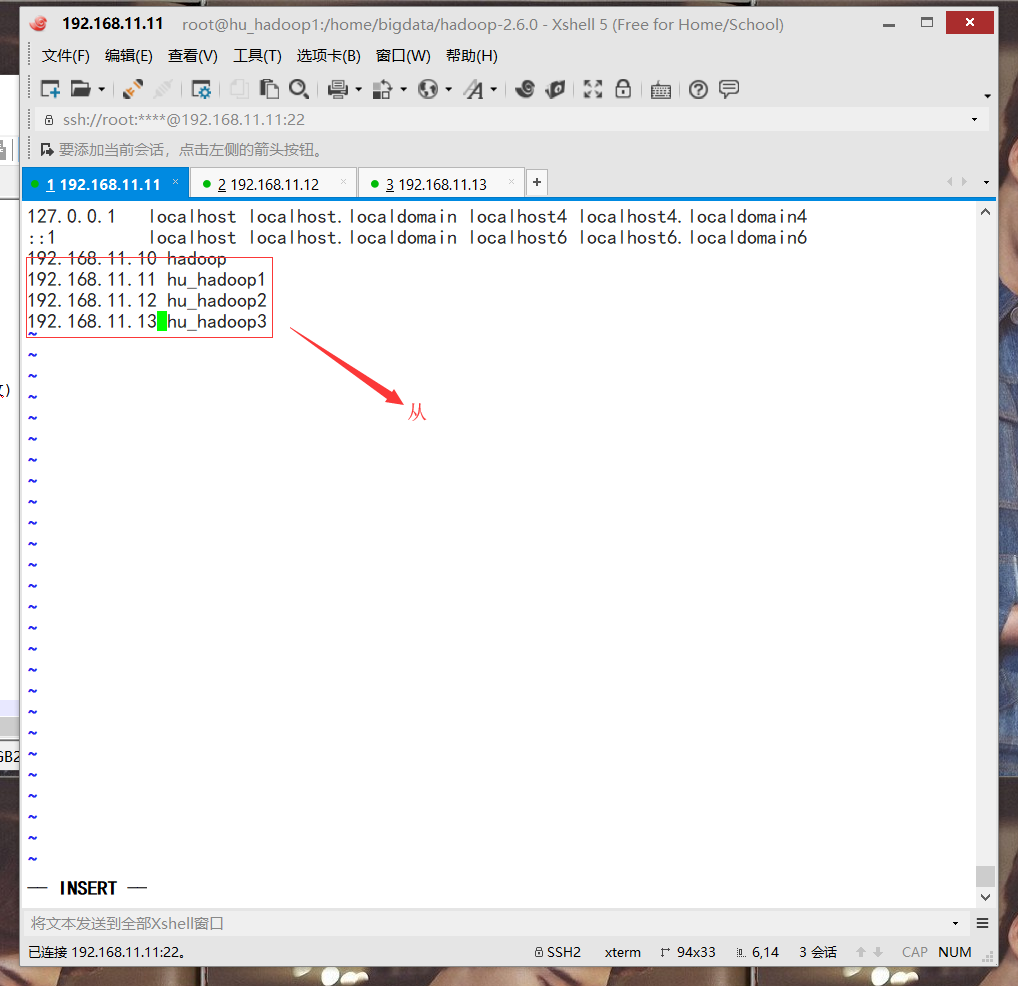

Edit the hosts file so the master can resolve the workers by hostname:

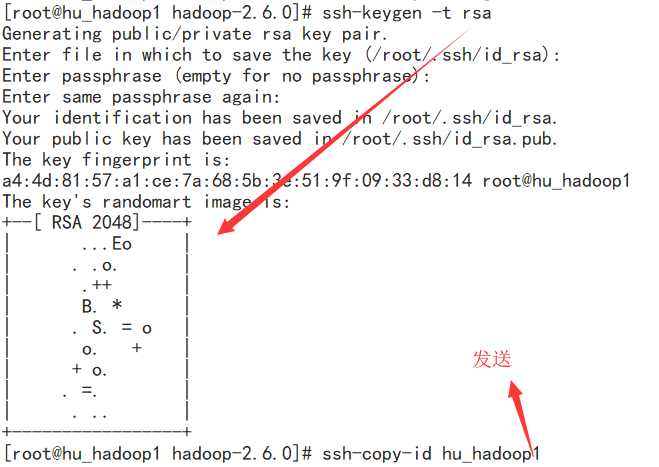

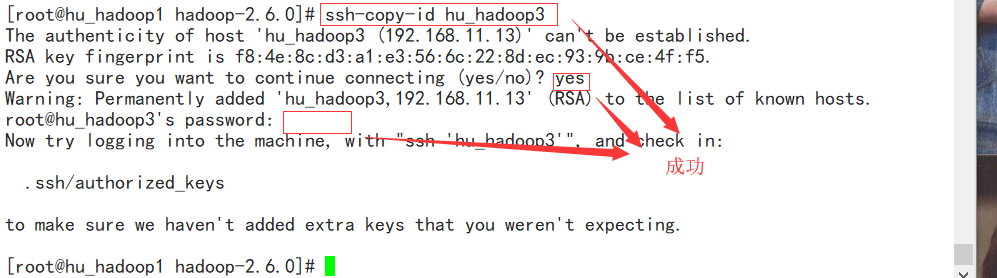
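Every node needs the same name-to-address mapping. The helper below only prints `/etc/hosts`-style lines so they can be reviewed before appending. Only 192.168.11.11 (hu-hadoop1) appears in the post; the addresses for hu-hadoop2 and hu-hadoop3 in the example comment are placeholders, and `hosts_entries` is a hypothetical helper.

```shell
#!/bin/sh
# hosts_entries IP HOST [IP HOST ...]: print one "IP<TAB>HOST" line per pair,
# the format /etc/hosts expects.
hosts_entries() {
  while [ "$#" -ge 2 ]; do
    printf '%s\t%s\n' "$1" "$2"
    shift 2
  done
}

# Example (the .12 and .13 addresses are placeholders):
# hosts_entries 192.168.11.11 hu-hadoop1 192.168.11.12 hu-hadoop2 192.168.11.13 hu-hadoop3 >> /etc/hosts
```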

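Generate an SSH key so the master can log in to the workers without a password. (After editing the hosts file, run `service network restart`.)

`ssh-keygen -t rsa` generates the key pair.

`ssh-copy-id <worker hostname>` installs the public key on that worker.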
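A sketch of the same steps as a loop, run on hu-hadoop1 as the user that will start Hadoop. hu-hadoop1 should receive its own key as well, because it is listed in `slaves` and the start scripts SSH into every listed host, including the master itself. `-N ""` sets an empty passphrase; `run`, `DRY_RUN` and `setup_ssh_trust` are hypothetical helpers.

```shell
#!/bin/sh
# run: execute a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# setup_ssh_trust HOST...: create an RSA key pair if none exists, then install
# the public key on every HOST (ssh-copy-id asks for each password once).
setup_ssh_trust() {
  if [ ! -f "$HOME/.ssh/id_rsa" ]; then
    run mkdir -p "$HOME/.ssh"
    run chmod 700 "$HOME/.ssh"
    run ssh-keygen -t rsa -N "" -f "$HOME/.ssh/id_rsa"
  fi
  for host in "$@"; do
    run ssh-copy-id "$host"
  done
}

# Example: setup_ssh_trust hu-hadoop1 hu-hadoop2 hu-hadoop3
```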

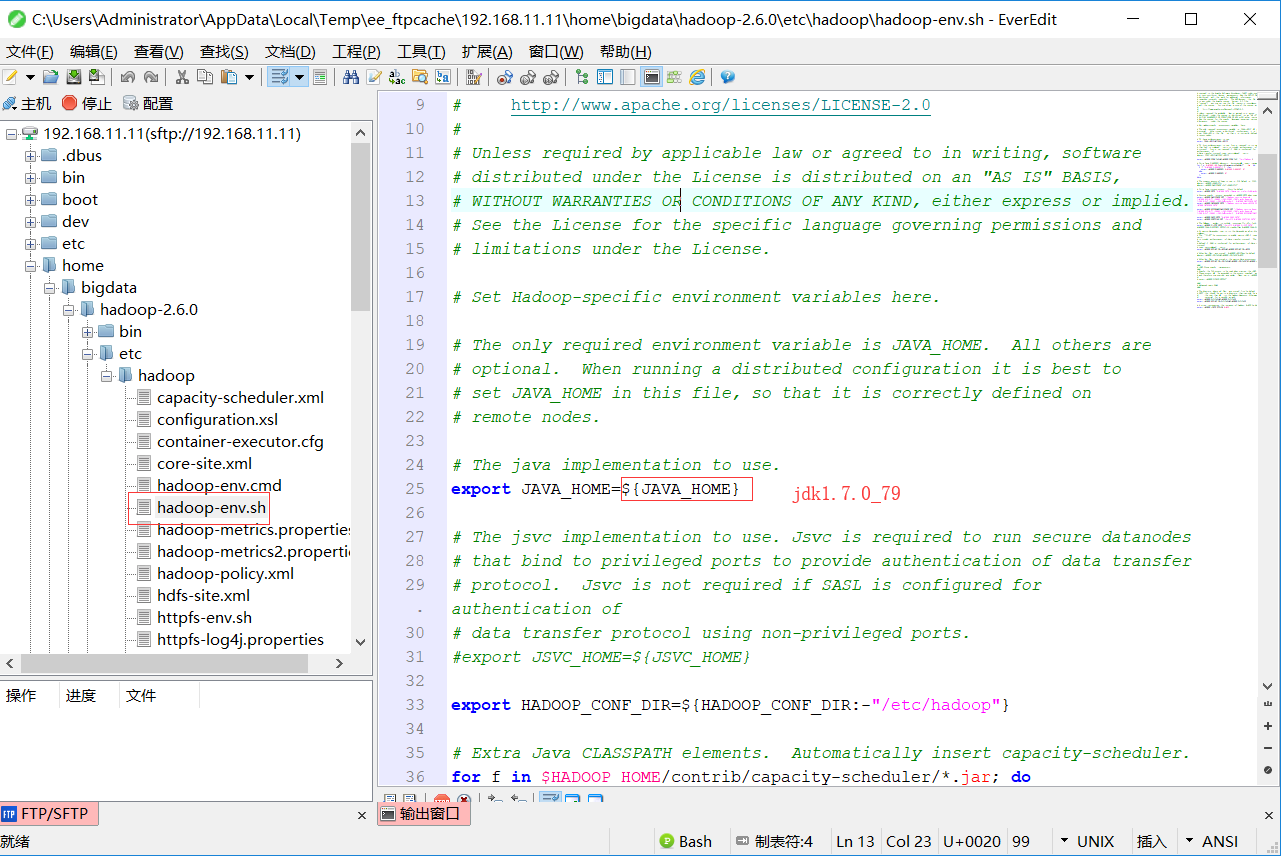

Connect with an editor and modify the Hadoop configuration files (six in total, all in hadoop-2.6.0/etc/hadoop):

1.hadoop-env.sh

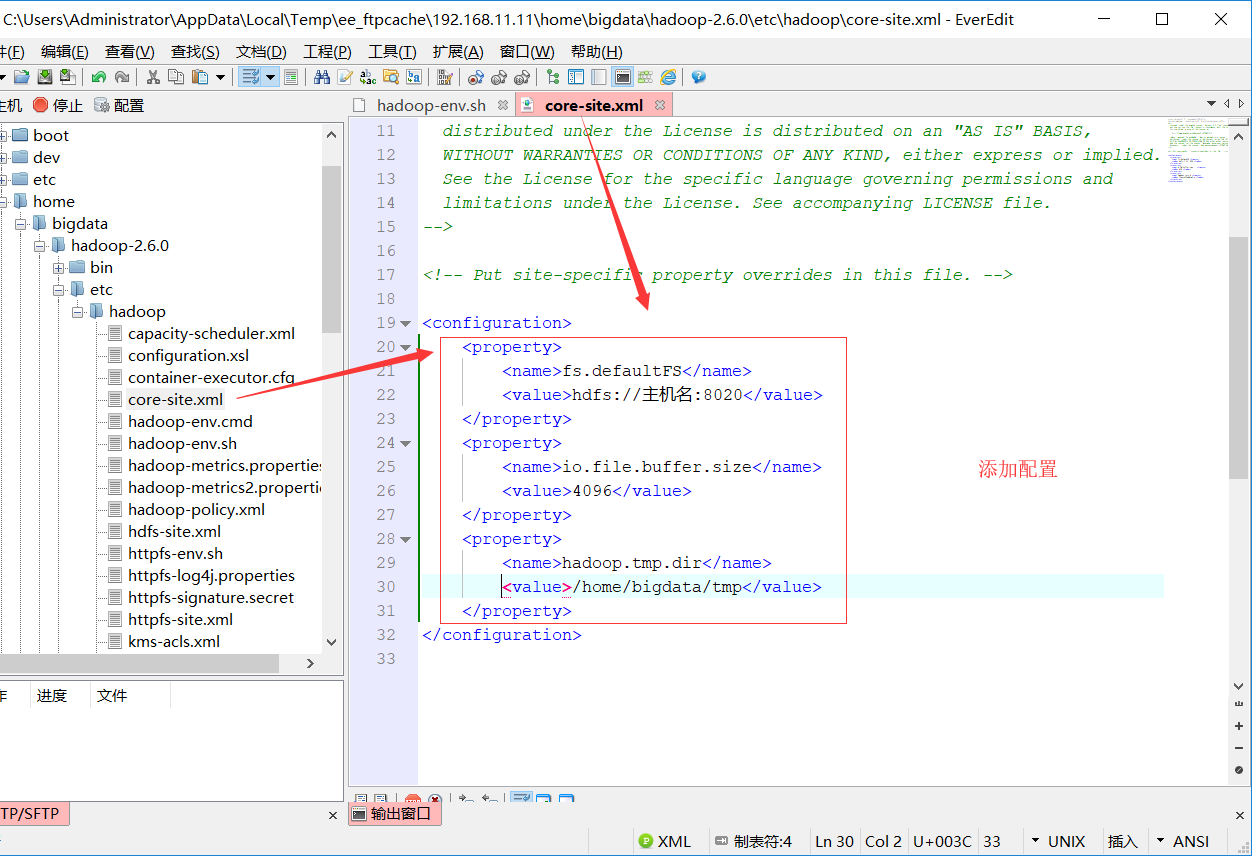
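In `hadoop-env.sh` the usual change is to replace `export JAVA_HOME=${JAVA_HOME}` with an absolute JDK path, because daemons started over SSH do not necessarily inherit the login shell's `JAVA_HOME`. The helper below makes that edit with `sed` so it can be repeated on every node; `set_java_home` and the JDK path in the example are hypothetical.

```shell
#!/bin/sh
# set_java_home FILE JDK_DIR: rewrite the "export JAVA_HOME=..." line in FILE.
# Writes through a temporary file because "sed -i" is not portable.
set_java_home() {
  sjh_file=$1
  sjh_jdk=$2
  sed "s|^export JAVA_HOME=.*|export JAVA_HOME=$sjh_jdk|" "$sjh_file" > "$sjh_file.tmp" &&
    mv "$sjh_file.tmp" "$sjh_file"
}

# Example (hypothetical JDK path):
# set_java_home "$HADOOP_HOME/etc/hadoop/hadoop-env.sh" /usr/java/jdk1.7.0_79
```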

2.core-site.xml

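```xml
<configuration>
  <!-- NameNode RPC address; clients and DataNodes connect here -->
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://hu-hadoop1:8020</value>
  </property>
  <!-- read/write buffer size in bytes -->
  <property>
    <name>io.file.buffer.size</name>
    <value>4096</value>
  </property>
  <!-- base directory for Hadoop's temporary and working files -->
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/home/bigdata/tmp</value>
  </property>
</configuration>
```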

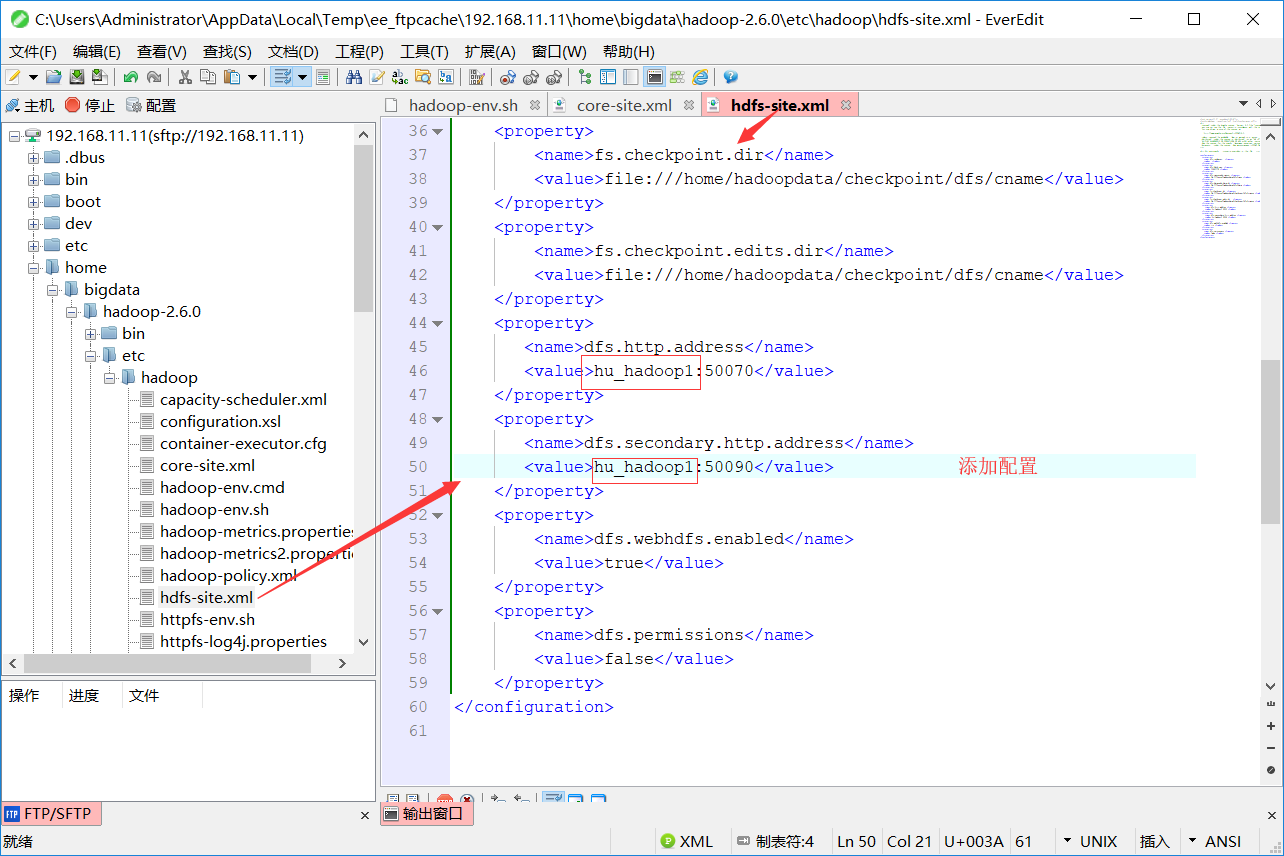

3.hdfs-site.xml

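```xml
<configuration>
  <!-- number of copies of each block; 3 matches the three DataNodes -->
  <property>
    <name>dfs.replication</name>
    <value>3</value>
  </property>
  <!-- block size in bytes (128 MB); dfs.blocksize is the non-deprecated name -->
  <property>
    <name>dfs.block.size</name>
    <value>134217728</value>
  </property>
  <!-- where the NameNode stores its metadata (fsimage and edit log) -->
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>file:///home/hadoopdata/dfs/name</value>
  </property>
  <!-- where each DataNode stores block data -->
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>file:///home/hadoopdata/dfs/data</value>
  </property>
  <!-- SecondaryNameNode checkpoint directories (deprecated names for
       dfs.namenode.checkpoint.dir and dfs.namenode.checkpoint.edits.dir) -->
  <property>
    <name>fs.checkpoint.dir</name>
    <value>file:///home/hadoopdata/checkpoint/dfs/cname</value>
  </property>
  <property>
    <name>fs.checkpoint.edits.dir</name>
    <value>file:///home/hadoopdata/checkpoint/dfs/cname</value>
  </property>
  <!-- NameNode web UI (deprecated name for dfs.namenode.http-address) -->
  <property>
    <name>dfs.http.address</name>
    <value>hu-hadoop1:50070</value>
  </property>
  <!-- SecondaryNameNode web UI; this also places the SecondaryNameNode on hu-hadoop2
       (deprecated name for dfs.namenode.secondary.http-address) -->
  <property>
    <name>dfs.secondary.http.address</name>
    <value>hu-hadoop2:50090</value>
  </property>
  <!-- enable the WebHDFS REST API -->
  <property>
    <name>dfs.webhdfs.enabled</name>
    <value>true</value>
  </property>
  <!-- disable HDFS permission checks (deprecated name for dfs.permissions.enabled) -->
  <property>
    <name>dfs.permissions</name>
    <value>false</value>
  </property>
</configuration>
```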

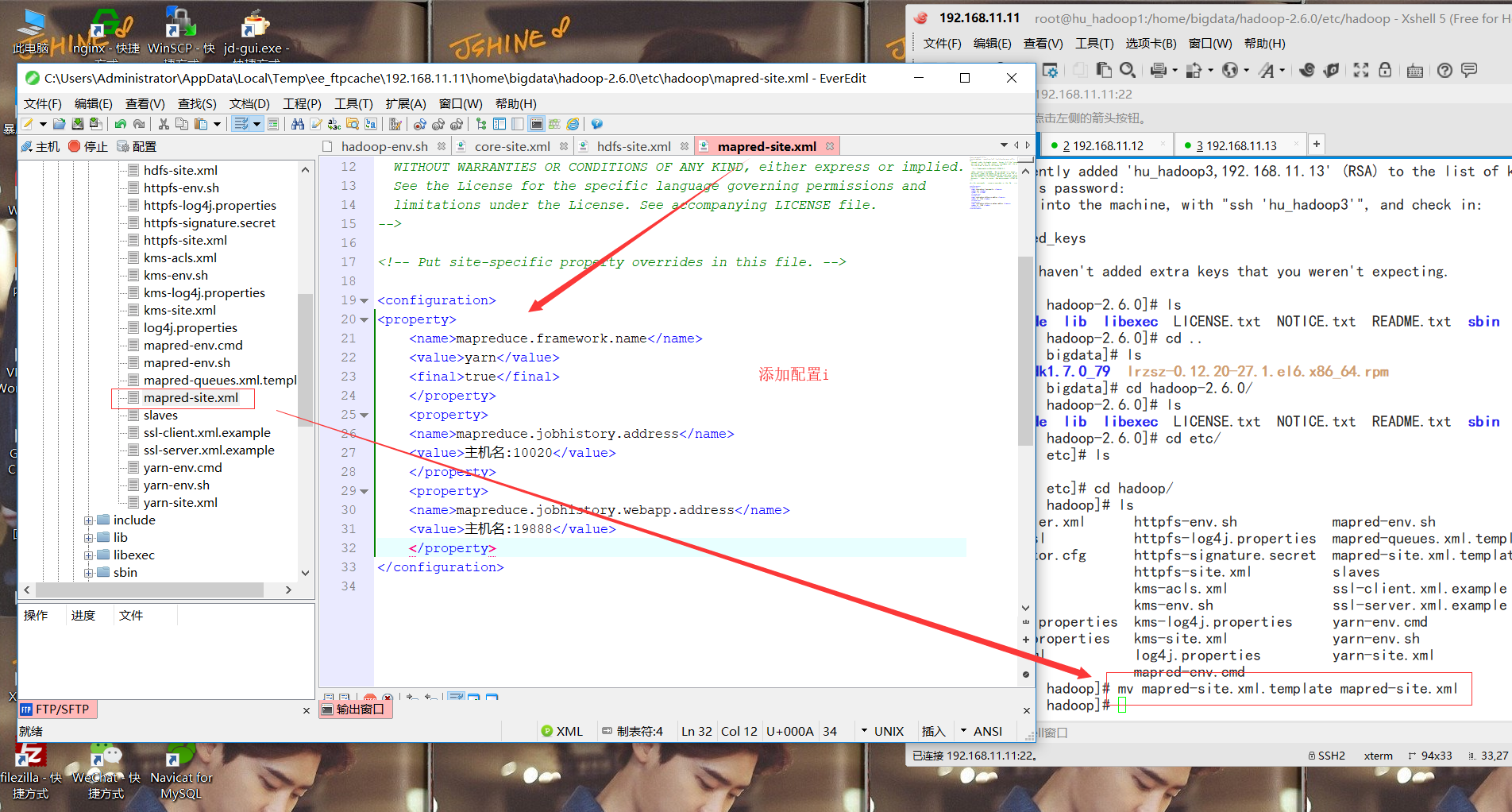
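`dfs.block.size` is given in bytes; shell arithmetic confirms that 134217728 is exactly 128 MB:

```shell
#!/bin/sh
# 128 MB expressed in bytes, the value used for dfs.block.size above
block_size=$((128 * 1024 * 1024))
echo "$block_size"   # prints 134217728
```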

4. Rename mapred-site.xml.template to mapred-site.xml, then add:

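```xml
<configuration>
  <!-- run MapReduce jobs on YARN; final means later configs cannot override it -->
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
    <final>true</final>
  </property>
  <!-- JobHistory server RPC address -->
  <property>
    <name>mapreduce.jobhistory.address</name>
    <value>hu-hadoop1:10020</value>
  </property>
  <!-- JobHistory server web UI -->
  <property>
    <name>mapreduce.jobhistory.webapp.address</name>
    <value>hu-hadoop1:19888</value>
  </property>
</configuration>
```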

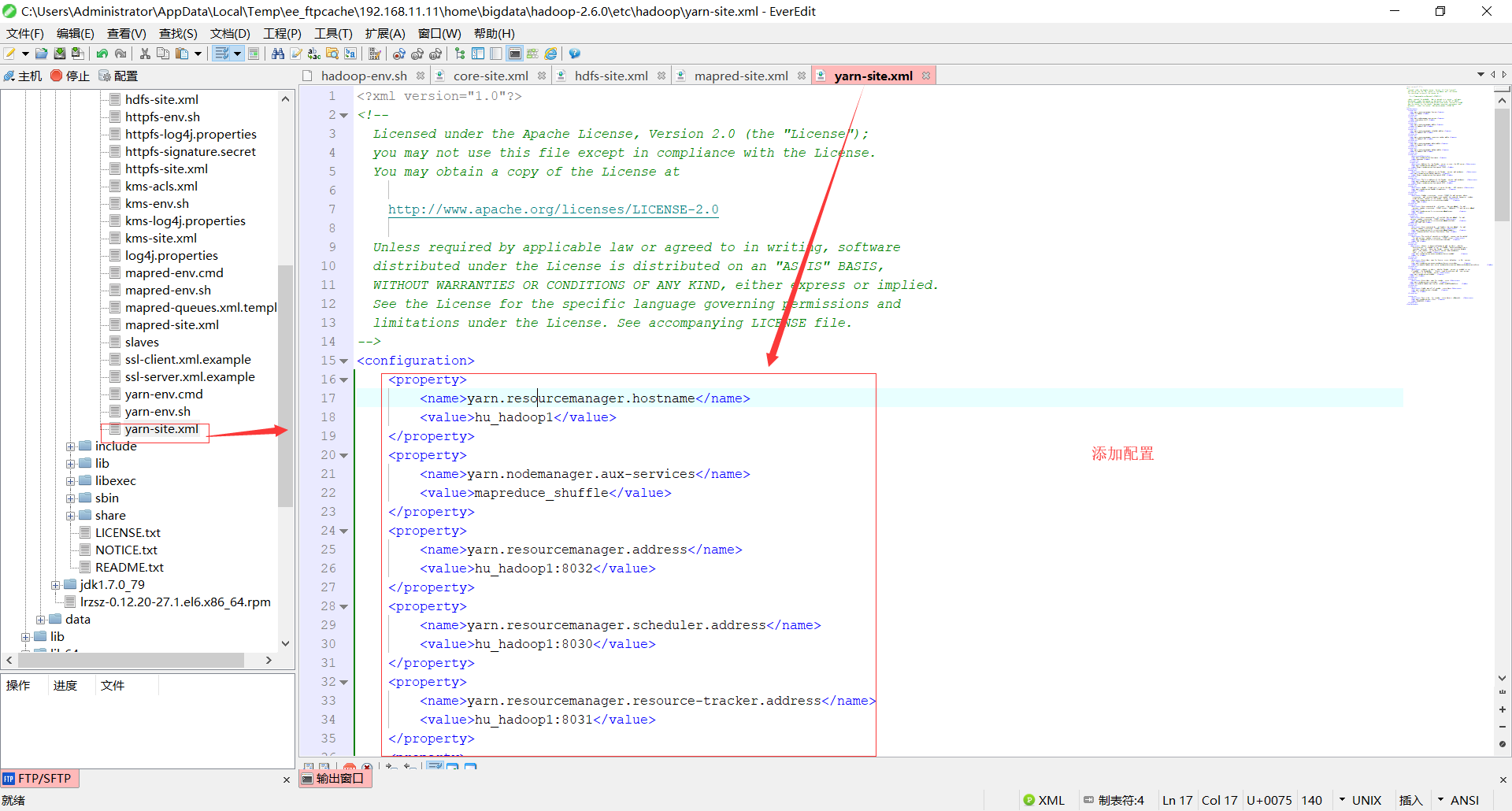
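Two shell steps go with this file. Copying with `cp` keeps the template around (renaming with `mv` works just as well). Separately, the JobHistory server configured above on hu-hadoop1:10020/19888 is not started by `start-all.sh`; in Hadoop 2.x it has its own script, `mr-jobhistory-daemon.sh`. A sketch, assuming `HADOOP_HOME` is set as in `/etc/profile`; `setup_mapred`, `start_history_server` and the `DRY_RUN` switch are hypothetical helpers.

```shell
#!/bin/sh
# run: execute a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# Before editing: create mapred-site.xml from the shipped template (on hu-hadoop1).
setup_mapred() {
  conf_dir="$HADOOP_HOME/etc/hadoop"
  run cp "$conf_dir/mapred-site.xml.template" "$conf_dir/mapred-site.xml"
}

# After the cluster is up: start the JobHistory server (ports 10020 and 19888 above).
start_history_server() {
  run "$HADOOP_HOME/sbin/mr-jobhistory-daemon.sh" start historyserver
}
```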

5.yarn-site.xml

```xml
<configuration>
  <!-- ResourceManager host; the five *.address properties below spell out the
       default ports that would otherwise be derived from this hostname -->
  <property>
    <name>yarn.resourcemanager.hostname</name>
    <value>hu-hadoop1</value>
  </property>
  <!-- shuffle service that lets NodeManagers serve map output to reducers -->
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <property>
    <name>yarn.resourcemanager.address</name>
    <value>hu-hadoop1:8032</value>
  </property>
  <property>
    <name>yarn.resourcemanager.scheduler.address</name>
    <value>hu-hadoop1:8030</value>
  </property>
  <property>
    <name>yarn.resourcemanager.resource-tracker.address</name>
    <value>hu-hadoop1:8031</value>
  </property>
  <property>
    <name>yarn.resourcemanager.admin.address</name>
    <value>hu-hadoop1:8033</value>
  </property>
  <property>
    <name>yarn.resourcemanager.webapp.address</name>
    <value>hu-hadoop1:8088</value>
  </property>

  <!-- Timeline server (application history), also on hu-hadoop1 -->
  <property>
    <description>The hostname of the Timeline service web application.</description>
    <name>yarn.timeline-service.hostname</name>
    <value>hu-hadoop1</value>
  </property>
  <property>
    <description>Address for the Timeline server to start the RPC server.</description>
    <name>yarn.timeline-service.address</name>
    <value>${yarn.timeline-service.hostname}:10200</value>
  </property>
  <property>
    <description>The http address of the Timeline service web application.</description>
    <name>yarn.timeline-service.webapp.address</name>
    <value>${yarn.timeline-service.hostname}:8188</value>
  </property>
  <property>
    <description>The https address of the Timeline service web application.</description>
    <name>yarn.timeline-service.webapp.https.address</name>
    <value>${yarn.timeline-service.hostname}:8190</value>
  </property>
  <property>
    <description>Handler thread count to serve the client RPC requests.</description>
    <name>yarn.timeline-service.handler-thread-count</name>
    <value>10</value>
  </property>
  <property>
    <description>Enables cross-origin support (CORS) for web services where
      cross-origin web response headers are needed. For example, javascript making
      a web services request to the timeline server.</description>
    <name>yarn.timeline-service.http-cross-origin.enabled</name>
    <value>false</value>
  </property>
  <property>
    <description>Comma separated list of origins that are allowed for web
      services needing cross-origin (CORS) support. Wildcards (*) and patterns
      allowed</description>
    <name>yarn.timeline-service.http-cross-origin.allowed-origins</name>
    <value>*</value>
  </property>
  <property>
    <description>Comma separated list of methods that are allowed for web
      services needing cross-origin (CORS) support.</description>
    <name>yarn.timeline-service.http-cross-origin.allowed-methods</name>
    <value>GET,POST,HEAD</value>
  </property>
  <property>
    <description>Comma separated list of headers that are allowed for web
      services needing cross-origin (CORS) support.</description>
    <name>yarn.timeline-service.http-cross-origin.allowed-headers</name>
    <value>X-Requested-With,Content-Type,Accept,Origin</value>
  </property>
  <property>
    <description>The number of seconds a pre-flighted request can be cached
      for web services needing cross-origin (CORS) support.</description>
    <name>yarn.timeline-service.http-cross-origin.max-age</name>
    <value>1800</value>
  </property>
  <property>
    <description>Indicate to ResourceManager as well as clients whether
      history-service is enabled or not. If enabled, ResourceManager starts
      recording historical data that Timeline service can consume. Similarly,
      clients can redirect to the history service when applications
      finish if this is enabled.</description>
    <name>yarn.timeline-service.generic-application-history.enabled</name>
    <value>true</value>
  </property>
  <property>
    <description>Store class name for history store, defaulting to file system
      store</description>
    <name>yarn.timeline-service.generic-application-history.store-class</name>
    <value>org.apache.hadoop.yarn.server.applicationhistoryservice.FileSystemApplicationHistoryStore</value>
  </property>
  <property>
    <description>Indicate to clients whether Timeline service is enabled or not.
      If enabled, the TimelineClient library used by end-users will post entities
      and events to the Timeline server.</description>
    <name>yarn.timeline-service.enabled</name>
    <value>true</value>
  </property>
  <property>
    <description>Store class name for timeline store.</description>
    <name>yarn.timeline-service.store-class</name>
    <value>org.apache.hadoop.yarn.server.timeline.LeveldbTimelineStore</value>
  </property>
  <property>
    <description>Enable age off of timeline store data.</description>
    <name>yarn.timeline-service.ttl-enable</name>
    <value>true</value>
  </property>
  <property>
    <description>Time to live for timeline store data in milliseconds.</description>
    <name>yarn.timeline-service.ttl-ms</name>
    <value>6048000000</value>
  </property>
</configuration>
```

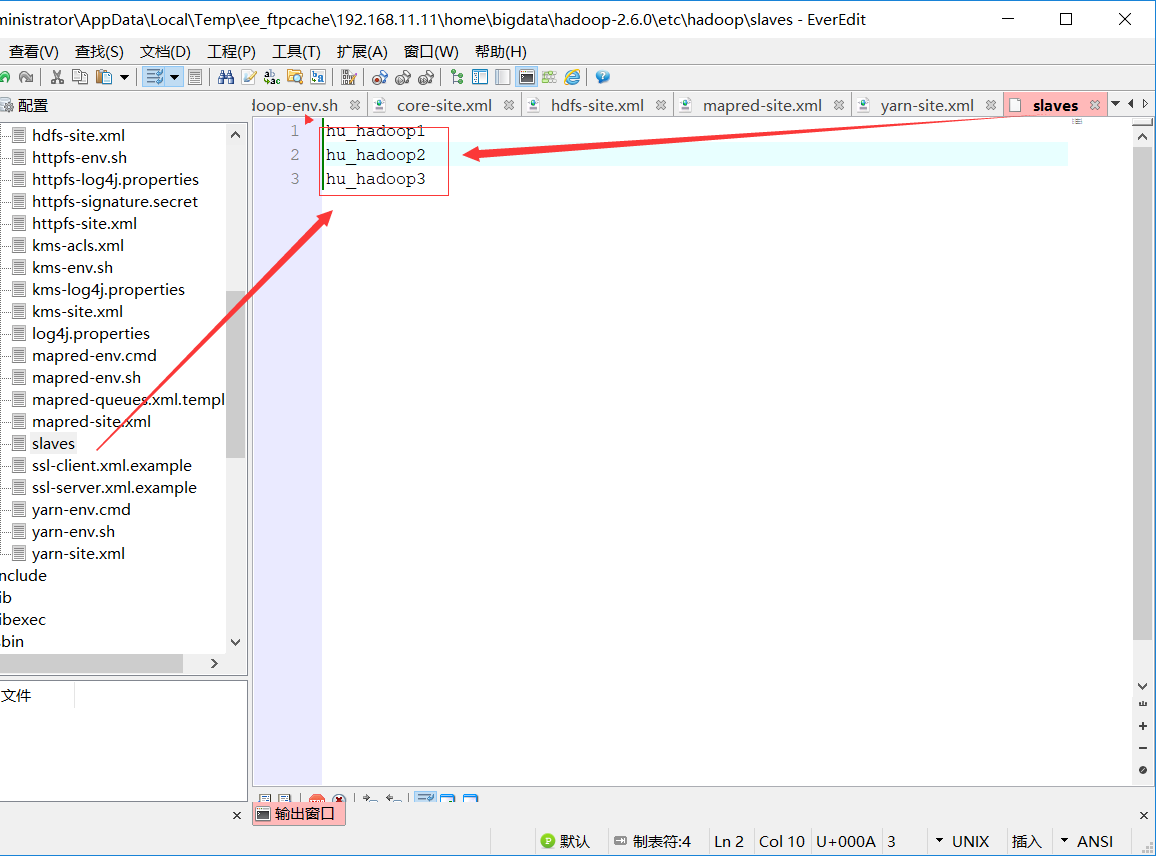
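`yarn.timeline-service.ttl-ms` is in milliseconds, so it is worth converting the value before relying on it. The sketch shows that 6048000000 ms is 70 days, while Hadoop's shipped default of 604800000 ms is 7 days; if one-week retention was intended, the value has one zero too many. `ms_to_days` is a hypothetical helper.

```shell
#!/bin/sh
# ms_to_days MS: convert a millisecond duration to whole days (86400000 ms per day)
ms_to_days() {
  echo $(( $1 / 86400000 ))
}

ms_to_days 6048000000   # value configured above: prints 70
ms_to_days 604800000    # Hadoop's default: prints 7
```

Note also that the Timeline server is a separate daemon that `start-all.sh` does not launch; in Hadoop 2.6 it is started on hu-hadoop1 with `yarn-daemon.sh start timelineserver`.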

6.slaves

One hostname per line; every host listed here runs a DataNode and a NodeManager:

```
hu-hadoop1
hu-hadoop2
hu-hadoop3
```

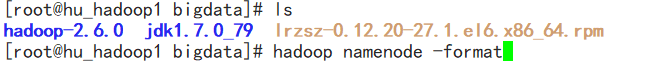
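Because the start scripts walk `slaves` line by line, a hostname listed twice makes them try to launch that host's daemons twice. A quick duplicate check with `awk`, ignoring blank lines and `#` comments; `check_slaves` is a hypothetical helper.

```shell
#!/bin/sh
# check_slaves FILE: print every hostname that appears more than once and
# exit non-zero if any duplicates were found.
check_slaves() {
  awk '
    NF == 0 || $1 ~ /^#/ { next }   # skip blank lines and comments
    { count[$1]++ }
    END {
      bad = 0
      for (h in count) if (count[h] > 1) { print h; bad = 1 }
      exit bad
    }' "$1"
}

# Example: check_slaves "$HADOOP_HOME/etc/hadoop/slaves"
```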

Format the NameNode on hu-hadoop1 (`hdfs namenode -format`):

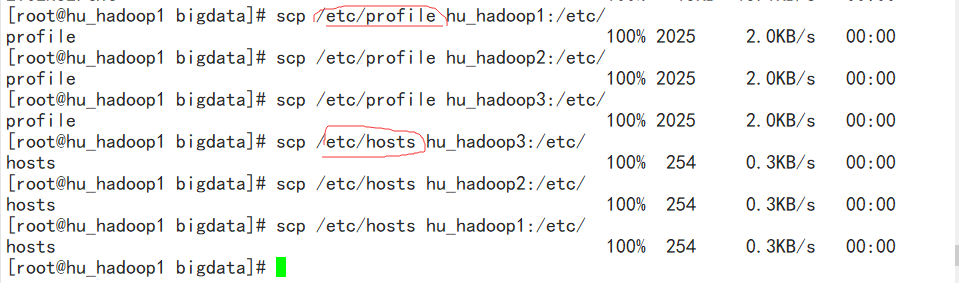
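Formatting is a one-time step on hu-hadoop1. Formatting again later generates a new clusterID, and DataNodes that still hold the old one refuse to register ("Incompatible clusterIDs" in their logs) until their data directories are cleared. The sketch below refuses to format when the name directory already contains metadata; `format_namenode` and the `DRY_RUN` switch are hypothetical helpers.

```shell
#!/bin/sh
# run: execute a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# format_namenode NAME_DIR: format HDFS only if NAME_DIR (dfs.namenode.name.dir)
# has no current/ subdirectory yet, so a second run cannot wipe the namespace.
format_namenode() {
  if [ -d "$1/current" ]; then
    echo "already formatted: $1/current exists" >&2
    return 1
  fi
  run hdfs namenode -format
}
```

With the hdfs-site.xml above, the call would be `format_namenode /home/hadoopdata/dfs/name`.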

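Send /etc/profile, /etc/hosts and the hadoop-2.6.0 directory to the other two machines (hu-hadoop2 and hu-hadoop3), for example: `scp -r hadoop-2.6.0 <worker hostname>:$(pwd)`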
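Copying to both workers can be looped. `scp` needs `-r` for the hadoop-2.6.0 directory, and overwriting `/etc/profile` and `/etc/hosts` on the targets requires root there. The absolute path in the example is an assumption; `distribute` is a hypothetical helper, and `DRY_RUN=1` prints the `scp` commands instead of running them.

```shell
#!/bin/sh
# run: execute a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# distribute HADOOP_DIR HOST...: copy the Hadoop directory (absolute path) plus
# /etc/profile and /etc/hosts to each HOST, keeping the same paths as on the master.
distribute() {
  dist_dir=$1
  shift
  for host in "$@"; do
    run scp -r "$dist_dir" "$host:$(dirname "$dist_dir")/"
    run scp /etc/profile "$host:/etc/profile"
    run scp /etc/hosts "$host:/etc/hosts"
  done
}

# Example (hypothetical install path): distribute /home/bigdata/hadoop-2.6.0 hu-hadoop2 hu-hadoop3
```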


Then, on each worker, run `source /etc/profile` (reload the profile) and `service network restart` (restart networking).

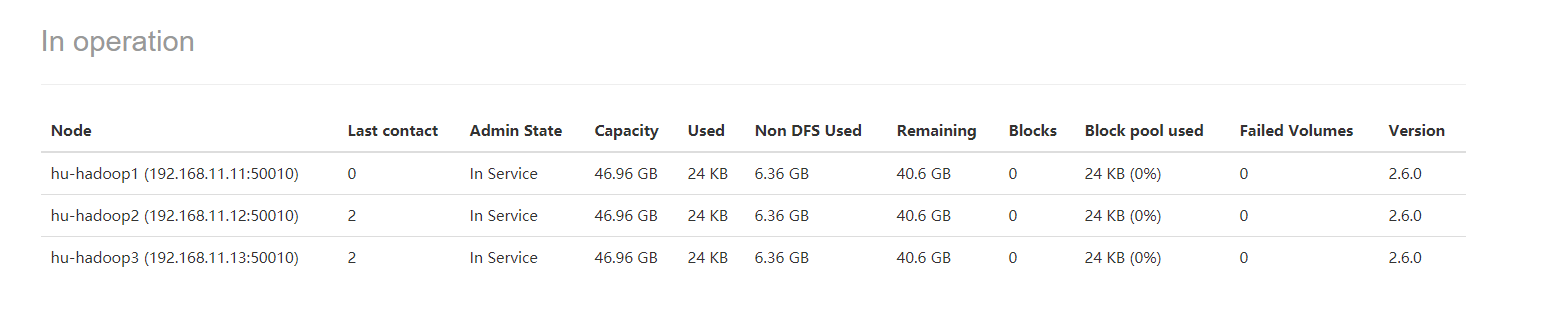

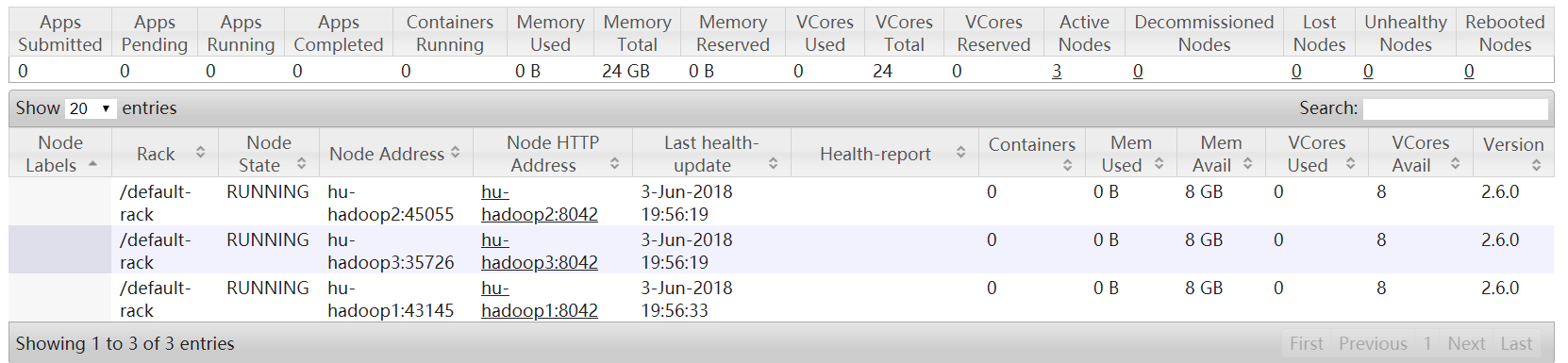

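Start the services with `start-all.sh` (deprecated in Hadoop 2.x; it simply runs start-dfs.sh and start-yarn.sh) and check the web UIs. This failed for me at first because a hostname must not contain "_", so I renamed the machines, e.g. hu_hadoop1 became hu-hadoop1.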

NameNode (HDFS) web UI: http://192.168.11.11:50070

ResourceManager (YARN) web UI: http://192.168.11.11:8088

Both pages load, so the cluster is up and running.
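About the underscore error above: Java's `java.net.URI`, which Hadoop uses to parse addresses such as `hdfs://hu_hadoop1:8020`, does not accept `_` in a host name, so `hu_hadoop1` is not recognized as a host. A quick pre-check against RFC 952/1123 label syntax before naming the machines; `valid_hostname` is a hypothetical helper, and it does not check the 253-character total length.

```shell
#!/bin/sh
# valid_hostname NAME: succeed if every dot-separated label is 1-63 letters, digits
# or hyphens and does not start or end with a hyphen (RFC 952/1123 label rules).
valid_hostname() {
  printf '%s\n' "$1" | grep -Eq '^([A-Za-z0-9]([A-Za-z0-9-]{0,61}[A-Za-z0-9])?)(\.[A-Za-z0-9]([A-Za-z0-9-]{0,61}[A-Za-z0-9])?)*$'
}

for name in hu_hadoop1 hu-hadoop1; do
  if valid_hostname "$name"; then echo "$name: ok"; else echo "$name: invalid"; fi
done
```

Running it prints `hu_hadoop1: invalid` followed by `hu-hadoop1: ok`.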

huhu_k: The less others love you, the more you should love yourself.