Kafka is a message queue, similar to ActiveMQ and RabbitMQ. In most cases only its publish and subscribe features are used.

Environment

As before, we rely on Docker to set up the environment.

-- Pull the images

docker pull wurstmeister/kafka

docker pull wurstmeister/zookeeper

-- Start ZooKeeper

docker run -d --restart=always --name zookeeper -p 2181:2181 -t wurstmeister/zookeeper

-- Start Kafka

docker run -d --restart=always --name kafka01 -p 9092:9092 \
  -e KAFKA_BROKER_ID=0 \
  -e KAFKA_ZOOKEEPER_CONNECT=192.168.100.19:2181 \
  -e KAFKA_ADVERTISED_LISTENERS=PLAINTEXT://192.168.100.19:9092 \
  -e KAFKA_LISTENERS=PLAINTEXT://0.0.0.0:9092 \
  wurstmeister/kafka

Go implementation

package main

import (
	"fmt"
	"sync"
	"time"

	"github.com/Shopify/sarama"
)

var (
	address = "192.168.100.19:9092"
	topic   = "test"
	wg      sync.WaitGroup
)

func main() {
	go producer_test()
	go consumer_test()
	// Block forever so both goroutines keep running
	select {}
}

func producer_test() {
	config := sarama.NewConfig()
	// Wait until all in-sync replicas have stored the message before acknowledging
	config.Producer.RequiredAcks = sarama.WaitForAll
	// Random partitioner: picks a random partition for each message
	config.Producer.Partitioner = sarama.NewRandomPartitioner
	// Report successful deliveries; a SyncProducer requires this to be true
	config.Producer.Return.Successes = true
	// Create a synchronous producer from the broker address and the config
	producer, err := sarama.NewSyncProducer([]string{address}, config)
	if err != nil {
		panic(err)
	}
	defer producer.Close()

	// Build the message to send
	msg := &sarama.ProducerMessage{
		Topic: topic, // topic the message is published to
		// Ignored by the random partitioner; only sarama.NewManualPartitioner honors this field
		Partition: int32(10),
		Key:       sarama.StringEncoder("key"), // message key
	}

	i := 0
	for {
		i++
		// Convert the string to a byte slice
		msg.Value = sarama.ByteEncoder(fmt.Sprintf("this is a message:%d", i))
		// SendMessage produces the given message and blocks until it is acknowledged
		partition, offset, err := producer.SendMessage(msg)
		if err != nil {
			// On failure an error is returned
			fmt.Println("Send message Fail:", err)
		} else {
			// On success the partition and offset of the message are returned
			fmt.Printf("send message Partition = %d, offset=%d\n", partition, offset)
		}
		time.Sleep(time.Second * 5)
	}
}

func consumer_test() {
	// Create a consumer from the broker address; nil selects the default config
	consumer, err := sarama.NewConsumer([]string{address}, nil)
	if err != nil {
		panic(err)
	}
	// Partitions(topic) returns the IDs of all partitions of the topic
	partitionList, err := consumer.Partitions(topic)
	if err != nil {
		panic(err)
	}
	// Iterate over the partition IDs themselves, not over the slice indexes
	for _, partition := range partitionList {
		// ConsumePartition creates a partition consumer for the topic, partition and offset.
		// It returns an error if this consumer is already consuming that partition.
		// sarama.OffsetNewest starts from the newest messages.
		pc, err := consumer.ConsumePartition(topic, partition, sarama.OffsetNewest)
		if err != nil {
			panic(err)
		}
		defer pc.AsyncClose()
		wg.Add(1)
		go func(pc sarama.PartitionConsumer) {
			defer wg.Done()
			// Messages() returns a read-only channel of messages delivered by the broker
			for msg := range pc.Messages() {
				fmt.Printf("receive message %s---Partition:%d, Offset:%d, Key:%s, Value:%s\n",
					msg.Topic, msg.Partition, msg.Offset, string(msg.Key), string(msg.Value))
			}
		}(pc)
	}
	wg.Wait()
	consumer.Close()
}

Output:

D:\Project\GoProject\src\main>go run main.go
send message Partition = 0, offset=0
receive message test---Partition:0, Offset:0, Key:key, Value:this is a message:1
send message Partition = 0, offset=1
receive message test---Partition:0, Offset:1, Key:key, Value:this is a message:2
send message Partition = 0, offset=2
receive message test---Partition:0, Offset:2, Key:key, Value:this is a message:3
send message Partition = 0, offset=3
receive message test---Partition:0, Offset:3, Key:key, Value:this is a message:4

C# implementation

We use the officially recommended Confluent.Kafka client.

using Confluent.Kafka;
using System;
using System.Threading.Tasks;

namespace T.GrpcStreamClient
{
    class Program
    {
        static void Main(string[] args)
        {
            // Run the producer and the consumer side by side
            Task.Run(() => { Produce(); });
            Task.Run(() => { Consumer(); });
            Console.ReadKey();
        }

        static void Produce()
        {
            var config = new ProducerConfig { BootstrapServers = "192.168.100.19:9092" };
            var builder = new ProducerBuilder<Null, string>(config);
            builder.SetErrorHandler((p, e) =>
            {
                Console.WriteLine($"Producer_Error: Code:{e.Code};Reason:{e.Reason};IsError:{e.IsError}");
            });
            using (var producer = builder.Build())
            {
                int i = 0;
                while (true)
                {
                    i++;
                    // Produce is asynchronous; the callback runs once the delivery report arrives
                    producer.Produce("test", new Message<Null, string> { Value = $"hello {i}" }, d =>
                    {
                        Console.WriteLine($"Producer message:Partition:{d.Partition.Value},message={d.Message.Value}");
                    });
                    // Wait up to 3 seconds for in-flight messages to be delivered to the broker
                    producer.Flush(TimeSpan.FromSeconds(3));
                }
            }
        }

        static void Consumer()
        {
            Console.WriteLine("Hello World!");
            var conf = new ConsumerConfig
            {
                GroupId = "test-consumer-group",
                BootstrapServers = "192.168.100.19:9092",
                // Start from the earliest message when the group has no committed offset
                AutoOffsetReset = AutoOffsetReset.Earliest,
            };
            var builder = new ConsumerBuilder<Ignore, string>(conf);
            builder.SetErrorHandler((c, e) =>
            {
                Console.WriteLine($"Consumer_Error: Code:{e.Code};Reason:{e.Reason};IsError:{e.IsError}");
            });
            using (var consumer = builder.Build())
            {
                consumer.Subscribe("test");
                while (true)
                {
                    try
                    {
                        // Blocks until a message is available
                        var consume = consumer.Consume();
                        string receiveMsg = consume.Message.Value;
                        Console.WriteLine($"Consumed message '{receiveMsg}' at: '{consume.TopicPartitionOffset}'.");
                        // Business logic starts here
                    }
                    catch (ConsumeException e)
                    {
                        Console.WriteLine($"Consumer_Error occurred: {e.Error.Reason}");
                    }
                }
            }
        }
    }
}

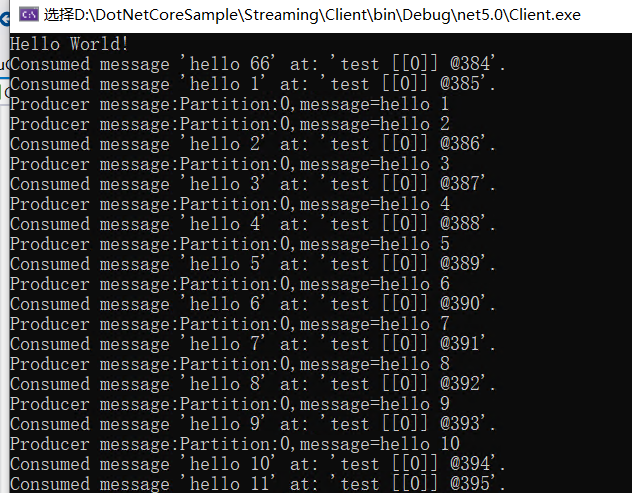

运行效果:

References:

https://studygolang.com/articles/17912

https://www.cnblogs.com/gwyy/p/13266589.html

https://www.cnblogs.com/hsxian/p/12907542.html