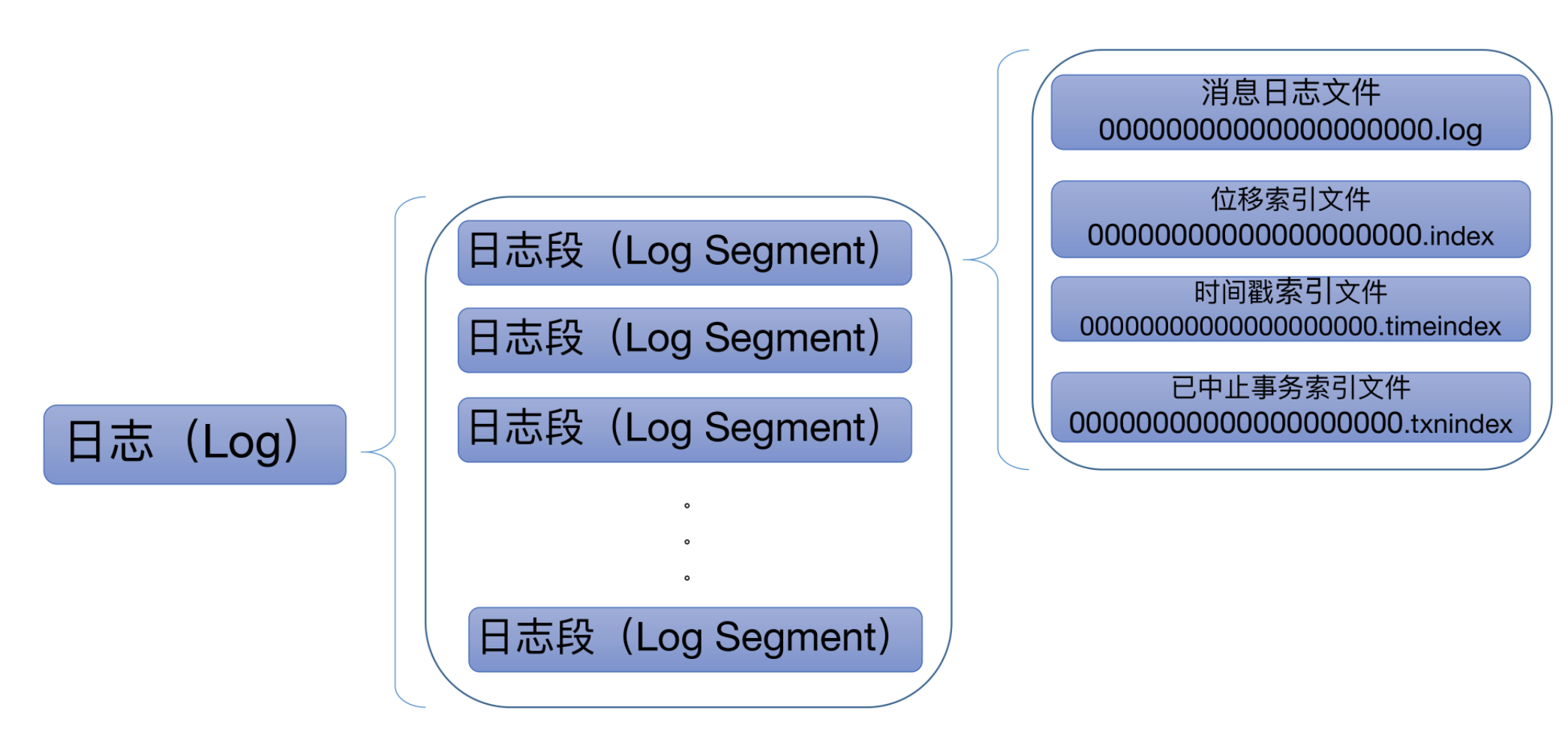

我们先回想一下Kafka的日志结构是怎样的?

Kafka 日志对象由多个日志段对象组成,而每个日志段对象会在磁盘上创建一组文件,包括消息日志文件(.log)、位移索引文件(.index)、时间戳索引文件(.timeindex)以及已中止(Aborted)事务的索引文件(.txnindex)。当然,如果你没有使用 Kafka 事务,已中止事务的索引文件是不会被创建出来的。

下面我们看一下LogSegment的实现情况,具体文件位置是 core/src/main/scala/kafka/log/LogSegment.scala。

LogSegment

LogSegment.scala这个文件里面定义了三个对象:

- LogSegment class;

- LogSegment object;

- LogFlushStats object。LogFlushStats 结尾有个 Stats,它是做统计用的,主要负责为日志落盘进行计时。

我这里贴一下LogSegment.scala这个文件上面的注释,介绍了LogSegment的构成:

A segment of the log. Each segment has two components: a log and an index. The log is a FileRecords containing the actual messages. The index is an OffsetIndex that maps from logical offsets to physical file positions. Each segment has a base offset which is an offset <= the least offset of any message in this segment and > any offset in any previous segment

这段注释清楚的写了每个日志段由两个核心组件构成:日志和索引。每个日志段都有一个起始位置:base offset,而该位移值是此日志段所有消息中最小的位移值,同时,该值却又比前面任何日志段中消息的位移值都大。

LogSegment构造参数

class LogSegment private[log] (val log: FileRecords,

val lazyOffsetIndex: LazyIndex[OffsetIndex],

val lazyTimeIndex: LazyIndex[TimeIndex],

val txnIndex: TransactionIndex,

val baseOffset: Long,

val indexIntervalBytes: Int,

val rollJitterMs: Long,

val time: Time) extends Logging { … }

FileRecords是实际保存 Kafka 消息的对象。

lazyOffsetIndex、lazyTimeIndex 和 txnIndex 分别对应位移索引文件、时间戳索引文件、已中止事务索引文件。

baseOffset是每个日志段对象的起始位移,每个 LogSegment 对象实例一旦被创建,它的起始位移就是固定的了,不能再被更改。

indexIntervalBytes 值其实就是 Broker 端参数 log.index.interval.bytes 值,它控制了日志段对象新增索引项的频率。默认情况下,日志段至少新写入 4KB 的消息数据才会新增一条索引项。

time 是用于统计计时的一个实现类。

append

@nonthreadsafe

def append(largestOffset: Long,

largestTimestamp: Long,

shallowOffsetOfMaxTimestamp: Long,

records: MemoryRecords): Unit = {

// 判断是否日志段是否为空

if (records.sizeInBytes > 0) {

trace(s"Inserting ${records.sizeInBytes} bytes at end offset $largestOffset at position ${log.sizeInBytes} " +

s"with largest timestamp $largestTimestamp at shallow offset $shallowOffsetOfMaxTimestamp")

val physicalPosition = log.sizeInBytes()

if (physicalPosition == 0)

rollingBasedTimestamp = Some(largestTimestamp)

// 确保输入参数最大位移值是合法的

ensureOffsetInRange(largestOffset)

// append the messages

// 执行真正的写入

val appendedBytes = log.append(records)

trace(s"Appended $appendedBytes to ${log.file} at end offset $largestOffset")

// Update the in memory max timestamp and corresponding offset.

// 更新日志段的最大时间戳以及最大时间戳所属消息的位移值属性

if (largestTimestamp > maxTimestampSoFar) {

maxTimestampSoFar = largestTimestamp

offsetOfMaxTimestampSoFar = shallowOffsetOfMaxTimestamp

}

// append an entry to the index (if needed)

// 当已写入字节数超过了 4KB 之后,append 方法会调用索引对象的 append 方法新增索引项,同时清空已写入字节数

if (bytesSinceLastIndexEntry > indexIntervalBytes) {

offsetIndex.append(largestOffset, physicalPosition)

timeIndex.maybeAppend(maxTimestampSoFar, offsetOfMaxTimestampSoFar)

bytesSinceLastIndexEntry = 0

}

bytesSinceLastIndexEntry += records.sizeInBytes

}

}

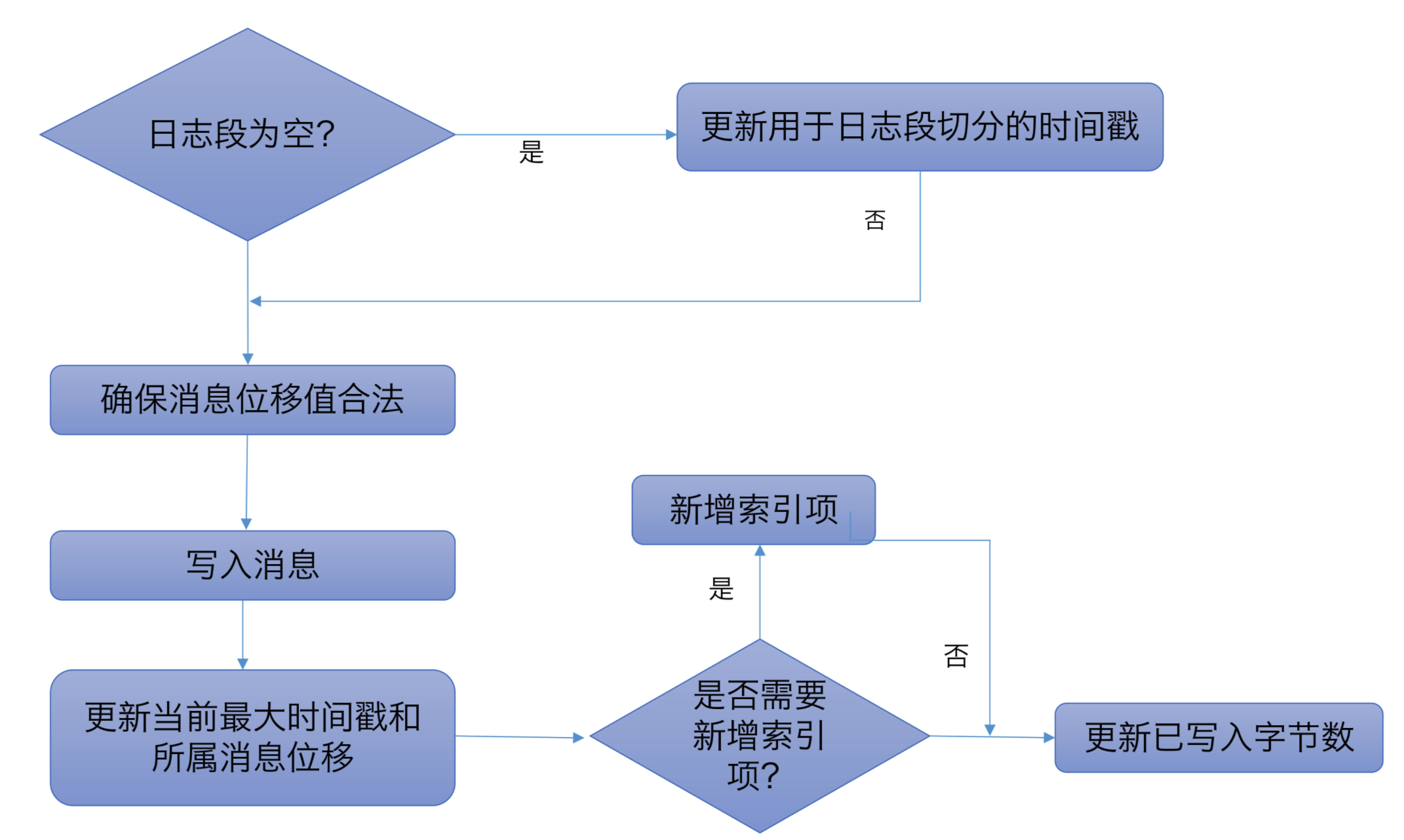

这个方法主要做了那么几件事:

- 判断日志段是否为空,不为空则往下进行操作

- 调用ensureOffsetInRange方法,确保输入参数最大位移值是合法的。

- 调用 FileRecords 的 append 方法执行真正的写入。

- 更新日志段的最大时间戳以及最大时间戳所属消息的位移值属性。

- 更新索引项和写入的字节数,日志段每写入 4KB 数据就要写入一个索引项。当已写入字节数超过了 4KB 之后,append 方法会调用索引对象的 append 方法新增索引项,同时清空已写入字节数。

我们下面再看看ensureOffsetInRange方法是怎么校验最大位移的:

private def ensureOffsetInRange(offset: Long): Unit = {

if (!canConvertToRelativeOffset(offset))

throw new LogSegmentOffsetOverflowException(this, offset)

}

这个方法最终会调用到AbstractIndex的toRelative方法中:

private def toRelative(offset: Long): Option[Int] = {

val relativeOffset = offset - baseOffset

if (relativeOffset < 0 || relativeOffset > Int.MaxValue)

None

else

Some(relativeOffset.toInt)

}

可见这个方法会将offset和baseOffset做对比,当offset小于baseOffset或者当offset和baseOffset相减后大于Int的最大值,那么都是异常的情况,那么这时就会抛出LogSegmentOffsetOverflowException异常。

read

def read(startOffset: Long,

maxSize: Int,

maxPosition: Long = size,

minOneMessage: Boolean = false): FetchDataInfo = {

if (maxSize < 0)

throw new IllegalArgumentException(s"Invalid max size $maxSize for log read from segment $log")

// 将位移索引转换成物理文件位置索引

val startOffsetAndSize = translateOffset(startOffset)

// if the start position is already off the end of the log, return null

if (startOffsetAndSize == null)

return null

val startPosition = startOffsetAndSize.position

val offsetMetadata = LogOffsetMetadata(startOffset, this.baseOffset, startPosition)

val adjustedMaxSize =

if (minOneMessage) math.max(maxSize, startOffsetAndSize.size)

else maxSize

// return a log segment but with zero size in the case below

if (adjustedMaxSize == 0)

return FetchDataInfo(offsetMetadata, MemoryRecords.EMPTY)

// calculate the length of the message set to read based on whether or not they gave us a maxOffset

// 计算要读取的总字节数

val fetchSize: Int = min((maxPosition - startPosition).toInt, adjustedMaxSize)

// log.slice读取消息后封装成FetchDataInfo返回

FetchDataInfo(offsetMetadata, log.slice(startPosition, fetchSize),

firstEntryIncomplete = adjustedMaxSize < startOffsetAndSize.size)

}

这段代码中,主要做了这几件事:

-

调用 translateOffset 方法定位要读取的起始文件位置 (startPosition)。

举个例子,假设 maxSize=100,maxPosition=300,startPosition=250,那么 read 方法只能读取 50 字节,因为 maxPosition - startPosition = 50。我们把它和 maxSize 参数相比较,其中的最小值就是最终能够读取的总字节数。

-

调用 FileRecords 的 slice 方法,从指定位置读取指定大小的消息集合。

recover

这个方法是恢复日志段,Broker 在启动时会从磁盘上加载所有日志段信息到内存中,并创建相应的 LogSegment 对象实例。在这个过程中,它需要执行一系列的操作。

def recover(producerStateManager: ProducerStateManager, leaderEpochCache: Option[LeaderEpochFileCache] = None): Int = {

//情况索引文件

offsetIndex.reset()

timeIndex.reset()

txnIndex.reset()

var validBytes = 0

var lastIndexEntry = 0

maxTimestampSoFar = RecordBatch.NO_TIMESTAMP

try {

//遍历日志段中所有消息集合

for (batch <- log.batches.asScala) {

// 校验

batch.ensureValid()

// 校验消息中最后一条消息的位移不能越界

ensureOffsetInRange(batch.lastOffset)

// The max timestamp is exposed at the batch level, so no need to iterate the records

// 获取最大时间戳及所属消息位移

if (batch.maxTimestamp > maxTimestampSoFar) {

maxTimestampSoFar = batch.maxTimestamp

offsetOfMaxTimestampSoFar = batch.lastOffset

}

// Build offset index

// 当已写入字节数超过了 4KB 之后,调用索引对象的 append 方法新增索引项,同时清空已写入字节数

if (validBytes - lastIndexEntry > indexIntervalBytes) {

offsetIndex.append(batch.lastOffset, validBytes)

timeIndex.maybeAppend(maxTimestampSoFar, offsetOfMaxTimestampSoFar)

lastIndexEntry = validBytes

}

// 更新总消息字节数

validBytes += batch.sizeInBytes()

// 更新Porducer状态和Leader Epoch缓存

if (batch.magic >= RecordBatch.MAGIC_VALUE_V2) {

leaderEpochCache.foreach { cache =>

if (batch.partitionLeaderEpoch > 0 && cache.latestEpoch.forall(batch.partitionLeaderEpoch > _))

cache.assign(batch.partitionLeaderEpoch, batch.baseOffset)

}

updateProducerState(producerStateManager, batch)

}

}

} catch {

case e@ (_: CorruptRecordException | _: InvalidRecordException) =>

warn("Found invalid messages in log segment %s at byte offset %d: %s. %s"

.format(log.file.getAbsolutePath, validBytes, e.getMessage, e.getCause))

}

// 遍历完后将 遍历累加的值和日志总字节数比较,

val truncated = log.sizeInBytes - validBytes

if (truncated > 0)

debug(s"Truncated $truncated invalid bytes at the end of segment ${log.file.getAbsoluteFile} during recovery")

//执行日志截断操作

log.truncateTo(validBytes)

// 调整索引文件大小

offsetIndex.trimToValidSize()

// A normally closed segment always appends the biggest timestamp ever seen into log segment, we do this as well.

timeIndex.maybeAppend(maxTimestampSoFar, offsetOfMaxTimestampSoFar, skipFullCheck = true)

timeIndex.trimToValidSize()

truncated

}

这个方法主要做了以下几件事:

- 清空索引文件

- 遍历日吹端中多有消息集合

- 校验日志段中的消息

- 获取最大时间戳及所属消息位移

- 更新索引项

- 更新总消息字节数

- 更新Porducer状态和Leader Epoch缓存

- 执行消息日志索引文件截断

- 调整索引文件大小

下面我们进入到truncateTo方法中,看一下截断操作是怎么做的:

public int truncateTo(int targetSize) throws IOException {

int originalSize = sizeInBytes();

// 要截断的目标大小不能超过当前文件的大小

if (targetSize > originalSize || targetSize < 0)

throw new KafkaException("Attempt to truncate log segment " + file + " to " + targetSize + " bytes failed, " +

" size of this log segment is " + originalSize + " bytes.");

//如果目标大小小于当前文件大小,那么执行截断

if (targetSize < (int) channel.size()) {

channel.truncate(targetSize);

size.set(targetSize);

}

return originalSize - targetSize;

}

Kafka 会将日志段当前总字节数和刚刚累加的已读取字节数进行比较,如果发现前者比后者大,说明日志段写入了一些非法消息,需要执行截断操作,将日志段大小调整回合法的数值。

truncateTo

这个方法会将日志段中的数据强制截断到指定的位移处。

def truncateTo(offset: Long): Int = {

// Do offset translation before truncating the index to avoid needless scanning

// in case we truncate the full index

// 将位置值转换成物理文件位置

val mapping = translateOffset(offset)

// 移动索引到指定位置

offsetIndex.truncateTo(offset)

timeIndex.truncateTo(offset)

txnIndex.truncateTo(offset)

// After truncation, reset and allocate more space for the (new currently active) index

// 因为位置变了,为了节省内存,做一次resize操作

offsetIndex.resize(offsetIndex.maxIndexSize)

timeIndex.resize(timeIndex.maxIndexSize)

val bytesTruncated = if (mapping == null) 0 else log.truncateTo(mapping.position)

// 如果调整到初始位置,那么重新记录一下创建时间

if (log.sizeInBytes == 0) {

created = time.milliseconds

rollingBasedTimestamp = None

}

//调整索引项

bytesSinceLastIndexEntry = 0

//调整最大的索引位置

if (maxTimestampSoFar >= 0)

loadLargestTimestamp()

bytesTruncated

}

- 将位置值转换成物理文件位置

- 移动索引到指定位置,位移索引文件、时间戳索引文件、已中止事务索引文件等位置

- 将索引做一次resize操作,节省内存空间

- 调整日志段日志位置

我们到OffsetIndex的truncateTo方法中看一下:

override def truncateTo(offset: Long): Unit = {

inLock(lock) {

val idx = mmap.duplicate

//根据指定位移返回消息中位移

val slot = largestLowerBoundSlotFor(idx, offset, IndexSearchType.KEY)

/* There are 3 cases for choosing the new size

* 1) if there is no entry in the index <= the offset, delete everything

* 2) if there is an entry for this exact offset, delete it and everything larger than it

* 3) if there is no entry for this offset, delete everything larger than the next smallest

*/

val newEntries =

//如果没有消息的位移值小于指定位移值,那么就直接从头开始

if(slot < 0)

0

// 跳到执行的位移位置

else if(relativeOffset(idx, slot) == offset - baseOffset)

slot

// 指定位移位置大于消息中所有位移,那么跳到消息位置中最大的一个的下一个位置

else

slot + 1

// 执行位置跳转

truncateToEntries(newEntries)

}

}

- 根据指定位移返回消息中的槽位。

- 如果返回的槽位小于零,说明没有消息位移小于指定位移,所以newEntries返回0。

- 如果指定位移在消息位移中,那么返回slot槽位。

- 如果指定位移位置大于消息中所有位移,那么跳到消息位置中最大的一个的下一个位置。

讲完了LogSegment之后,我们在来看看Log。

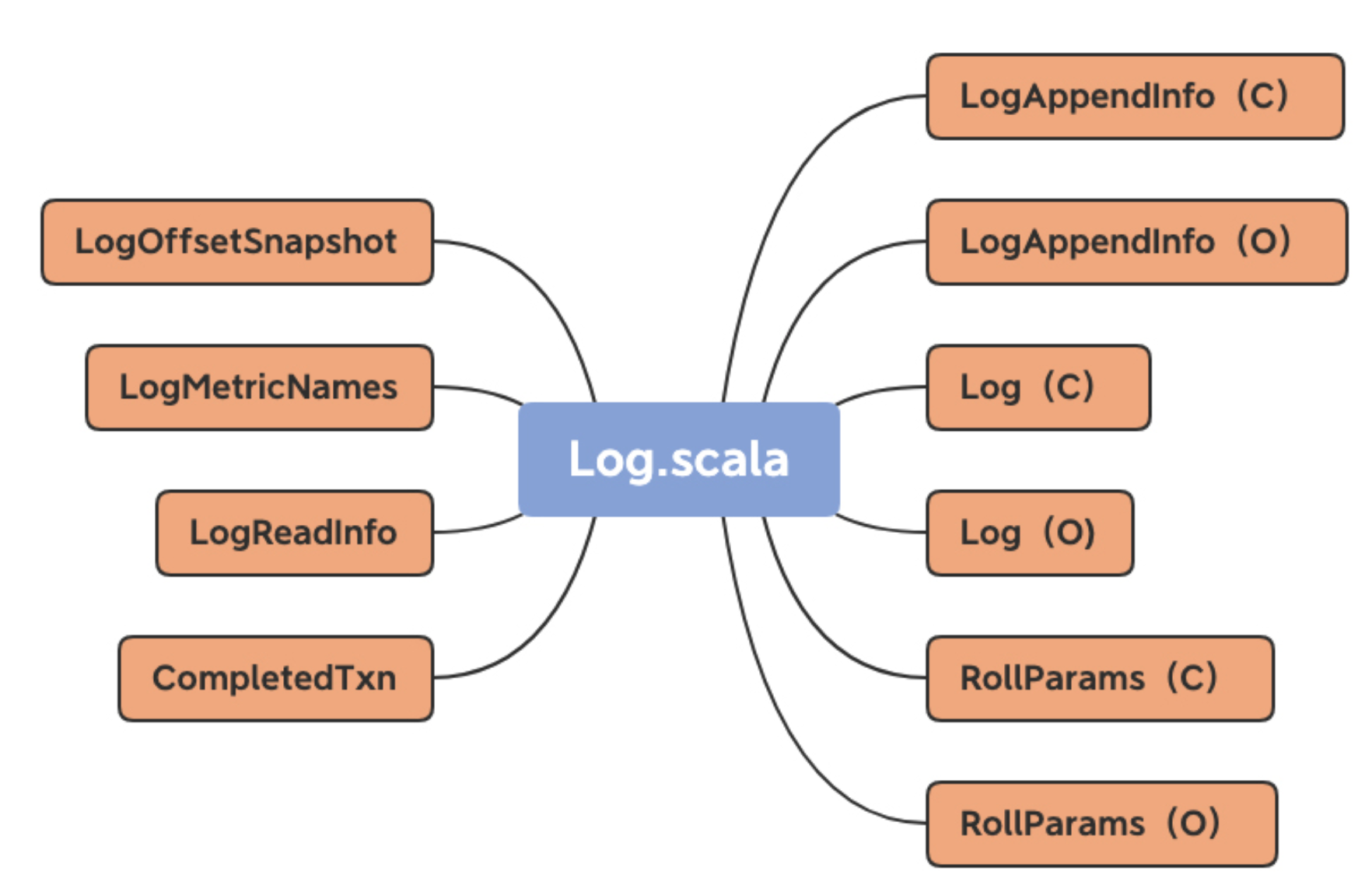

Log

Log 源码结构

Log.scala定义了 10 个类和对象,图中括号里的 C 表示 Class,O 表示 Object。

我们主要看的是Log类:

Log类的定义

class Log(@volatile var dir: File,

@volatile var config: LogConfig,

@volatile var logStartOffset: Long,

@volatile var recoveryPoint: Long,

scheduler: Scheduler,

brokerTopicStats: BrokerTopicStats,

val time: Time,

val maxProducerIdExpirationMs: Int,

val producerIdExpirationCheckIntervalMs: Int,

val topicPartition: TopicPartition,

val producerStateManager: ProducerStateManager,

logDirFailureChannel: LogDirFailureChannel) extends Logging with KafkaMetricsGroup {

……

}

主要的属性有两个dir和logStartOffset,分别表示个日志所在的文件夹路径,也就是主题分区的路径以及日志的当前最早位移。

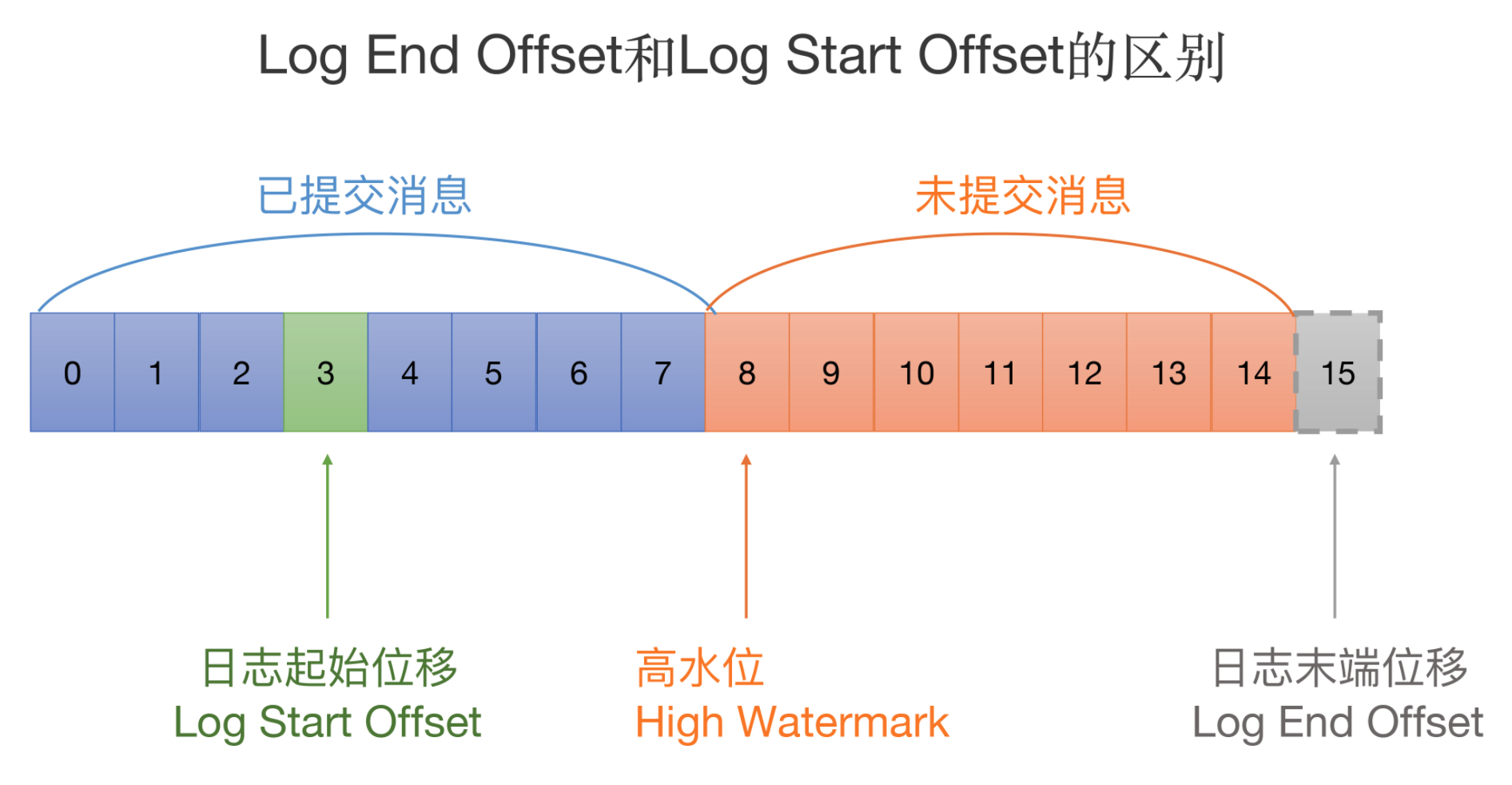

在kafka中,我们用Log End Offset(LEO)表示日志下一条待插入消息的位移值,也就是日志的末端位移。

Log Start Offset表示日志当前对外可见的最早一条消息的位移值。

再看看其他属性:

@volatile private var nextOffsetMetadata: LogOffsetMetadata = _

@volatile private var highWatermarkMetadata: LogOffsetMetadata = LogOffsetMetadata(logStartOffset)

private val segments: ConcurrentNavigableMap[java.lang.Long, LogSegment] = new ConcurrentSkipListMap[java.lang.Long, LogSegment]

@volatile var leaderEpochCache: Option[LeaderEpochFileCache] = None

nextOffsetMetadata基本上等同于LEO。

highWatermarkMetadata是分区日志高水位值。

segments保存了分区日志下所有的日志段信息。

Leader Epoch Cache 对象保存了分区 Leader 的 Epoch 值与对应位移值的映射关系。

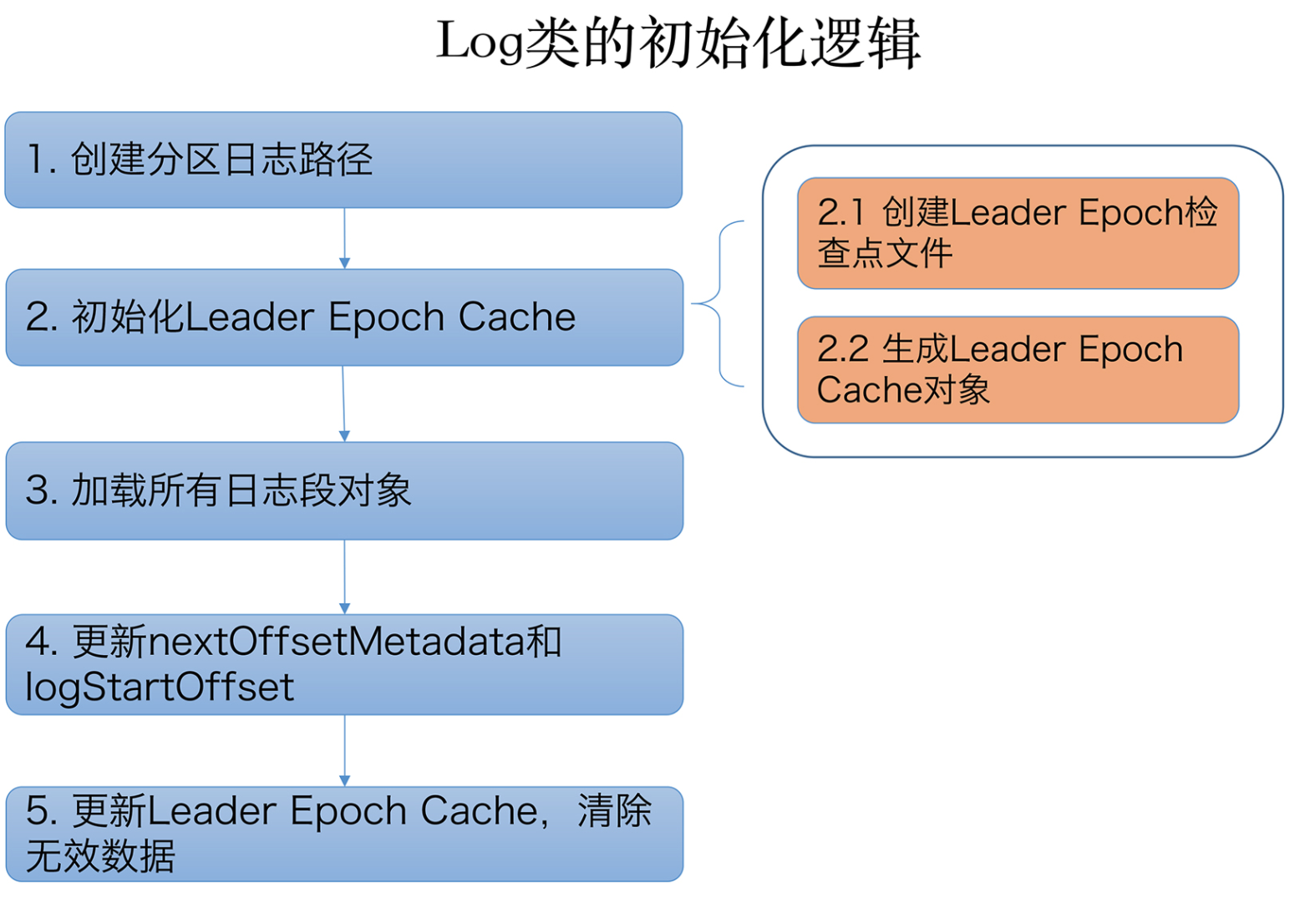

Log类初始化代码

locally {

val startMs = time.milliseconds

// create the log directory if it doesn't exist

//创建分区日志路径

Files.createDirectories(dir.toPath)

//初始化Leader Epoch Cache

initializeLeaderEpochCache()

//加载所有日志段对象

val nextOffset = loadSegments()

/* Calculate the offset of the next message */

nextOffsetMetadata = LogOffsetMetadata(nextOffset, activeSegment.baseOffset, activeSegment.size)

leaderEpochCache.foreach(_.truncateFromEnd(nextOffsetMetadata.messageOffset))

logStartOffset = math.max(logStartOffset, segments.firstEntry.getValue.baseOffset)

// The earliest leader epoch may not be flushed during a hard failure. Recover it here.

//更新Leader Epoch Cache,清除无效数据

leaderEpochCache.foreach(_.truncateFromStart(logStartOffset))

// Any segment loading or recovery code must not use producerStateManager, so that we can build the full state here

// from scratch.

if (!producerStateManager.isEmpty)

throw new IllegalStateException("Producer state must be empty during log initialization")

loadProducerState(logEndOffset, reloadFromCleanShutdown = hasCleanShutdownFile)

info(s"Completed load of log with ${segments.size} segments, log start offset $logStartOffset and " +

s"log end offset $logEndOffset in ${time.milliseconds() - startMs} ms")

}

这个代码里面主要做了这几件事:

Leader Epoch暂且不表,我们看看loadSegments是如何加载日志段的。

loadSegments

private def loadSegments(): Long = {

// first do a pass through the files in the log directory and remove any temporary files

// and find any interrupted swap operations

//移除上次 Failure 遗留下来的各种临时文件(包括.cleaned、.swap、.deleted 文件等)

val swapFiles = removeTempFilesAndCollectSwapFiles()

// Now do a second pass and load all the log and index files.

// We might encounter legacy log segments with offset overflow (KAFKA-6264). We need to split such segments. When

// this happens, restart loading segment files from scratch.

//清空所有日志段对象,并且再次遍历分区路径,重建日志段 segments Map 并删除无对应日志段文件的孤立索引文件。

retryOnOffsetOverflow {

// In case we encounter a segment with offset overflow, the retry logic will split it after which we need to retry

// loading of segments. In that case, we also need to close all segments that could have been left open in previous

// call to loadSegmentFiles().

//先清空日志段信息

logSegments.foreach(_.close())

segments.clear()

//从文件中装载日志段

loadSegmentFiles()

}

// Finally, complete any interrupted swap operations. To be crash-safe,

// log files that are replaced by the swap segment should be renamed to .deleted

// before the swap file is restored as the new segment file.

//完成未完成的 swap 操作

completeSwapOperations(swapFiles)

if (!dir.getAbsolutePath.endsWith(Log.DeleteDirSuffix)) {

val nextOffset = retryOnOffsetOverflow {

recoverLog()

}

// reset the index size of the currently active log segment to allow more entries

activeSegment.resizeIndexes(config.maxIndexSize)

nextOffset

} else {

if (logSegments.isEmpty) {

addSegment(LogSegment.open(dir = dir,

baseOffset = 0,

config,

time = time,

fileAlreadyExists = false,

initFileSize = this.initFileSize,

preallocate = false))

}

0

}

}

这个方法首先会调用removeTempFilesAndCollectSwapFiles方法移除上次 Failure 遗留下来的各种临时文件(包括.cleaned、.swap、.deleted 文件等)。

然后它会清空所有日志段对象,并且再次遍历分区路径,重建日志段 segments Map 并删除无对应日志段文件的孤立索引文件。

待执行完这两次遍历之后,它会完成未完成的 swap 操作,即调用 completeSwapOperations 方法。等这些都做完之后,再调用 recoverLog 方法恢复日志段对象,然后返回恢复之后的分区日志 LEO 值。

removeTempFilesAndCollectSwapFiles

private def removeTempFilesAndCollectSwapFiles(): Set[File] = {

// 在方法内部定义一个名为deleteIndicesIfExist的方法,用于删除日志文件对应的索引文件

def deleteIndicesIfExist(baseFile: File, suffix: String = ""): Unit = {

info(s"Deleting index files with suffix $suffix for baseFile $baseFile")

val offset = offsetFromFile(baseFile)

Files.deleteIfExists(Log.offsetIndexFile(dir, offset, suffix).toPath)

Files.deleteIfExists(Log.timeIndexFile(dir, offset, suffix).toPath)

Files.deleteIfExists(Log.transactionIndexFile(dir, offset, suffix).toPath)

}

var swapFiles = Set[File]()

var cleanFiles = Set[File]()

var minCleanedFileOffset = Long.MaxValue

for (file <- dir.listFiles if file.isFile) {

if (!file.canRead)

throw new IOException(s"Could not read file $file")

val filename = file.getName

//如果是以.deleted结尾的文件

if (filename.endsWith(DeletedFileSuffix)) {

debug(s"Deleting stray temporary file ${file.getAbsolutePath}")

// 说明是上次Failure遗留下来的文件,直接删除

Files.deleteIfExists(file.toPath)

// 如果是以.cleaned结尾的文件

} else if (filename.endsWith(CleanedFileSuffix)) {

minCleanedFileOffset = Math.min(offsetFromFileName(filename), minCleanedFileOffset)

cleanFiles += file

// .swap结尾的文件

} else if (filename.endsWith(SwapFileSuffix)) {

// we crashed in the middle of a swap operation, to recover:

// if a log, delete the index files, complete the swap operation later

// if an index just delete the index files, they will be rebuilt

//更改文件名

val baseFile = new File(CoreUtils.replaceSuffix(file.getPath, SwapFileSuffix, ""))

info(s"Found file ${file.getAbsolutePath} from interrupted swap operation.")

//如果该.swap文件原来是索引文件

if (isIndexFile(baseFile)) {

// 删除原来的索引文件

deleteIndicesIfExist(baseFile)

// 如果该.swap文件原来是日志文件

} else if (isLogFile(baseFile)) {

// 删除掉原来的索引文件

deleteIndicesIfExist(baseFile)

// 加入待恢复的.swap文件集合中

swapFiles += file

}

}

}

// KAFKA-6264: Delete all .swap files whose base offset is greater than the minimum .cleaned segment offset. Such .swap

// files could be part of an incomplete split operation that could not complete. See Log#splitOverflowedSegment

// for more details about the split operation.

// 从待恢复swap集合中找出那些起始位移值大于minCleanedFileOffset值的文件,直接删掉这些无效的.swap文件

val (invalidSwapFiles, validSwapFiles) = swapFiles.partition(file => offsetFromFile(file) >= minCleanedFileOffset)

invalidSwapFiles.foreach { file =>

debug(s"Deleting invalid swap file ${file.getAbsoluteFile} minCleanedFileOffset: $minCleanedFileOffset")

val baseFile = new File(CoreUtils.replaceSuffix(file.getPath, SwapFileSuffix, ""))

deleteIndicesIfExist(baseFile, SwapFileSuffix)

Files.deleteIfExists(file.toPath)

}

// Now that we have deleted all .swap files that constitute an incomplete split operation, let's delete all .clean files

// 清除所有待删除文件集合中的文件

cleanFiles.foreach { file =>

debug(s"Deleting stray .clean file ${file.getAbsolutePath}")

Files.deleteIfExists(file.toPath)

}

// 最后返回当前有效的.swap文件集合

validSwapFiles

}

- 定义了一个内部方法deleteIndicesIfExist,用于删除日志文件对应的索引文件。

- 遍历文件列表删除遗留文件,并筛选出.cleaned结尾的文件和.swap结尾的文件。

- 根据minCleanedFileOffset删除无效的.swap文件。

- 最后返回当前有效的.swap文件集合

处理完了removeTempFilesAndCollectSwapFiles方法,然后进入到loadSegmentFiles方法中。

loadSegmentFiles

private def loadSegmentFiles(): Unit = {

// load segments in ascending order because transactional data from one segment may depend on the

// segments that come before it

for (file <- dir.listFiles.sortBy(_.getName) if file.isFile) {

//如果不是以.log结尾的文件,如.index、.timeindex、.txnindex

if (isIndexFile(file)) {

// if it is an index file, make sure it has a corresponding .log file

val offset = offsetFromFile(file)

val logFile = Log.logFile(dir, offset)

// 确保存在对应的日志文件,否则记录一个警告,并删除该索引文件

if (!logFile.exists) {

warn(s"Found an orphaned index file ${file.getAbsolutePath}, with no corresponding log file.")

Files.deleteIfExists(file.toPath)

}

// 如果是以.log结尾的文件

} else if (isLogFile(file)) {

// if it's a log file, load the corresponding log segment

val baseOffset = offsetFromFile(file)

val timeIndexFileNewlyCreated = !Log.timeIndexFile(dir, baseOffset).exists()

// 创建对应的LogSegment对象实例,并加入segments中

val segment = LogSegment.open(dir = dir,

baseOffset = baseOffset,

config,

time = time,

fileAlreadyExists = true)

try segment.sanityCheck(timeIndexFileNewlyCreated)

catch {

case _: NoSuchFileException =>

error(s"Could not find offset index file corresponding to log file ${segment.log.file.getAbsolutePath}, " +

"recovering segment and rebuilding index files...")

recoverSegment(segment)

case e: CorruptIndexException =>

warn(s"Found a corrupted index file corresponding to log file ${segment.log.file.getAbsolutePath} due " +

s"to ${e.getMessage}}, recovering segment and rebuilding index files...")

recoverSegment(segment)

}

addSegment(segment)

}

}

}

- 遍历文件目录

- 如果文件是索引文件,那么检查一下是否存在相应的日志文件。

- 如果是日志文件,那么创建对应的LogSegment对象实例,并加入segments中。

接下来调用completeSwapOperations方法处理有效.swap 文件集合。

completeSwapOperations

private def completeSwapOperations(swapFiles: Set[File]): Unit = {

// 遍历所有有效.swap文件

for (swapFile <- swapFiles) {

val logFile = new File(CoreUtils.replaceSuffix(swapFile.getPath, SwapFileSuffix, ""))

val baseOffset = offsetFromFile(logFile)// 拿到日志文件的起始位移值

// 创建对应的LogSegment实例

val swapSegment = LogSegment.open(swapFile.getParentFile,

baseOffset = baseOffset,

config,

time = time,

fileSuffix = SwapFileSuffix)

info(s"Found log file ${swapFile.getPath} from interrupted swap operation, repairing.")

// 执行日志段恢复操作

recoverSegment(swapSegment)

// We create swap files for two cases:

// (1) Log cleaning where multiple segments are merged into one, and

// (2) Log splitting where one segment is split into multiple.

//

// Both of these mean that the resultant swap segments be composed of the original set, i.e. the swap segment

// must fall within the range of existing segment(s). If we cannot find such a segment, it means the deletion

// of that segment was successful. In such an event, we should simply rename the .swap to .log without having to

// do a replace with an existing segment.

// 确认之前删除日志段是否成功,是否还存在老的日志段文件

val oldSegments = logSegments(swapSegment.baseOffset, swapSegment.readNextOffset).filter { segment =>

segment.readNextOffset > swapSegment.baseOffset

}

// 如果存在,直接把.swap文件重命名成.log

replaceSegments(Seq(swapSegment), oldSegments.toSeq, isRecoveredSwapFile = true)

}

}

- 遍历所有有效.swap文件;

- 创建对应的LogSegment实例;

- 执行日志段恢复操作,恢复部分的源码已经在LogSegment里面讲了;

- 把.swap文件重命名成.log;

最后是执行recoverLog部分代码。

recoverLog

private def recoverLog(): Long = {

// if we have the clean shutdown marker, skip recovery

// 如果不存在以.kafka_cleanshutdown结尾的文件。通常都不存在

if (!hasCleanShutdownFile) {

// okay we need to actually recover this log

// 获取到上次恢复点以外的所有unflushed日志段对象

val unflushed = logSegments(this.recoveryPoint, Long.MaxValue).toIterator

var truncated = false

// 遍历这些unflushed日志段

while (unflushed.hasNext && !truncated) {

val segment = unflushed.next

info(s"Recovering unflushed segment ${segment.baseOffset}")

val truncatedBytes =

try {

// 执行恢复日志段操作

recoverSegment(segment, leaderEpochCache)

} catch {

case _: InvalidOffsetException =>

val startOffset = segment.baseOffset

warn("Found invalid offset during recovery. Deleting the corrupt segment and " +

s"creating an empty one with starting offset $startOffset")

segment.truncateTo(startOffset)

}

if (truncatedBytes > 0) {// 如果有无效的消息导致被截断的字节数不为0,直接删除剩余的日志段对象

// we had an invalid message, delete all remaining log

warn(s"Corruption found in segment ${segment.baseOffset}, truncating to offset ${segment.readNextOffset}")

removeAndDeleteSegments(unflushed.toList, asyncDelete = true)

truncated = true

}

}

}

// 这些都做完之后,如果日志段集合不为空

if (logSegments.nonEmpty) {

val logEndOffset = activeSegment.readNextOffset

if (logEndOffset < logStartOffset) {

warn(s"Deleting all segments because logEndOffset ($logEndOffset) is smaller than logStartOffset ($logStartOffset). " +

"This could happen if segment files were deleted from the file system.")

removeAndDeleteSegments(logSegments, asyncDelete = true)

}

}

// 这些都做完之后,如果日志段集合为空了

if (logSegments.isEmpty) {

// no existing segments, create a new mutable segment beginning at logStartOffset

// 至少创建一个新的日志段,以logStartOffset为日志段的起始位移,并加入日志段集合中

addSegment(LogSegment.open(dir = dir,

baseOffset = logStartOffset,

config,

time = time,

fileAlreadyExists = false,

initFileSize = this.initFileSize,

preallocate = config.preallocate))

}

// 更新上次恢复点属性,并返回

recoveryPoint = activeSegment.readNextOffset

recoveryPoint

}