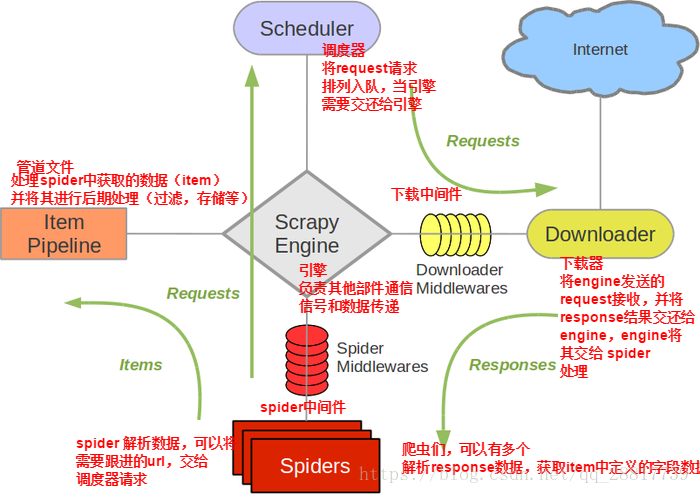

python scrapy架构图:https://www.cnblogs.com/iamjianghao/p/10862947.htm

一.下载中间件(Downloader Middlewares) 位于scrapy引擎和下载器之间的一层组件。

作用: (1)引擎将请求传递给下载器过程中, 下载中间件可以对请求进行一系列处理。比如设置请求的 User-Agent,设置代理等 (2)在下载器完成将Response传递给引擎中,下载中间件可以对响应进行一系列处理。比如进行gzip解压等。 我们主要使用下载中间件处理请求,一般会对请求设置随机的User-Agent ,设置随机的代理。目的在于防止爬取网站的反爬虫策略。

二.UA池:User-Agent池

作用:尽可能多的将scrapy工程中的请求伪装成不同类型的浏览器身份。 操作流程: 1.在下载中间件中拦截请求 2.将拦截到的请求的请求头信息中的UA进行篡改伪装 3.在配置文件中开启下载中间件

#导包 from scrapy.contrib.downloadermiddleware.useragent import UserAgentMiddleware import random #UA池代码的编写(单独给UA池封装一个下载中间件的一个类) class RandomUserAgent(UserAgentMiddleware): def process_request(self, request, spider): #从列表中随机抽选出一个ua值 ua = random.choice(user_agent_list) #ua值进行当前拦截到请求的ua的写入操作 request.headers.setdefault('User-Agent',ua) user_agent_list = [ "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 " "(KHTML, like Gecko) Chrome/22.0.1207.1 Safari/537.1", "Mozilla/5.0 (X11; CrOS i686 2268.111.0) AppleWebKit/536.11 " "(KHTML, like Gecko) Chrome/20.0.1132.57 Safari/536.11", "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.6 " "(KHTML, like Gecko) Chrome/20.0.1092.0 Safari/536.6", "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.6 " "(KHTML, like Gecko) Chrome/20.0.1090.0 Safari/536.6", "Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/537.1 " "(KHTML, like Gecko) Chrome/19.77.34.5 Safari/537.1", "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/536.5 " "(KHTML, like Gecko) Chrome/19.0.1084.9 Safari/536.5", "Mozilla/5.0 (Windows NT 6.0) AppleWebKit/536.5 " "(KHTML, like Gecko) Chrome/19.0.1084.36 Safari/536.5", "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 " "(KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3", "Mozilla/5.0 (Windows NT 5.1) AppleWebKit/536.3 " "(KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3", "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_8_0) AppleWebKit/536.3 " "(KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3", "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 " "(KHTML, like Gecko) Chrome/19.0.1062.0 Safari/536.3", "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 " "(KHTML, like Gecko) Chrome/19.0.1062.0 Safari/536.3", "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 " "(KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3", "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 " "(KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3", "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/536.3 " "(KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3", "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 " "(KHTML, like Gecko) Chrome/19.0.1061.0 Safari/536.3", "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/535.24 " "(KHTML, like Gecko) Chrome/19.0.1055.1 Safari/535.24", "Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/535.24 " "(KHTML, like Gecko) Chrome/19.0.1055.1 Safari/535.24" ]

三。代理池

作用:尽可能多的将scrapy工程中的请求的IP设置成不同的。 操作流程: 1.在下载中间件中拦截请求 2.将拦截到的请求的IP修改成某一代理IP 3.在配置文件中开启下载中间件

#批量对拦截到的请求进行ip更换 #单独封装下载中间件类 class Proxy(object): def process_request(self, request, spider): #对拦截到请求的url进行判断(协议头到底是http还是https) #request.url返回值:http://www.xxx.com h = request.url.split(':')[0] #请求的协议头 if h == 'https': ip = random.choice(PROXY_https) request.meta['proxy'] = 'https://'+ip else: ip = random.choice(PROXY_http) request.meta['proxy'] = 'http://' + ip #可被选用的代理IP PROXY_http = [ '153.180.102.104:80', '195.208.131.189:56055', ] PROXY_https = [ '120.83.49.90:9000', '95.189.112.214:35508', ]

四。示例代码演示

需求;爬取校花网名称以及图片地址(实现UA伪装以及ip代理池)

# -*- coding: utf-8 -*- import scrapy from xiaoHuaPro.items import XiaohuaproItem class XiaohuaSpider(scrapy.Spider): name = 'xiaohua' aaa = 110 # allowed_domains = ['www.xxx.com'] start_urls = ['http://www.521609.com/daxuemeinv/list81.html'] url = 'http://www.521609.com/daxuemeinv/list8%d.html' page = 1 def parse(self, response): li_list = response.xpath('//div[@class="index_img list_center"]/ul/li') for li in li_list: img_src = li.xpath('./a[1]/img/@src').extract_first() img_name = li.xpath('./a[1]/img/@alt').extract_first() item = XiaohuaproItem() item['name'] = img_name item['src'] = img_src yield item if self.page < 4: self.page += 1 new_url = format(self.url%self.page) #手动发起一个请求 yield scrapy.Request(url=new_url,callback=self.parse)

# -*- coding: utf-8 -*- import scrapy class XiaohuaproItem(scrapy.Item): # define the fields for your item here like: name = scrapy.Field() src = scrapy.Field()

# -*- coding: utf-8 -*- # Define here the models for your spider middleware # # See documentation in: # https://doc.scrapy.org/en/latest/topics/spider-middleware.html from scrapy import signals import random class XiaohuaproDownloaderMiddleware(object): # Not all methods need to be defined. If a method is not defined, # scrapy acts as if the downloader middleware does not modify the # passed objects. #拦截正常的请求 # user_agent_list = [ "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 " "(KHTML, like Gecko) Chrome/22.0.1207.1 Safari/537.1", "Mozilla/5.0 (X11; CrOS i686 2268.111.0) AppleWebKit/536.11 " "(KHTML, like Gecko) Chrome/20.0.1132.57 Safari/536.11", "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.6 " "(KHTML, like Gecko) Chrome/20.0.1092.0 Safari/536.6", "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.6 " "(KHTML, like Gecko) Chrome/20.0.1090.0 Safari/536.6", "Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/537.1 " "(KHTML, like Gecko) Chrome/19.77.34.5 Safari/537.1", "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/536.5 " "(KHTML, like Gecko) Chrome/19.0.1084.9 Safari/536.5", "Mozilla/5.0 (Windows NT 6.0) AppleWebKit/536.5 " "(KHTML, like Gecko) Chrome/19.0.1084.36 Safari/536.5", "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 " "(KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3", "Mozilla/5.0 (Windows NT 5.1) AppleWebKit/536.3 " "(KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3", "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_8_0) AppleWebKit/536.3 " "(KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3", "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 " "(KHTML, like Gecko) Chrome/19.0.1062.0 Safari/536.3", "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 " "(KHTML, like Gecko) Chrome/19.0.1062.0 Safari/536.3", "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 " "(KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3", "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 " "(KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3", "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/536.3 " "(KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3", "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 " "(KHTML, like Gecko) Chrome/19.0.1061.0 Safari/536.3", "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/535.24 " "(KHTML, like Gecko) Chrome/19.0.1055.1 Safari/535.24", "Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/535.24 " "(KHTML, like Gecko) Chrome/19.0.1055.1 Safari/535.24" ] PROXY_http = [ '153.180.102.104:80', '195.208.131.189:56055', ] PROXY_https = [ '120.83.49.90:9000', '95.189.112.214:35508', ] def process_request(self, request, spider): #使用UA池设置请求的UA request.headers['User-Agent'] = random.choice(self.user_agent_list) return None def process_response(self, request, response, spider): # Called with the response returned from the downloader. # Must either; # - return a Response object # - return a Request object # - or raise IgnoreRequest return response #拦截发生异常的请求对象 def process_exception(self, request, exception, spider): if request.url.split(':')[0] == 'http': request.meta['proxy'] = 'http://'+random.choice(self.PROXY_http) else: request.meta['proxy'] = 'https://' + random.choice(self.PROXY_https)

# -*- coding: utf-8 -*- # Define your item pipelines here # # Don't forget to add your pipeline to the ITEM_PIPELINES setting # See: https://doc.scrapy.org/en/latest/topics/item-pipeline.html class XiaohuaproPipeline(object): fp = None def open_spider(self,spider): self.fp = open('./xiaohua.txt','w',encoding='utf-8') def process_item(self, item, spider): self.fp.write(item['name']+':'+item['src']+' ') return item def close_spider(self,spider): self.fp.close()

BOT_NAME = 'xiaoHuaPro' SPIDER_MODULES = ['xiaoHuaPro.spiders'] NEWSPIDER_MODULE = 'xiaoHuaPro.spiders' USER_AGENT = 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/73.0.3683.103 Safari/537.36' ROBOTSTXT_OBEY = False DOWNLOADER_MIDDLEWARES = { 'xiaoHuaPro.middlewares.XiaohuaproDownloaderMiddleware': 543, } ITEM_PIPELINES = { 'xiaoHuaPro.pipelines.XiaohuaproPipeline': 300, }