=======================================================

前段时间为实验室负责审理AAAI 2022的会议稿件,感觉这个审稿模板还是不错的,这里保存一下,以后审理其他期刊、会议的时候可以参考这个模板。

========================================

Thank you for your contribution to AAAI 2022.

Edit Review

Paper ID

Paper Title

Track

Main Track

REVIEW QUESTIONS

1. {Summary} Please briefly summarize the main claims/contributions of the paper in your own words. (Please do not include your evaluation of the paper here). * (visible to authors during feedback, visible to authors after notification, visible to other reviewers, visible to meta-reviewers)

2. {Novelty} How novel are the concepts, problems addressed, or methods introduced in the paper? * (visible to authors during feedback, visible to authors after notification, visible to other reviewers, visible to meta-reviewers)

- Excellent: The main ideas of the paper are ground-breaking.

- Good: The paper makes non-trivial advances over the current state-of-the-art.

- Fair: The paper contributes some new ideas.

- Poor: The main ideas of the paper are not novel or represent incremental advances.

3. {Soundness} Is the paper technically sound?(visible to authors during feedback, visible to authors after notification, visible to other reviewers, visible to meta- reviewers)

- Excellent: I am confident that the paper is technically sound, and I have carefully checked the details.

- Good: The paper appears to be technically sound, but I have not carefully checked the details.

- Fair: The paper has minor, easily fixable, technical flaws that do not impact the validity of the main results.

- Poor: The paper has major technical flaws.

4. {Impact} How do you rate the likely impact of the paper on the AI research community? * (visible to authors during feedback, visible to authors after notification, visible to other reviewers, visible to meta-reviewers)

- Excellent: The paper is likely to have high impact across more than one subfield of AI.

- Good: The paper is likely to have high impact within a subfield of AI OR moderate impact across more than one subfield of AI.

- Fair: The paper is likely to have moderate impact within a subfield of AI.

- Poor: The paper is likely to have minimal impact on AI.

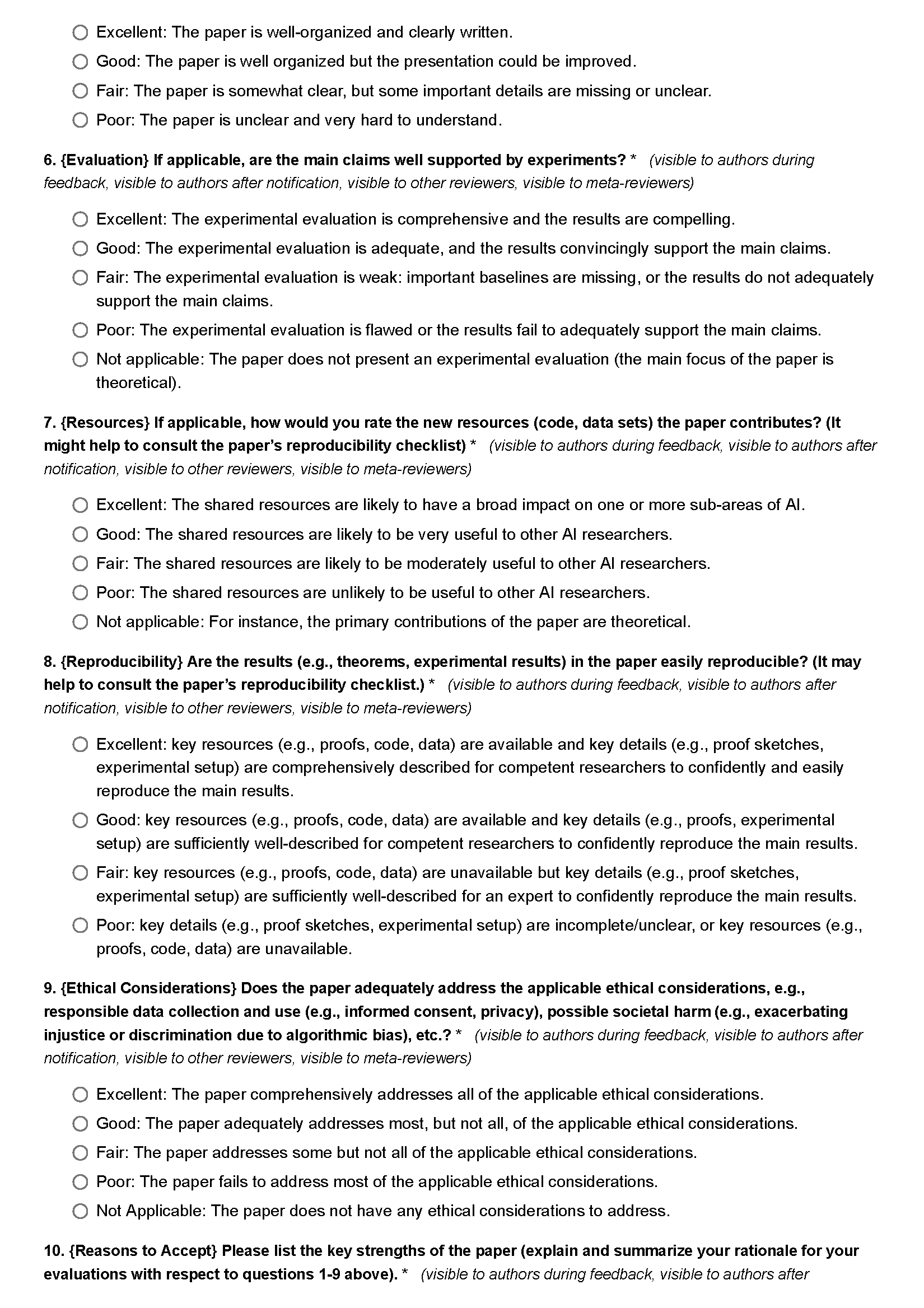

5. {Clarity} Is the paper well-organized and clearly written? * (visible to authors during feedback, visible to authors after notification, visible to other reviewers, visible to meta-reviewers)

- Excellent: The paper is well-organized and clearly written.

- Good: The paper is well organized but the presentation could be improved.

- Fair: The paper is somewhat clear, but some important details are missing or unclear.

- Poor: The paper is unclear and very hard to understand.

6. {Evaluation} If applicable, are the main claims well supported by experiments? * (visible to authors during feedback, visible to authors after notification, visible to other reviewers, visible to meta-reviewers)

- Excellent: The experimental evaluation is comprehensive and the results are compelling.

- Good: The experimental evaluation is adequate, and the results convincingly support the main claims.

- Fair: The experimental evaluation is weak: important baselines are missing, or the results do not adequately support the main claims.

- Poor: The experimental evaluation is flawed or the results fail to adequately support the main claims.

- Not applicable: The paper does not present an experimental evaluation (the main focus of the paper is theoretical).

7. {Resources} If applicable, how would you rate the new resources (code, data sets) the paper contributes? (It might help to consult the paper’s reproducibility checklist) * (visible to authors during feedback, visible to authors after notification, visible to other reviewers, visible to meta-reviewers)

- Excellent: The shared resources are likely to have a broad impact on one or more sub-areas of AI.

- Good: The shared resources are likely to be very useful to other AI researchers.

- Fair: The shared resources are likely to be moderately useful to other AI researchers.

- Poor: The shared resources are unlikely to be useful to other AI researchers.

- Not applicable: For instance, the primary contributions of the paper are theoretical.

8. {Reproducibility} Are the results (e.g., theorems, experimental results) in the paper easily reproducible? (It may help to consult the paper’s reproducibility checklist.) * (visible to authors during feedback, visible to authors after notification, visible to other reviewers, visible to meta-reviewers)

- Excellent: key resources (e.g., proofs, code, data) are available and key details (e.g., proof sketches, experimental setup) are comprehensively described for competent researchers to confidently and easily reproduce the main results.

- Good: key resources (e.g., proofs, code, data) are available and key details (e.g., proofs, experimental setup) are sufficiently well-described for competent researchers to confidently reproduce the main results.

- Fair: key resources (e.g., proofs, code, data) are unavailable but key details (e.g., proof sketches, experimental setup) are sufficiently well-described for an expert to confidently reproduce the main results.

- Poor: key details (e.g., proof sketches, experimental setup) are incomplete/unclear, or key resources (e.g., proofs, code, data) are unavailable.

9. {Ethical Considerations} Does the paper adequately address the applicable ethical considerations, e.g., responsible data collection and use (e.g., informed consent, privacy), possible societal harm (e.g., exacerbating injustice or discrimination due to algorithmic bias), etc.? * (visible to authors during feedback, visible to authors after notification, visible to other reviewers, visible to meta-reviewers)

- Excellent: The paper comprehensively addresses all of the applicable ethical considerations.

- Good: The paper adequately addresses most, but not all, of the applicable ethical considerations.

- Fair: The paper addresses some but not all of the applicable ethical considerations.

- Poor: The paper fails to address most of the applicable ethical considerations.

- Not Applicable: The paper does not have any ethical considerations to address.

10. {Reasons to Accept} Please list the key strengths of the paper (explain and summarize your rationale for your evaluations with respect to questions 1-9 above). * (visible to authors during feedback, visible to authors after notification, visible to other reviewers, visible to meta-reviewers)

11. {Reasons to Reject} Please list the key weaknesses of the paper (explain and summarize your rationale for your evaluations with respect to questions 1-9 above). * (visible to authors during feedback, visible to authors after notification, visible to other reviewers, visible to meta-reviewers)

{Questions for the Authors} Please provide questions that you would like the authors to answer during the author feedback period. Please number them. * (visible to authors during feedback, visible to authors after notification, visible to other reviewers, visible to meta-reviewers)

13. {Detailed Feedback for the Authors} Please provide other detailed, constructive, feedback to the authors. *

(visible to authors during feedback, visible to authors after notification, visible to other reviewers, visible to meta-reviewers)

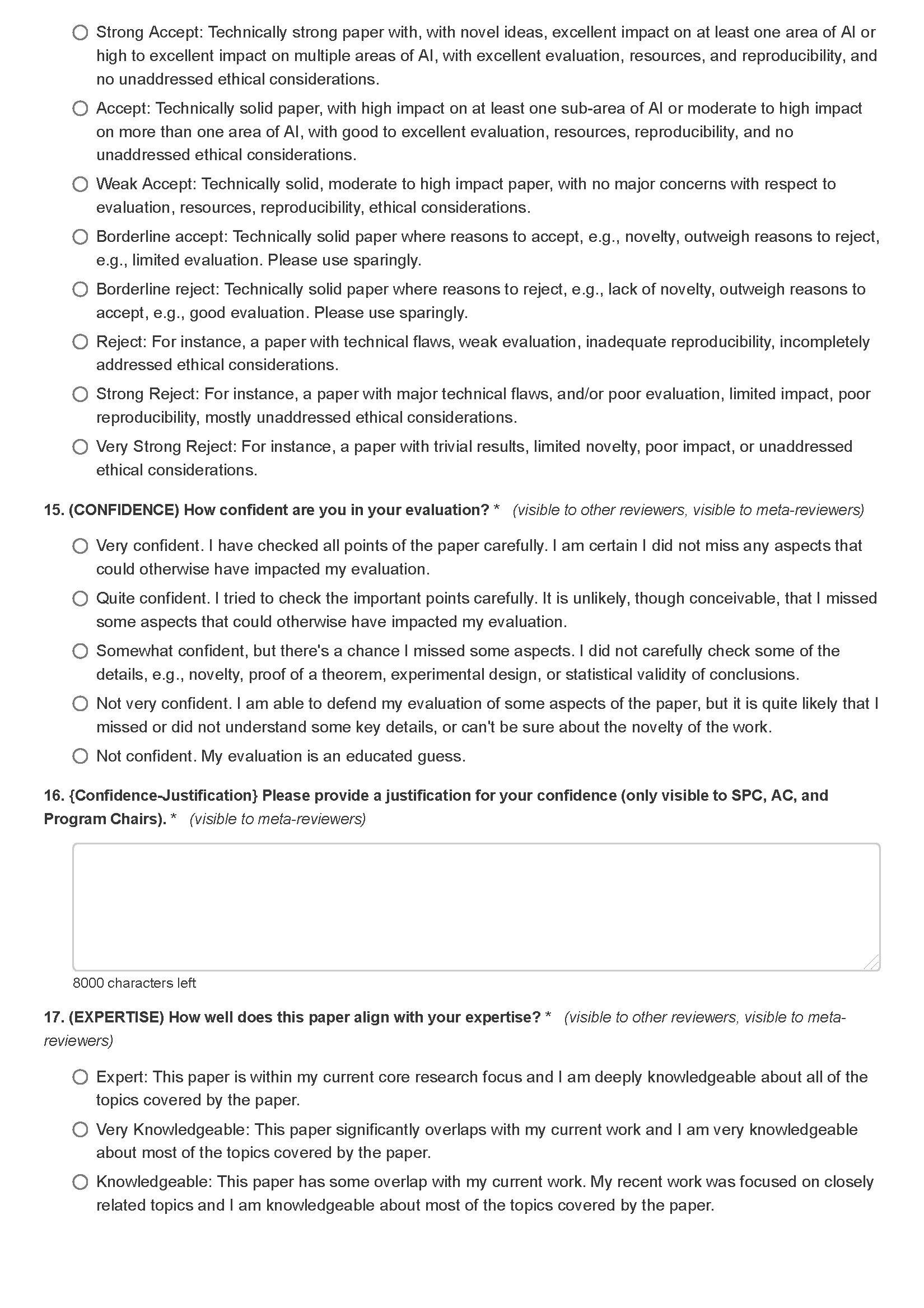

14. (OVERALL EVALUATION) Please provide your overall evaluation of the paper, carefully weighing the reasons to accept and the reasons to reject the paper. Ideally, we should have:

No more than 25% of the submitted papers in (Accept + Strong Accept + Very Strong Accept + Award Quality) categories;

No more than 20% of the submitted papers in (Strong Accept + Very Strong Accept + Award Quality) categories;

No more than 10% of the submitted papers in (Very Strong Accept + Award Quality) categories;

No more than 1% of the submitted papers in the Award Quality category;

(visible to authors during feedback, visible to authors after notification, visible to other reviewers, visible to meta- reviewers)

- Award quality: Technically flawless paper with groundbreaking impact on one or more areas of AI, with exceptionally strong evaluation, reproducibility, and resources, and no unaddressed ethical considerations.

- Very Strong Accept: Technically flawless paper with groundbreaking impact on at least one area of AI and excellent impact on multiple areas of AI, with flawless evaluation, resources, and reproducibility, and no unaddressed ethical considerations.

- Strong Accept: Technically strong paper with, with novel ideas, excellent impact on at least one area of AI or high to excellent impact on multiple areas of AI, with excellent evaluation, resources, and reproducibility, and no unaddressed ethical considerations.

- Accept: Technically solid paper, with high impact on at least one sub-area of AI or moderate to high impact on more than one area of AI, with good to excellent evaluation, resources, reproducibility, and no unaddressed ethical considerations.

- Weak Accept: Technically solid, moderate to high impact paper, with no major concerns with respect to evaluation, resources, reproducibility, ethical considerations.

- Borderline accept: Technically solid paper where reasons to accept, e.g., novelty, outweigh reasons to reject, e.g., limited evaluation. Please use sparingly.

- Borderline reject: Technically solid paper where reasons to reject, e.g., lack of novelty, outweigh reasons to accept, e.g., good evaluation. Please use sparingly.

- Reject: For instance, a paper with technical flaws, weak evaluation, inadequate reproducibility, incompletely addressed ethical considerations.

- Strong Reject: For instance, a paper with major technical flaws, and/or poor evaluation, limited impact, poor reproducibility, mostly unaddressed ethical considerations.

- Very Strong Reject: For instance, a paper with trivial results, limited novelty, poor impact, or unaddressed ethical considerations.

15. (CONFIDENCE) How confident are you in your evaluation? * (visible to other reviewers, visible to meta-reviewers)

- Very confident. I have checked all points of the paper carefully. I am certain I did not miss any aspects that could otherwise have impacted my evaluation.

- Quite confident. I tried to check the important points carefully. It is unlikely, though conceivable, that I missed some aspects that could otherwise have impacted my evaluation.

- Somewhat confident, but there's a chance I missed some aspects. I did not carefully check some of the details, e.g., novelty, proof of a theorem, experimental design, or statistical validity of conclusions.

- Not very confident. I am able to defend my evaluation of some aspects of the paper, but it is quite likely that I missed or did not understand some key details, or can't be sure about the novelty of the work.

- Not confident. My evaluation is an educated guess.

16. {Confidence-Justification} Please provide a justification for your confidence (only visible to SPC, AC, and Program Chairs). * (visible to meta-reviewers)

8000 characters left

17. (EXPERTISE) How well does this paper align with your expertise? * (visible to other reviewers, visible to meta- reviewers)

- Expert: This paper is within my current core research focus and I am deeply knowledgeable about all of the topics covered by the paper.

- Very Knowledgeable: This paper significantly overlaps with my current work and I am very knowledgeable about most of the topics covered by the paper.

- Knowledgeable: This paper has some overlap with my current work. My recent work was focused on closely related topics and I am knowledgeable about most of the topics covered by the paper.

- Mostly Knowledgeable: This paper has little overlap with my current work. My past work was focused on related topics and I am knowledgeable or somewhat knowledgeable about most of the topics covered by the paper.

- Somewhat Knowledgeable: This paper has little overlap with my current work. I am somewhat knowledgeable about some of the topics covered by the paper.

- Not Knowledgeable: I have little knowledge about most of the topics covered by the paper.

18. {Expertise-Justification} Please provide a justification for your expertise (only visible to SPC, AC, and Program Chairs). * (visible to meta-reviewers)

8000 characters left

19. Confidential comments to SPC, AC, and Program Chairs (visible to meta-reviewers)

20. I acknowledge that I have read the author's rebuttal and made whatever changes to my review where necessary.

(visible to authors after notification, visible to other reviewers, visible to meta-reviewers)

=======================================================