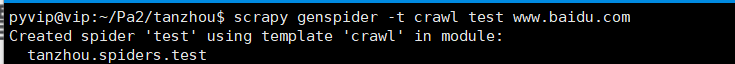

1. In the virtual machine, cd into the project directory, then run the following command to create the spider file:

scrapy genspider -t crawl test www.baidu.com

2. spider.py code:

    import scrapy
    from scrapy.linkextractors import LinkExtractor
    from scrapy.spiders import CrawlSpider, Rule

    from tanzhou.items import TanzhouItem, DetailItem


    class TencentSpider(CrawlSpider):
        name = 'tencent'
        allowed_domains = ['hr.tencent.com']
        start_urls = ['https://hr.tencent.com/position.php?lid=2268&tid=87&keywords=python']

        rules = (
            # Pagination links: parse every list page and keep following.
            Rule(LinkExtractor(allow=r'start=\d+'), callback='parse_item', follow=True),
            # Detail pages: parse only, do not follow links found on them.
            Rule(LinkExtractor(allow=r'position_detail\.php\?id=\d+'),
                 callback='parse_detail_item', follow=False),
        )

        def parse_item(self, response):
            # Parse the job rows; rows alternate between class "even" and "odd".
            rows = response.xpath(
                '//table[@class="tablelist"]/tr[@class="even"]'
                '|//table[@class="tablelist"]/tr[@class="odd"]')
            for row in rows:
                # Second approach: use the Item class from items.py to constrain the fields.
                item = TanzhouItem()
                item['jobName'] = row.xpath('./td[1]/a/text()').extract_first()
                item['jobType'] = row.xpath('./td[2]/text()').extract_first()
                item['Num'] = row.xpath('./td[3]/text()').extract_first()
                item['Place'] = row.xpath('./td[4]/text()').extract_first()
                item['Time'] = row.xpath('./td[5]/text()').extract_first()
                yield item

        def parse_detail_item(self, response):
            # Join the text of every bullet point on the detail page into one string.
            item = DetailItem()
            parts = response.xpath("//ul[@class='squareli']/li/text()").extract()
            item['detail_content'] = ' '.join(parts)
            yield item
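The two `allow` values in `rules` are regular expressions that `LinkExtractor` searches for in each extracted URL, so the literal `.` and `?` have to be escaped. The sketch below checks this with only the standard `re` module. The sample URLs and the `classify` helper are hypothetical, made up for illustration and not taken from the site.

```python
import re

# Same patterns as in the spider's rules.
LIST_PAGE = re.compile(r'start=\d+')
DETAIL_PAGE = re.compile(r'position_detail\.php\?id=\d+')

# Unescaped variant: "." matches any character and "p?" means an optional "p",
# so the literal "?" in the URL is never matched.
DETAIL_UNESCAPED = re.compile(r'position_detail.php?id=\d+')


def classify(url):
    """Hypothetical helper: report which pattern a URL matches."""
    if DETAIL_PAGE.search(url):
        return 'detail'
    if LIST_PAGE.search(url):
        return 'list'
    return None


# Hypothetical sample URLs.
list_url = 'https://hr.tencent.com/position.php?keywords=python&start=10'
detail_url = 'https://hr.tencent.com/position_detail.php?id=12345'

print(classify(list_url))                   # list
print(classify(detail_url))                 # detail
print(DETAIL_UNESCAPED.search(detail_url))  # None
```

With the unescaped pattern the detail rule would never fire, so `parse_detail_item` would never be called.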

3. items.py code:

    import scrapy


    class TanzhouItem(scrapy.Item):
        # define the fields for your item here like:
        # name = scrapy.Field()
        jobName = scrapy.Field()
        jobType = scrapy.Field()
        Num = scrapy.Field()
        Place = scrapy.Field()
        Time = scrapy.Field()


    class DetailItem(scrapy.Item):
        detail_content = scrapy.Field()

4. pipelines.py code:

    import json

    from tanzhou.items import TanzhouItem, DetailItem


    class TanzhouPipeline(object):
        def process_item(self, item, spider):
            # Serialize to JSON. An Item must be converted with dict() first.
            # The JSON string is written directly, so the original item is
            # still what gets returned to any later pipeline.
            if isinstance(item, TanzhouItem):
                self.f.write(json.dumps(dict(item), ensure_ascii=False) + '\n')
            elif isinstance(item, DetailItem):
                self.f2.write(json.dumps(dict(item), ensure_ascii=False) + '\n')
            return item

        # Runs when the spider opens.
        def open_spider(self, spider):
            # Open the output files; UTF-8 because ensure_ascii=False keeps non-ASCII text.
            self.f = open('info2.json', 'w', encoding='utf-8')
            self.f2 = open('detail2.json', 'w', encoding='utf-8')

        # Runs when the spider closes.
        def close_spider(self, spider):
            # Close the output files.
            self.f.close()
            self.f2.close()
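The pipeline routes each item to a file according to its type and writes one JSON object per line. A minimal sketch of that routing, runnable without Scrapy: `ListStandIn`, `DetailStandIn`, and `RoutingPipeline` are hypothetical stand-ins for the post's classes, and in-memory buffers replace the two files.

```python
import io
import json


class ListStandIn(dict):
    """Hypothetical stand-in for TanzhouItem."""


class DetailStandIn(dict):
    """Hypothetical stand-in for DetailItem."""


class RoutingPipeline:
    """Writes each item type to its own stream, one JSON object per line."""

    def __init__(self, list_out, detail_out):
        self.outputs = {ListStandIn: list_out, DetailStandIn: detail_out}

    def process_item(self, item, spider=None):
        for item_type, out in self.outputs.items():
            if isinstance(item, item_type):
                # ensure_ascii=False keeps Chinese text readable in the output.
                out.write(json.dumps(dict(item), ensure_ascii=False) + '\n')
        return item  # pass the original item on, not the JSON string


list_buf, detail_buf = io.StringIO(), io.StringIO()
pipeline = RoutingPipeline(list_buf, detail_buf)

returned = pipeline.process_item(ListStandIn(jobName='Python 工程师', Place='深圳'))
pipeline.process_item(DetailStandIn(detail_content='sample text'))

print(list_buf.getvalue(), end='')
print(detail_buf.getvalue(), end='')
```

For Scrapy to call the real pipeline, it also has to be registered under `ITEM_PIPELINES` in settings.py; the original post does not show that file.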