LeakyReLU

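import tensorflow as tf  # TensorFlow 1.x API (tf.layers, tf.variable_scope, tf.contrib)

def leaky_relu(x, alpha=0.01):
    """Compute the leaky ReLU activation function.

    Inputs:
    - x: TensorFlow Tensor with arbitrary shape
    - alpha: leak parameter for leaky ReLU

    Returns:
    TensorFlow Tensor with the same shape as x
    """
    # TODO: implement leaky ReLU
    # Negative entries are scaled by alpha; non-negative entries pass through.
    condition = tf.less(x, 0)
    res = tf.where(condition, alpha * x, x)
    return res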
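
As a quick sanity check of the rule implemented above, here is a minimal plain-Python sketch that applies the same piecewise definition to single numbers. The helper name `leaky_relu_scalar` is hypothetical and does not appear in the original notebook; it only illustrates the expected values.

```python
def leaky_relu_scalar(x, alpha=0.01):
    """Plain-Python leaky ReLU for one number (illustrative helper, not from the notebook)."""
    # Negative inputs are scaled by alpha; non-negative inputs pass through unchanged.
    return alpha * x if x < 0 else x


samples = [-2.0, -0.5, 0.0, 3.0]
outputs = [leaky_relu_scalar(v) for v in samples]
print(outputs)
```

Negative inputs shrink by a factor of `alpha`, while zero and positive inputs are returned as they are.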

Random Noise

def sample_noise(batch_size, dim):
    """Generate random uniform noise from -1 to 1.

    Inputs:
    - batch_size: integer giving the batch size of noise to generate
    - dim: integer giving the dimension of the noise to generate

    Returns:
    TensorFlow Tensor containing uniform noise in [-1, 1] with shape [batch_size, dim]
    """
    # TODO: sample and return noise
    return tf.random_uniform([batch_size, dim], minval=-1, maxval=1)
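
To make the shape and range of the noise concrete, the following sketch draws the same kind of sample with Python's standard `random` module instead of TensorFlow. The function name `sample_noise_py` and the `seed` argument are assumptions added for illustration only.

```python
import random


def sample_noise_py(batch_size, dim, seed=None):
    """Return a batch_size x dim nested list of uniform noise in [-1, 1] (illustrative)."""
    rng = random.Random(seed)  # a seeded generator keeps the example reproducible
    return [[rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(batch_size)]


noise = sample_noise_py(batch_size=3, dim=4, seed=0)
print(len(noise), len(noise[0]))
```

Note that `tf.random_uniform` includes `minval` and excludes `maxval`, so the TensorFlow version samples from the half-open interval [-1, 1).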

Discriminator

Architecture:

- Fully connected layer with input size 784 and output size 256

- LeakyReLU with alpha 0.01

- Fully connected layer with output size 256

- LeakyReLU with alpha 0.01

- Fully connected layer with output size 1
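
The architecture above fixes the number of trainable parameters, which is a handy check on an implementation. The sketch below counts weights and biases for each fully connected layer; the helper `dense_params` is a hypothetical name, and the count assumes every layer has a bias term.

```python
def dense_params(n_in, n_out, use_bias=True):
    """Weights plus optional biases of one fully connected layer."""
    return n_in * n_out + (n_out if use_bias else 0)


layer_sizes = [784, 256, 256, 1]  # input size followed by each layer's output size
per_layer = [dense_params(a, b) for a, b in zip(layer_sizes[:-1], layer_sizes[1:])]
total = sum(per_layer)
print(per_layer, total)  # [200960, 65792, 257] 267009
```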

def discriminator(x):
    """Compute discriminator score for a batch of input images.

    Inputs:
    - x: TensorFlow Tensor of flattened input images, shape [batch_size, 784]

    Returns:
    TensorFlow Tensor with shape [batch_size, 1], containing the score
    for an image being real for each input image.
    """
    with tf.variable_scope("discriminator"):
        # TODO: implement architecture
        fc1 = tf.layers.dense(x, 256, use_bias=True, name='fc1')
        leaky_relu1 = leaky_relu(fc1, alpha=0.01)
        fc2 = tf.layers.dense(leaky_relu1, 256, use_bias=True, name='fc2')
        leaky_relu2 = leaky_relu(fc2, alpha=0.01)
        logits = tf.layers.dense(leaky_relu2, 1, name='fc3')
        return logits

Generator

Architecture:

- Fully connected layer with input size tf.shape(z)[1] (the number of noise dimensions) and output size 1024

- ReLU

- Fully connected layer with output size 1024

- ReLU

- Fully connected layer with output size 784

- TanH (to restrict every element of the output to be in the range [-1,1])
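
The final TanH is what keeps generated pixels in the stated range. As a small illustration (plain Python, with made-up pre-activation values), even very large inputs are squashed into [-1, 1]:

```python
import math

# Hypothetical pre-activation values, including extreme ones.
pre_activations = [-50.0, -1.0, 0.0, 0.5, 50.0]
squashed = [math.tanh(v) for v in pre_activations]
print(squashed)
```

Every output lies between -1 and 1, and 0 maps to 0, so the generator output can be compared directly with real images that have been scaled to the same range.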

def generator(z):
    """Generate images from a random noise vector.

    Inputs:
    - z: TensorFlow Tensor of random noise with shape [batch_size, noise_dim]

    Returns:
    TensorFlow Tensor of generated images, with shape [batch_size, 784].
    """
    with tf.variable_scope("generator"):
        # TODO: implement architecture
        fc1 = tf.layers.dense(z, 1024, use_bias=True, activation=tf.nn.relu)
        fc2 = tf.layers.dense(fc1, 1024, use_bias=True, activation=tf.nn.relu)
        img = tf.layers.dense(fc2, 784, use_bias=True, activation=tf.nn.tanh)
        return img

GAN loss

def gan_loss(logits_real, logits_fake):
    """Compute the GAN loss.

    Inputs:
    - logits_real: Tensor, shape [batch_size, 1], output of discriminator
        Unnormalized score that the image is real for each real image
    - logits_fake: Tensor, shape [batch_size, 1], output of discriminator
        Unnormalized score that the image is real for each fake image

    Returns:
    - D_loss: discriminator loss scalar
    - G_loss: generator loss scalar

    HINT: for the discriminator loss, you'll want to do the averaging separately for
    its two components, and then add them together (instead of averaging once at the very end).
    """
    # TODO: compute D_loss and G_loss
    # Discriminator: real images are labeled 1, fake images are labeled 0.
    loss1 = tf.nn.sigmoid_cross_entropy_with_logits(labels=tf.ones_like(logits_real), logits=logits_real, name='discriminator_real_loss')
    loss2 = tf.nn.sigmoid_cross_entropy_with_logits(labels=tf.zeros_like(logits_fake), logits=logits_fake, name='discriminator_fake_loss')
    # Generator: fake images should be scored as real (label 1).
    loss3 = tf.nn.sigmoid_cross_entropy_with_logits(labels=tf.ones_like(logits_fake), logits=logits_fake, name='generator_loss')
    # Average each discriminator term separately, as the hint suggests, then add them.
    D_loss = tf.reduce_mean(loss1) + tf.reduce_mean(loss2)
    G_loss = tf.reduce_mean(loss3)
    return D_loss, G_loss
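
To see what these losses evaluate to, the sketch below reimplements them in plain Python using the numerically stable form of sigmoid cross-entropy, max(x, 0) - x * z + log(1 + exp(-|x|)), for logit x and label z. The names `sigmoid_xent`, `mean` and `gan_loss_py` are hypothetical helpers for illustration, not part of the notebook.

```python
import math


def sigmoid_xent(logit, label):
    """Stable sigmoid cross-entropy: max(x, 0) - x * z + log(1 + exp(-|x|))."""
    return max(logit, 0.0) - logit * label + math.log1p(math.exp(-abs(logit)))


def mean(values):
    return sum(values) / len(values)


def gan_loss_py(logits_real, logits_fake):
    # Discriminator: real -> label 1, fake -> label 0, each term averaged separately.
    d_real = mean([sigmoid_xent(x, 1.0) for x in logits_real])
    d_fake = mean([sigmoid_xent(x, 0.0) for x in logits_fake])
    # Generator: wants fake samples to be classified as real (label 1).
    g = mean([sigmoid_xent(x, 1.0) for x in logits_fake])
    return d_real + d_fake, g


# An undecided discriminator (all logits 0) gives D = 2 * ln 2 and G = ln 2.
d_loss, g_loss = gan_loss_py([0.0, 0.0], [0.0, 0.0])
print(round(d_loss, 4), round(g_loss, 4))  # 1.3863 0.6931
```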

Optimizing

# TODO: create an AdamOptimizer for D_solver and G_solver

def get_solvers(learning_rate=1e-3, beta1=0.5):
    """Create solvers for GAN training.

    Inputs:
    - learning_rate: learning rate to use for both solvers
    - beta1: beta1 parameter for both solvers (first moment decay)

    Returns:
    - D_solver: instance of tf.train.AdamOptimizer with correct learning_rate and beta1
    - G_solver: instance of tf.train.AdamOptimizer with correct learning_rate and beta1
    """
    D_solver = tf.train.AdamOptimizer(learning_rate=learning_rate, beta1=beta1)
    G_solver = tf.train.AdamOptimizer(learning_rate=learning_rate, beta1=beta1)
    return D_solver, G_solver
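
For intuition about what `beta1` controls, here is a minimal sketch of one textbook Adam update on a single scalar parameter. It follows the standard published update rule rather than TensorFlow's exact internals, and the function `adam_step` is a hypothetical helper. The values `beta2=0.999` and `eps=1e-8` are the `tf.train.AdamOptimizer` defaults, which `get_solvers` leaves unchanged.

```python
import math


def adam_step(theta, grad, m, v, t, lr=1e-3, beta1=0.5, beta2=0.999, eps=1e-8):
    """One textbook Adam update for a scalar parameter (illustrative only)."""
    m = beta1 * m + (1 - beta1) * grad          # first-moment (mean) estimate
    v = beta2 * v + (1 - beta2) * grad * grad   # second-moment estimate
    m_hat = m / (1 - beta1 ** t)                # bias correction
    v_hat = v / (1 - beta2 ** t)
    theta = theta - lr * m_hat / (math.sqrt(v_hat) + eps)
    return theta, m, v


theta, m, v = adam_step(theta=1.0, grad=2.0, m=0.0, v=0.0, t=1)
print(theta, m, v)
```

A smaller `beta1` (0.5 here instead of the default 0.9) makes the first-moment estimate forget old gradients faster, so each solver reacts more quickly as the other network changes.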

Least Squares GAN

def lsgan_loss(scores_real, scores_fake):
    """Compute the Least Squares GAN loss.

    Inputs:
    - scores_real: Tensor, shape [batch_size, 1], output of discriminator
        The score for each real image
    - scores_fake: Tensor, shape [batch_size, 1], output of discriminator
        The score for each fake image

    Returns:
    - D_loss: discriminator loss scalar
    - G_loss: generator loss scalar
    """
    # TODO: compute D_loss and G_loss
    D_loss = 0.5 * tf.reduce_mean(tf.square(scores_real - 1)) + 0.5 * tf.reduce_mean(tf.square(scores_fake))
    G_loss = 0.5 * tf.reduce_mean(tf.square(scores_fake - 1))
    return D_loss, G_loss
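
The same formulas can be checked by hand on a tiny batch. The sketch below is a plain-Python transcription of the two expressions above; `lsgan_loss_py` and `mean` are hypothetical names, and the scores are made-up values chosen so the arithmetic is exact.

```python
def mean(values):
    return sum(values) / len(values)


def lsgan_loss_py(scores_real, scores_fake):
    # Discriminator pushes real scores toward 1 and fake scores toward 0.
    d_loss = (0.5 * mean([(s - 1.0) ** 2 for s in scores_real])
              + 0.5 * mean([s ** 2 for s in scores_fake]))
    # Generator pushes fake scores toward 1.
    g_loss = 0.5 * mean([(s - 1.0) ** 2 for s in scores_fake])
    return d_loss, g_loss


d_loss, g_loss = lsgan_loss_py(scores_real=[1.0, 0.5], scores_fake=[0.0, 0.5])
print(d_loss, g_loss)  # 0.125 0.3125
```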

Deep Convolutional GANs

Discriminator

Architecture:

- Conv2D: 32 Filters, 5x5, Stride 1, padding 0

- Leaky ReLU(alpha=0.01)

- Max Pool 2x2, Stride 2

- Conv2D: 64 Filters, 5x5, Stride 1, padding 0

- Leaky ReLU(alpha=0.01)

- Max Pool 2x2, Stride 2

- Flatten

- Fully Connected with output size 4 x 4 x 64

- Leaky ReLU(alpha=0.01)

- Fully Connected with output size 1
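
The layer list above can be traced by hand to confirm the flattened size and the parameter count. The sketch below assumes 28x28 single-channel inputs (784 flattened values, as in this section's docstrings) and a bias on every layer; the helper names are hypothetical.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Spatial output size of a convolution."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, window=2, stride=2):
    """Spatial output size of max pooling."""
    return (size - window) // stride + 1


side = 28
side = conv_out(side, 5)   # 24 after the first 5x5 conv
side = pool_out(side)      # 12 after 2x2 max pool
side = conv_out(side, 5)   # 8 after the second 5x5 conv
side = pool_out(side)      # 4 after 2x2 max pool
flat = side * side * 64    # 1024 features after flattening

params = [
    5 * 5 * 1 * 32 + 32,               # conv1 weights + biases
    5 * 5 * 32 * 64 + 64,              # conv2 weights + biases
    flat * (4 * 4 * 64) + 4 * 4 * 64,  # first fully connected layer
    4 * 4 * 64 * 1 + 1,                # final fully connected layer
]
total = sum(params)
print(side, flat, params, total)  # 4 1024 [832, 51264, 1049600, 1025] 1102721
```

The total, 1102721, equals the argument passed to `test_discriminator` in this section, which suggests that the call checks the parameter count (an inference, not something the source states).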

def discriminator(x):
    """Compute discriminator score for a batch of input images.

    Inputs:
    - x: TensorFlow Tensor of flattened input images, shape [batch_size, 784]

    Returns:
    TensorFlow Tensor with shape [batch_size, 1], containing the score
    for an image being real for each input image.
    """
    x = tf.reshape(x, shape=(tf.shape(x)[0], 28, 28, 1))
    with tf.variable_scope("discriminator"):
        # TODO: implement architecture
        conv1 = tf.layers.conv2d(x, filters=32, kernel_size=(5, 5), strides=(1, 1), activation=leaky_relu)
        max_pool1 = tf.layers.max_pooling2d(conv1, pool_size=(2, 2), strides=(2, 2))
        conv2 = tf.layers.conv2d(max_pool1, filters=64, kernel_size=(5, 5), strides=(1, 1), activation=leaky_relu)
        max_pool2 = tf.layers.max_pooling2d(conv2, pool_size=(2, 2), strides=(2, 2))
        flat = tf.contrib.layers.flatten(max_pool2)
        fc1 = tf.layers.dense(flat, 4*4*64, activation=leaky_relu)
        logits = tf.layers.dense(fc1, 1)
        return logits

test_discriminator(1102721)

Generator

Architecture:

- Fully connected with output size 1024

- ReLU

- BatchNorm

- Fully connected with output size 7 x 7 x 128

- ReLU

- BatchNorm

- Resize into Image Tensor of size 7, 7, 128

- Conv2D^T (transpose): 64 filters of 4x4, stride 2

- ReLU

- BatchNorm

- Conv2D^T (transpose): 1 filter of 4x4, stride 2

- TanH
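
The shapes and sizes implied by this list can be worked out in a few lines. The sketch below rests on several assumptions that the source does not state: a hypothetical noise dimension of 4, transposed convolutions whose output side is input side times stride (TensorFlow's `'SAME'` padding), and a count of four values per batch-norm channel (scale, offset, moving mean, moving variance). All helper names are hypothetical.

```python
def dense_params(n_in, n_out):
    """Weights plus biases of one fully connected layer."""
    return n_in * n_out + n_out


def bn_values(channels):
    """Scale, offset, moving mean and moving variance per channel (assumed counting)."""
    return 4 * channels


def deconv_params(kernel, c_in, c_out):
    """Filter weights plus biases of one transposed convolution."""
    return kernel * kernel * c_in * c_out + c_out


noise_dim = 4                 # hypothetical value, not given in the text
side = 7
side = side * 2               # 14 after the first stride-2 transposed conv
side = side * 2               # 28 after the second stride-2 transposed conv
pixels = side * side * 1      # 784 values per generated image

counts = [
    dense_params(noise_dim, 1024), bn_values(1024),
    dense_params(1024, 7 * 7 * 128), bn_values(7 * 7 * 128),
    deconv_params(4, 128, 64), bn_values(64),
    deconv_params(4, 64, 1),
]
total = sum(counts)
print(pixels, total)  # 784 6595521
```

Under these assumptions the total is 6595521, the same number passed to `test_generator` in this section. That agreement is suggestive, but the counting convention of that test is an inference rather than something the source confirms.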

def generator(z):
    """Generate images from a random noise vector.

    Inputs:
    - z: TensorFlow Tensor of random noise with shape [batch_size, noise_dim]

    Returns:
    TensorFlow Tensor of generated images, with shape [batch_size, 784].
    """
    batch_size = tf.shape(z)[0]
    with tf.variable_scope("generator"):
        # TODO: implement architecture
        fc1 = tf.layers.dense(z, 1024, activation=tf.nn.relu, use_bias=True)
        bn1 = tf.layers.batch_normalization(fc1, training=True)
        fc2 = tf.layers.dense(bn1, 7*7*128, activation=tf.nn.relu, use_bias=True)
        bn2 = tf.layers.batch_normalization(fc2, training=True)
        resize = tf.reshape(bn2, shape=(-1, 7, 7, 128))
        filter_conv1 = tf.get_variable('deconv1', [4, 4, 64, 128])  # [height, width, output_channels, in_channels]
        conv_tr1 = tf.nn.conv2d_transpose(resize, filter=filter_conv1, output_shape=[batch_size, 14, 14, 64], strides=[1, 2, 2, 1])
        bias1 = tf.get_variable('deconv1_bias', [64])
        conv_tr1 += bias1
        relu_conv_tr1 = tf.nn.relu(conv_tr1)
        bn3 = tf.layers.batch_normalization(relu_conv_tr1, training=True)
        filter_conv2 = tf.get_variable('deconv2', [4, 4, 1, 64])
        conv_tr2 = tf.nn.conv2d_transpose(bn3, filter=filter_conv2, output_shape=[batch_size, 28, 28, 1], strides=[1, 2, 2, 1])
        bias2 = tf.get_variable('deconv2_bias', [1])
        conv_tr2 += bias2
        img = tf.nn.tanh(conv_tr2)
        img = tf.contrib.layers.flatten(img)
        return img

test_generator(6595521)

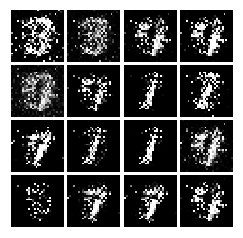

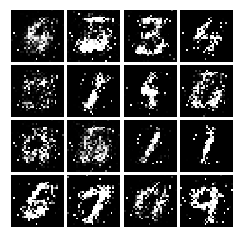

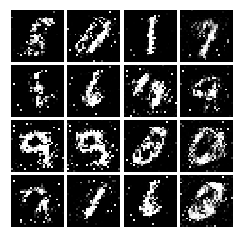

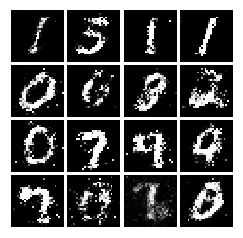

Epoch: 0, D: 0.2112, G:0.3559

Epoch: 1, D: 0.196, G:0.2681

Epoch: 2, D: 0.1689, G:0.2728

Epoch: 3, D: 0.1618, G:0.2215

Epoch: 4, D: 0.1968, G:0.2461

Epoch: 5, D: 0.1968, G:0.2429

Epoch: 6, D: 0.2316, G:0.1997

Epoch: 7, D: 0.2206, G:0.1858

Epoch: 8, D: 0.2131, G:0.1815

Epoch: 9, D: 0.2345, G:0.1732

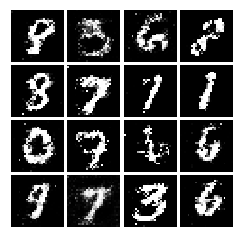

Final images