**********目的:

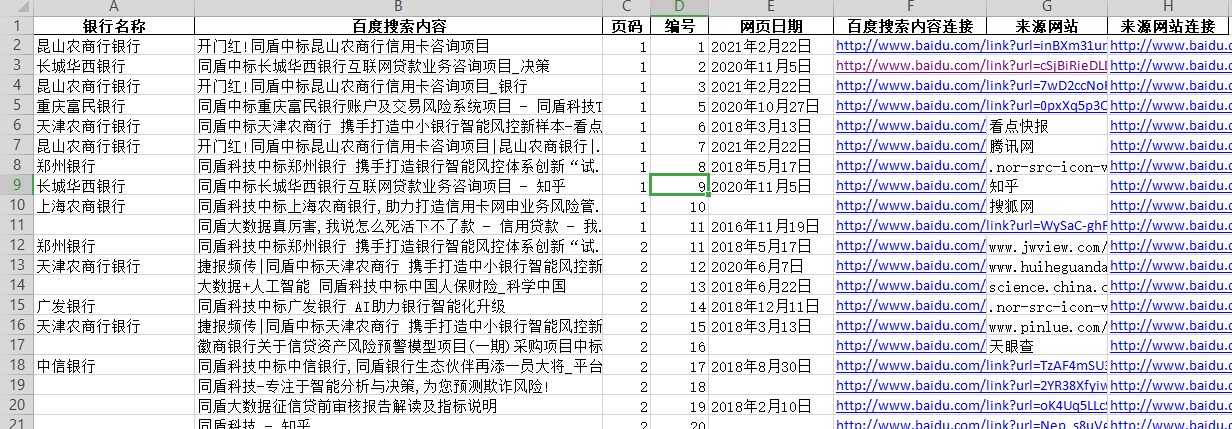

搜索‘同盾’‘中标’‘信贷’关键词信息

**********爬虫效果:

**********参看学习爬虫教程:

透彻讲解使用Selenium的网站: http://www.python3.vip/tut/auto/selenium/01/ Selenium学习网址: https://www.cnblogs.com/lweiser/p/11045023.html 学习beautifulSoup网址: https://www.jianshu.com/p/dc8df30ee0c8 https://beautifulsoup.readthedocs.io/zh_CN/v4.4.0/

**********代码:

一、抓取百度数据

# -*- coding: utf-8 -*- """ Created on Tue Mar 16 08:40:08 2021 @author: Administrator 抓取数据,并存为xlsx文件。 """ import pandas as pd import time from bs4 import BeautifulSoup import requests #容易被反扒,实验失败。 from selenium import webdriver #模拟认的操作 from selenium.webdriver.chrome.options import Options heads={} heads['User-Agent'] = 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/69.0.3497.100 Safari/537.36' chrome_options = Options() # 设置chrome浏览器无界面模式 chrome_options.add_argument('lang=zh_CN.UTF-8') # 设置中文 # chrome_options.add_argument('window-size=1920x3000') # 指定浏览器分辨率 chrome_options.add_argument('--disable-gpu') # 谷歌文档提到需要加上这个属性来规避bug # chrome_options.add_argument('--hide-scrollbars') # 隐藏滚动条, 应对一些特殊页面 chrome_options.add_argument('blink-settings=imagesEnabled=false') # 不加载图片, 提升速度 #chrome_options.add_argument('--headless') # 浏览器不提供可视化页面. linux下如果系统不支持可视化不加这条会启动失败 # 设置手机请求头 (手机页面反爬虫能力稍弱) chrome_options.add_argument('Mozilla/5.0 (Linux; Android 6.0; Nexus 5 Build/MRA58N) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/74.0.3729.157 Mobile Safari/537.36') wd = webdriver.Chrome(r'C:Program Files (x86)GoogleChromeApplicationchromedriver.exe') #共计76页 page_list=[] for i in range(1,77): page_num=i url = "https://www.baidu.com/s?wd=同盾%20信贷%20中标&pn={}".format((i-1)*10) page_list.append((page_num,url)) #开始抓取数据 info_list = [] for page_num,url in page_list: print(page_num,url) if 0: html_source = requests.get(url, headers=heads).content else: wd.get(url)# 调用WebDriver 对象的get方法 可以让浏览器打开指定网址 html_source = wd.page_source soup = BeautifulSoup(html_source,"lxml") text1 = soup.select("div[id='content_left']")[0] text2 = text1.select("div[class='result c-container new-pmd']") for i in range(len(text2)): print(i) try: re_bianhao = text2[i]['id'] re_riqi = text2[i].select("div > span")[0].get_text().strip() re_text1 = text2[i].select("h3 > a")[0].get_text() re_url1 = text2[i].select("h3 > a")[0]['href'] re_lanyuan_site = text2[i].select("div > a")[0].get_text() re_lanyuan_url = text2[i].select("div > a")[0]['href'] info_dict = {'页码':page_num ,'编号':re_bianhao ,'网页日期':re_riqi ,'百度搜索内容':re_text1 ,'百度搜索内容连接':re_url1 ,'来源网站':re_lanyuan_site ,'来源网站连接':re_lanyuan_url } info_list.append(info_dict) except Exception as e: print('wrong2!') print(str(e)) time.sleep(10) time.sleep(1.1) #抓取一个页面后休息1.1秒 #输出为excel pd.DataFrame(info_list).to_excel('raw_data2.xlsx',index=False) #print(text2[0].prettify()) # 百度反扒策略: 1.多次请求会被返回网络问题(requests方法中可能是cookie或其他被识别)。返回~~~百度安全验证~~~~~~~~~~~~~~~网络不给力,请稍后重试~返回首页~~~~问题反馈~~~~~

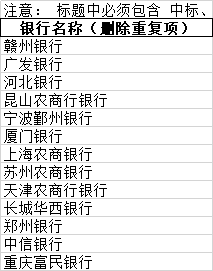

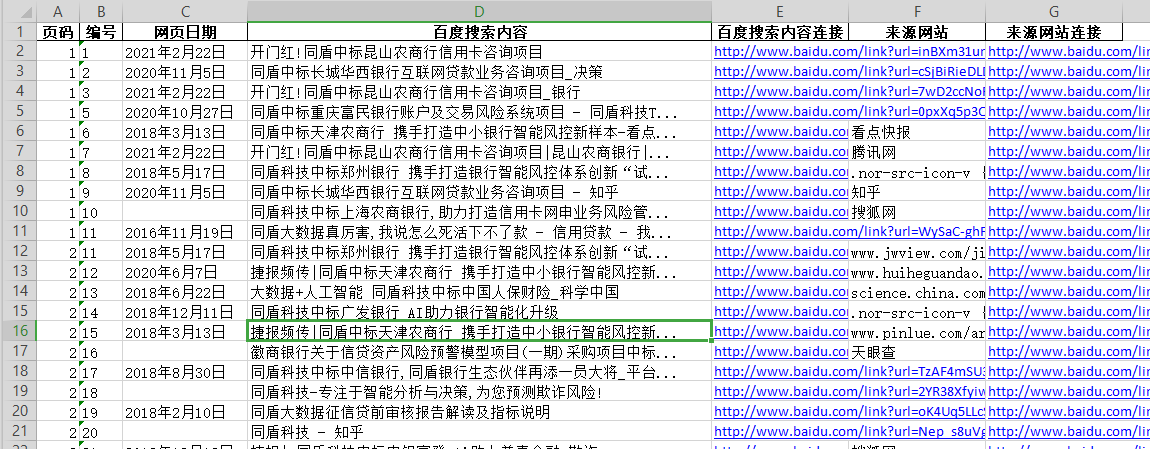

二、提取关键信息

# -*- coding: utf-8 -*- """ Created on Tue Mar 16 11:25:29 2021 @author: Administrator 在文本中提取银行名称 """ import pandas as pd import time df1 = pd.read_excel('raw_data.xlsx') def f1(str1): #str1 = '同盾科技中标广发银行 AI助力银行智能化升级_网易新闻' try: str2 = str1.split('中标')[1] if '农商行' in str2 or '银行' in str2: str2 = str2.split('农商行')[0] str2 = str2+'农商行' str2 = str2.split('银行')[0] str2 = str2+'银行' return str2 else: return '' except: return '' f1(str1='同盾中标长城华西银行互联网贷款业务咨询项目_决策') df1['银行名称'] = df1['百度搜索内容'].map(f1) df2 = df1[['银行名称','百度搜索内容','页码', '编号', '网页日期', '百度搜索内容连接', '来源网站', '来源网站连接']] df2.to_excel('raw_data2.xlsx',index=False)