windows环境下

springBoot项目中:

pom.xml新增

<dependency> <groupId>com.internetitem</groupId> <artifactId>logback-elasticsearch-appender</artifactId> <version>1.6</version> </dependency> <!--集成 logstash 日志--> <dependency> <groupId>net.logstash.logback</groupId> <artifactId>logstash-logback-encoder</artifactId> <version>5.3</version> </dependency>

logback-spring.xml

<?xml version="1.0" encoding="UTF-8"?> <configuration> <!-- 日志存放路径 --> <property name="log.path" value="/home/ruoyi/logs" /> <!-- 日志输出格式 --> <property name="log.pattern" value="%d{HH:mm:ss.SSS} [%thread] %-5level %logger{20} - [%method,%line] - %msg%n" /> <!-- 日志输出格式 %d{yyyy-MM-dd HH:mm:ss.SSS} [%thread] %-5level %logger{50} - %msg%n --> <!-- 控制台 appender--> <appender name="CONSOLE" class="ch.qos.logback.core.ConsoleAppender"> <encoder> <pattern>%d{yyyy-MM-dd HH:mm:ss.SSS} [%thread] %-5level %logger{50} - %msg%n</pattern> <charset>UTF-8</charset> </encoder> </appender> <!-- 文件 滚动日志 (all)--> <appender name="allLog" class="ch.qos.logback.core.rolling.RollingFileAppender"> <!-- 当前日志输出路径、文件名 --> <file>${log.path}/all.log</file> <!--日志输出格式--> <encoder> <pattern>%d{yyyy-MM-dd HH:mm:ss.SSS} [%thread] %-5level %logger{50} - %msg%n</pattern> <charset>UTF-8</charset> </encoder> <!--历史日志归档策略--> <rollingPolicy class="ch.qos.logback.core.rolling.SizeAndTimeBasedRollingPolicy"> <!-- 历史日志: 归档文件名 --> <fileNamePattern>${log.path}/%d{yyyy-MM, aux}/all.%d{yyyy-MM-dd}.%i.log.gz</fileNamePattern> <!--单个文件的最大大小--> <maxFileSize>64MB</maxFileSize> <!--日志文件保留天数--> <maxHistory>15</maxHistory> </rollingPolicy> </appender> <!-- 文件 滚动日志 (仅error)--> <appender name="errorLog" class="ch.qos.logback.core.rolling.RollingFileAppender"> <!-- 当前日志输出路径、文件名 --> <file>${log.path}/error.log</file> <!--日志输出格式--> <encoder> <pattern>%d{yyyy-MM-dd HH:mm:ss.SSS} [%thread] %-5level %logger{50} - %msg%n</pattern> <charset>UTF-8</charset> </encoder> <!--历史日志归档策略--> <rollingPolicy class="ch.qos.logback.core.rolling.SizeAndTimeBasedRollingPolicy"> <!-- 历史日志: 归档文件名 --> <fileNamePattern>${log.path}/%d{yyyy-MM, aux}/error.%d{yyyy-MM-dd}.%i.log.gz</fileNamePattern> <!--单个文件的最大大小--> <maxFileSize>64MB</maxFileSize> <!--日志文件保留天数--> <maxHistory>15</maxHistory> </rollingPolicy> <!-- 此日志文档只记录error级别的 level过滤器--> <filter class="ch.qos.logback.classic.filter.LevelFilter"> <level>error</level> <onMatch>ACCEPT</onMatch> <onMismatch>DENY</onMismatch> </filter> </appender> <!-- 文件 异步日志(async) --> <appender name="ASYNC" class="ch.qos.logback.classic.AsyncAppender" immediateFlush="false" neverBlock="true"> <!-- 不丢失日志.默认的,如果队列的80%已满,则会丢弃TRACT、DEBUG、INFO级别的日志 --> <discardingThreshold>0</discardingThreshold> <!-- 更改默认的队列的深度,该值会影响性能.默认值为256 --> <queueSize>1024</queueSize> <neverBlock>true</neverBlock> <!-- 添加附加的appender,最多只能添加一个 --> <appender-ref ref="allLog" /> </appender> <!--输出到logstash的appender--> <appender name="logstash" class="net.logstash.logback.appender.LogstashTcpSocketAppender"> <!--可以访问的logstash日志收集端口,这边要跟logstash.conf对应--> <destination>xxxxx:4560</destination> <encoder charset="UTF-8" class="net.logstash.logback.encoder.LogstashEncoder"> <!--自定义字段,区分应用名称--> <customFields>{"appname":"logdemo"}</customFields> </encoder> </appender> <!-- root 级别的配置 --> <root level="INFO"> <appender-ref ref="CONSOLE" /> <!--<appender-ref ref="allLog" />--> <appender-ref ref="ASYNC"/> <appender-ref ref="errorLog" /> <appender-ref ref="logstash" /> </root> <!--可输出mapper层sql语句等--> <logger name="com.tingcream" level="debug"> </logger> <!--输出jdbc 事务相关信息--> <logger name="org.springframework.jdbc" level="debug"> </logger> </configuration>

logstash的config文件中,新增logstash.conf内容:其中的xxxx是项目名,生成的索引也是xxxx-日期,比如说dev-spring-demo-2022.03.01

input { tcp{ mode => "server" host => "0.0.0.0" port => 4560 codec => json_lines } } output { elasticsearch { hosts => ["http://localhost:9200"] index => "xxxxx-%{+YYYY.MM.dd}" #user => "elastic" #password => "changeme" } }

启动logstash的命令

logstash -f C:\logstash-7.12.0\config\logstash.conf

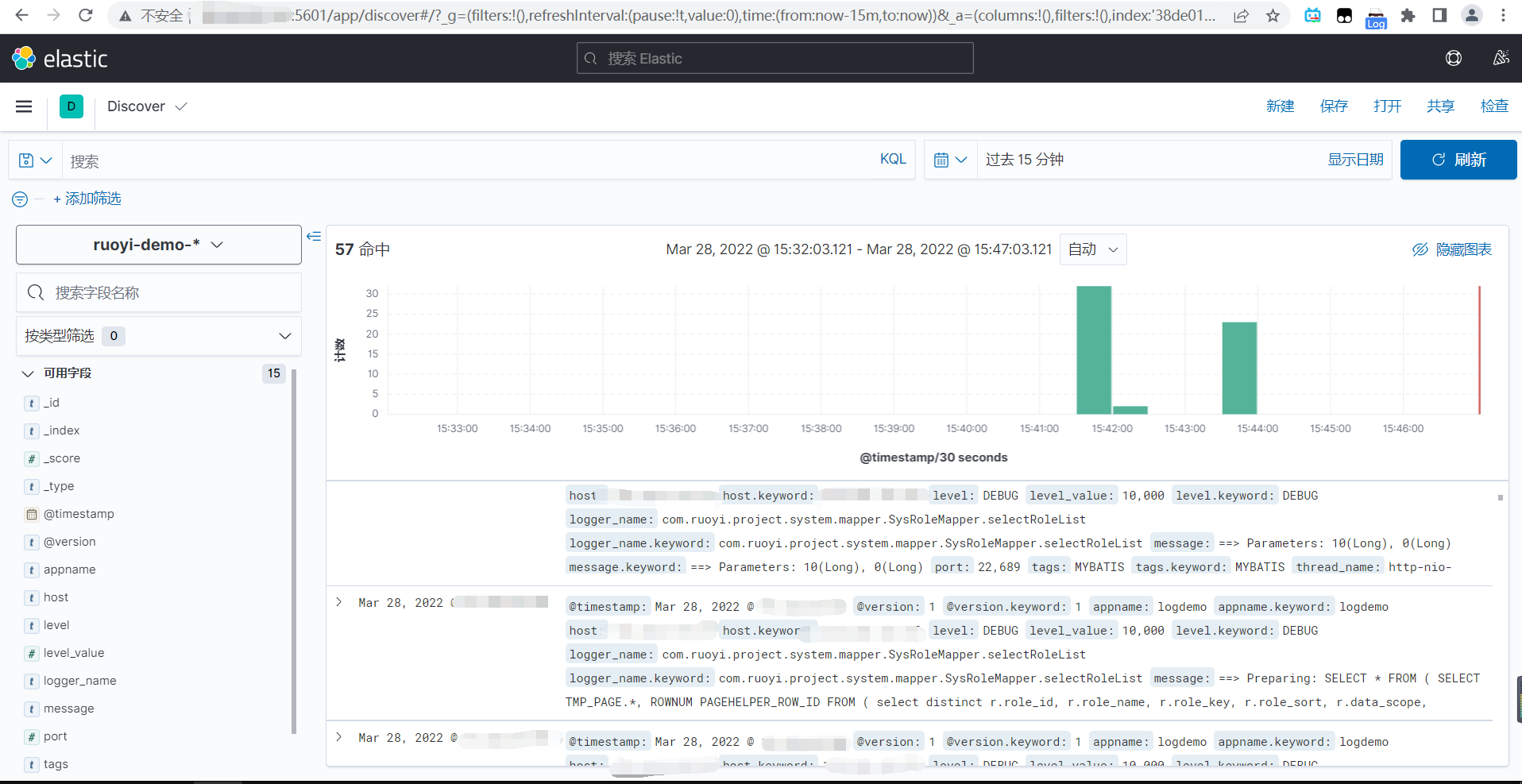

运行项目之后,kibana可视化显示记录。

更新:

logstash 的cofig加入两种来源(mysql和springboot的日志)

注意 filter 谨慎写,会导致配置中字段失效,比如说加了type,那 tcp里面的type字段就会失效导致没法同步数据

# Sample Logstash configuration for creating a simple # Beats -> Logstash -> Elasticsearch pipeline. input { stdin { } tcp{ mode => "server" host => "0.0.0.0" port => 4560 codec => json_lines type => "demo" } jdbc{ # 连接的数据库地址和数据库,指定编码格式,禁用SSL协议,设定自动重连 jdbc_connection_string => "jdbc:mysql://localhost:3306/ruoyi?useUnicode=true&characterEncoding=utf8&zeroDateTimeBehavior=convertToNull&useSSL=true&serverTimezone=GMT%2B8" # 用户名密码 jdbc_user => "jdbc_user" jdbc_password => "jdbc_password" # jar包的位置 jdbc_driver_library => "C:\apache-maven-3.0.5\repository\mysql\mysql-connector-java\5.1.6\mysql-connector-java-5.1.6.jar" # mysql的Driver jdbc_driver_class => "com.mysql.jdbc.Driver" jdbc_default_timezone => "Asia/Shanghai" jdbc_paging_enabled => "true" jdbc_page_size => "50000" #statement_filepath => "config-mysql/test.sql" #注意这个sql不能出现type,这是es的保留字段 statement => "select user_id,user_name,email from sys_user" #查询sys_user表中的这些字段 schedule => "* * * * *" tracking_column => "unix_ts_in_secs" use_column_value => true tracking_column_type => "numeric" type => "local-mysql" } }

filter { } output { stdout { codec => json_lines } if[type] == "demo" { elasticsearch { hosts => "http://localhost:9200" index => "demo-%{+YYYY.MM.dd}" } } if[type] == "local-mysql" { elasticsearch { doc_as_upsert => true action => "update" hosts => "http://localhost:9200" # index名 index => "mysql-sysuser" # 需要关联的数据库中有有一个id字段,对应索引的id号 document_id => "%{user_id}" } } }

filter {

mutate {

copy => { "user_id" => "[@metadata][_id]"}

remove_field => ["@version","host","@timestamp","type"] # 删除字段

}

}