1. Package

# -*- coding: utf-8 -*-

import os, sys, glob, shutil, json

os.environ["CUDA_VISIBLE_DEVICES"] = '0'

import cv2

import numpy as np

from PIL import Image

from tqdm import tqdm, tqdm_notebook

%pylab inline

import torch

torch.manual_seed(0)

torch.backends.cudnn.deterministic = False

torch.backends.cudnn.benchmark = True

import torchvision.models as models

import torchvision.transforms as transforms

import torchvision.datasets as datasets

import torch.nn as nn

import torch.nn.functional as F

import torch.optim as optim

from torch.autograd import Variable

from torch.utils.data.dataset import Dataset

2. Data

定义数据集

def __init__(self, loader=default_loader):

这个里面一般要初始化一个loader(代码见上面),一个images_path的列表,一个target的列表

def __getitem__(self, index):

这里就是在给你一个index的时候,你返回一个图片的tensor和target的tensor,使用了loader方法,经过 归一化,剪裁,类型转化,从图像变成tensor

def __len__(self):

return所有数据的个数

这三个综合起来看呢,其实就是输入所有数据的长度,它每次给你返回一个shuffle过的index,以这个方式遍历数据集,通过 getitem(self, index)返回一组你要的(input,target)

class SVHNDataset(Dataset):

def __init__(self, img_path, img_label, transform=None):

self.img_path = img_path

self.img_label = img_label

if transform is not None:

self.transform = transform

else:

self.transform = None

def __getitem__(self, index):

img = Image.open(self.img_path[index]).convert('RGB')

if self.transform is not None:

img = self.transform(img)

lbl = np.array(self.img_label[index], dtype=np.int)

lbl = list(lbl) + (5 - len(lbl)) * [10]

return img, torch.from_numpy(np.array(lbl[:5]))

def __len__(self):

return len(self.img_path)

# 训练集

# glob.glob(): 匹配符合条件的所有文件,并将其以list的形式返回

# train_path: ['./data/mchar_train/000000.png',...]

train_path = glob.glob(`'./data/mchar_train/*.png'`)

train_path.sort()

train_json = json.load(open(`'./data/mchar_train.json'`))

train_label = [train_json[x]['label'] for x in train_json]

print(len(train_path), len(train_label))

# train data:

# 30000 30000

# 验证集

val_path = glob.glob('../../../dataset/tianchi_SVHN/val/*.png')

val_path.sort()

val_json = json.load(open('../../../dataset/tianchi_SVHN/val.json'))

val_label = [val_json[x]['label'] for x in val_json]

print(len(val_path), len(val_label))

# val data:

# 10000 10000

# 测试集

test_path = glob.glob('./data/mchar_test_a/*.png')

test_path.sort()

test_label = [[1]] * len(test_path)

print(len(test_path), len(test_label))

定义读取数据dataloader

# Dataset:对数据集的封装,提供索引方式的对数据样本进行读取

# DataLoder:对Dataset进行封装,提供批量读取的迭代读取

# 在加入DataLoder后,数据按照批次获取,每批次调用Dataset读取单个样本进行拼接。

# train

# SVHNDataset参数:img_path, img_label, transform

train_loader = torch.utils.data.DataLoader(

SVHNDataset(train_path, train_label,

transforms.Compose([

# transforms.Compose:

# 传入参数为一个列表,列表中的元素是对数据进行变换的操作

transforms.Resize((64, 128)),

transforms.RandomCrop((60, 120)),

transforms.ToTensor(),

transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])

])),

batch_size=40,

shuffle=True,

num_workers=10,

)

# val

val_loader = torch.utils.data.DataLoader(

SVHNDataset(val_path, val_label,

transforms.Compose([

transforms.Resize((60, 120)),

transforms.ToTensor(),

transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])

])),

batch_size=40,

shuffle=False,

num_workers=10,

)

# test

test_loader = torch.utils.data.DataLoader(

SVHNDataset(test_path, test_label,

transforms.Compose([

transforms.Resize((70, 140)),

transforms.ToTensor(),

transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])

])),

batch_size=40,

shuffle=False,

num_workers=10,

)

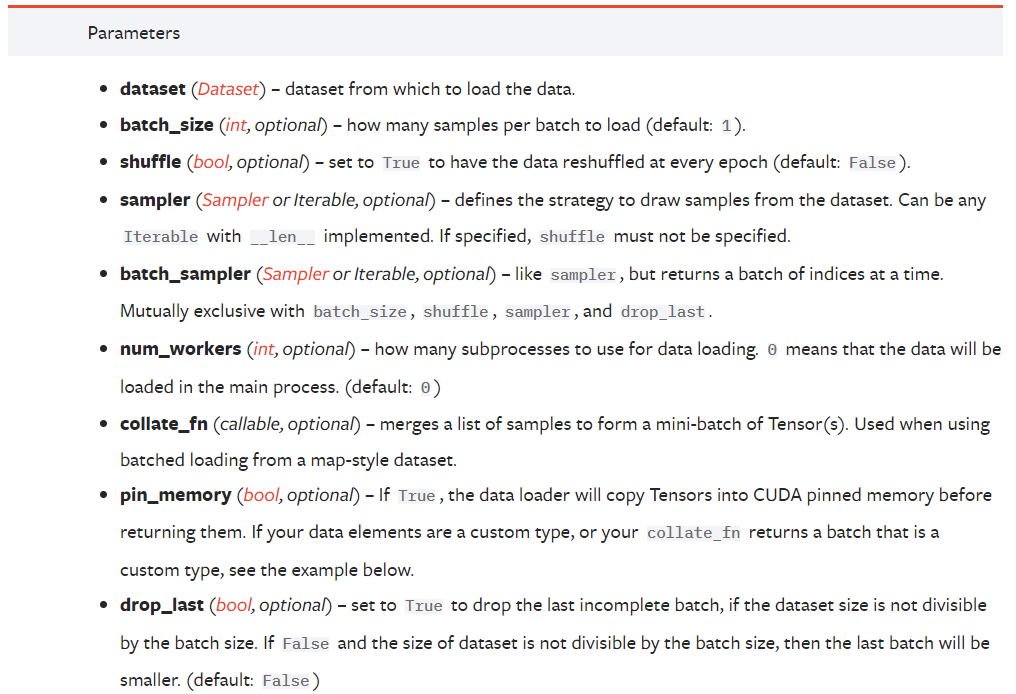

DataLoader

Model

class SVHN_Model1(nn.Module):

def __init__(self):

super(SVHN_Model1, self).__init__()

# 使用resnet18网络

model_conv = models.resnet18(pretrained=True)

# 将resnet的平均池化设置为自适应平均池化*

# nn.AdaptiveAvgPool2d(output_size):

# 将输出尺寸指定为output_size,通道数前后不发生变化。

model_conv.avgpool = nn.AdaptiveAvgPool2d(1)

model_conv = nn.Sequential(*list(model_conv.children())[:-1])

self.cnn = model_conv

self.fc1 = nn.Linear(512, 11)

self.fc2 = nn.Linear(512, 11)

self.fc3 = nn.Linear(512, 11)

self.fc4 = nn.Linear(512, 11)

self.fc5 = nn.Linear(512, 11)

def forward(self, img):

feat = self.cnn(img)

# print(feat.shape)

feat = feat.view(feat.shape[0], -1)

c1 = self.fc1(feat)

c2 = self.fc2(feat)

c3 = self.fc3(feat)

c4 = self.fc4(feat)

c5 = self.fc5(feat)

return c1, c2, c3, c4, c5

*nn.AdaptiveAvgPool2d()

- 参数形式为

output_size或H*W

m = nn.AdaptiveAvgPool2d((3,7))

input = torch.randn(1, 64, 8, 9)

print(m(input))

#torch.Size([1, 64, 3, 7])

m = nn.AdaptiveAvgPool2d(7)

input = torch.randn(1, 64, 10, 9)

print(m(input))

#torch.Size([1, 64, 7, 7])

m = nn.AdaptiveAvgPool2d((None, 3))

input = torch.randn(1, 64, 10, 9)

print(m(input))

# torch.Size([1, 64, 10, 3])

Train()

def train(train_loader, model, criterion, optimizer):

# 切换模型为训练模式

model.train()

train_loss = []

for i, (input, target) in enumerate(train_loader):

if use_cuda:

input = input.cuda()

target = target.cuda()

c0, c1, c2, c3, c4 = model(input)

loss = criterion(c0, target[:, 0]) + \

criterion(c1, target[:, 1]) + \

criterion(c2, target[:, 2]) + \

criterion(c3, target[:, 3]) + \

criterion(c4, target[:, 4])

optimizer.zero_grad()

loss.backward()

optimizer.step()

train_loss.append(loss.item())

return np.mean(train_loss)

……

Predict()

def predict(test_loader, model, tta=10):

model.eval()

test_pred_tta = None

# TTA 次数

for _ in range(tta):

test_pred = []

with torch.no_grad():

for i, (input, target) in enumerate(test_loader):

if use_cuda:

input = input.cuda()

c0, c1, c2, c3, c4 = model(input)

if use_cuda:

output = np.concatenate([

c0.data.cpu().numpy(),

c1.data.cpu().numpy(),

c2.data.cpu().numpy(),

c3.data.cpu().numpy(),

c4.data.cpu().numpy()], axis=1)

else:

output = np.concatenate([

c0.data.numpy(),

c1.data.numpy(),

c2.data.numpy(),

c3.data.numpy(),

c4.data.numpy()], axis=1)

test_pred.append(output)

test_pred = np.vstack(test_pred)

if test_pred_tta is None:

test_pred_tta = test_pred

else:

test_pred_tta += test_pred

return test_pred_tta

Run

model = SVHN_Model1()

criterion = nn.CrossEntropyLoss()

optimizer = torch.optim.Adam(model.parameters(), 0.001)

use_cuda = True

if use_cuda:

model = model.cuda()

best_loss = 1000.0

for epoch in range(10):

train_loss = train(train_loader, model, criterion, optimizer)

val_loss = validate(val_loader, model, criterion)

val_label = [''.join(map(str, x)) for x in val_loader.dataset.img_label]

val_predict_label = predict(val_loader, model, 1)

val_predict_label = np.vstack([

val_predict_label[:, :11].argmax(1),

val_predict_label[:, 11:22].argmax(1),

val_predict_label[:, 22:33].argmax(1),

val_predict_label[:, 33:44].argmax(1),

val_predict_label[:, 44:55].argmax(1),

]).T

val_label_pred = []

for x in val_predict_label:

val_label_pred.append(''.join(map(str, x[x!=10])))

val_char_acc = np.mean(np.array(val_label_pred) == np.array(val_label))

print('Epoch: {0}, Train loss: {1} \t Val loss: {2} \t Val Acc: {3}'.format(epoch, train_loss, val_loss, val_char_acc))

# 记录下验证集精度

if val_loss < best_loss:

best_loss = val_loss

# print('Find better model in Epoch {0}, saving model.'.format(epoch))

torch.save(model.state_dict(), './model.pt')

# 加载保存的最优模型

model.load_state_dict(torch.load('./model.pt'))

test_predict_label = predict(test_loader, model, 1)

print(test_predict_label.shape)

test_label = [''.join(map(str, x)) for x in test_loader.dataset.img_label]

test_predict_label = np.vstack([

test_predict_label[:, :11].argmax(1),

test_predict_label[:, 11:22].argmax(1),

test_predict_label[:, 22:33].argmax(1),

test_predict_label[:, 33:44].argmax(1),

test_predict_label[:, 44:55].argmax(1),

]).T

test_label_pred = []

for x in test_predict_label:

test_label_pred.append(''.join(map(str, x[x!=10])))

- 按照题目要求格式存储预测结果

import pandas as pd

df_submit = pd.read_csv('./data/mchar_sample_submit_A.csv')

df_submit['file_code'] = test_label_pred

df_submit.to_csv('submit.csv', index=None)

代码来源:DataWhale